My 2025 Genpact Content Moderator Interview Process and Questions

Genpact’s content moderator interview process had five stages: application and initial screening, online assessment, HR interview, technical/role-specific interview, and final evaluation and offer.

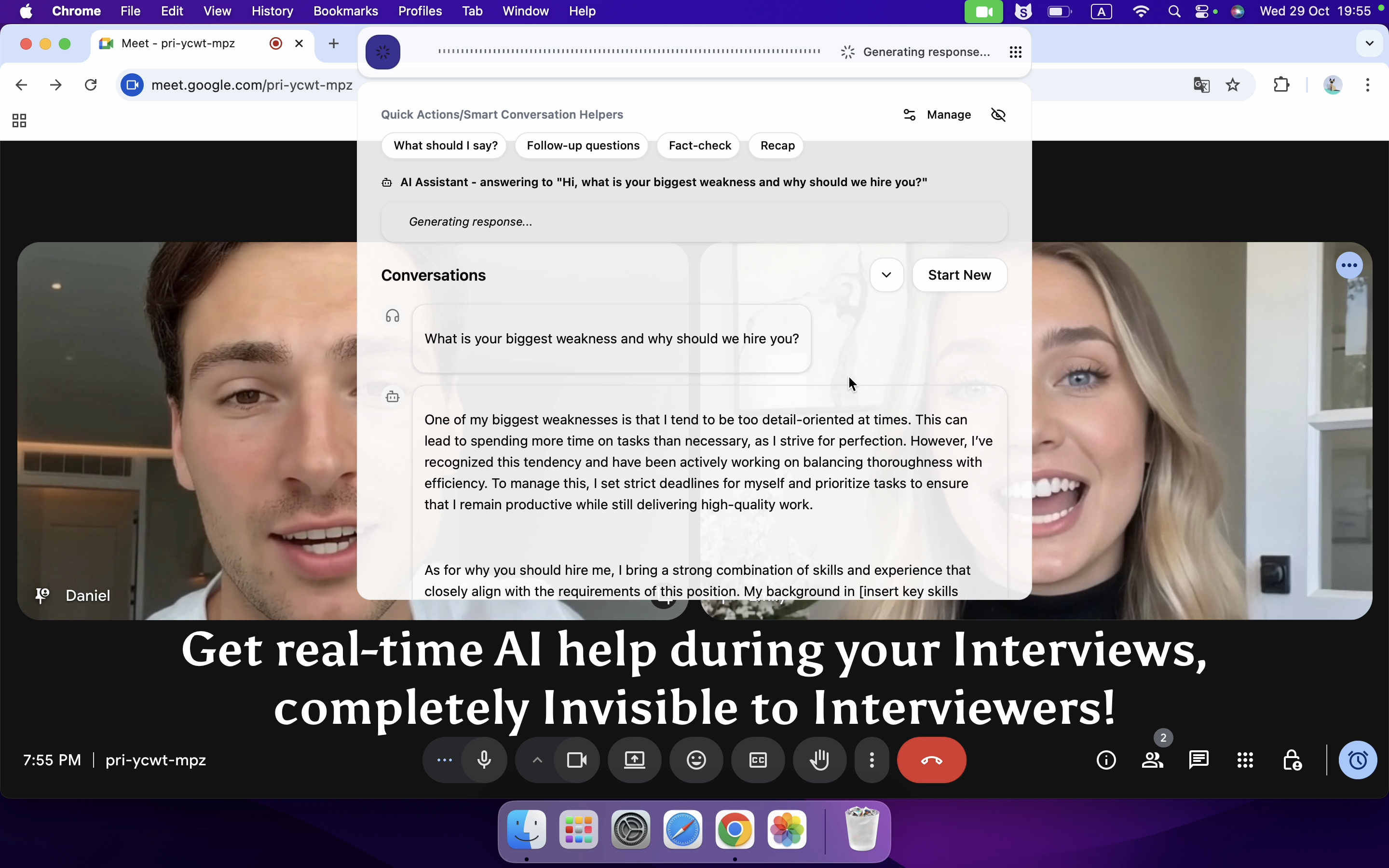

I am really grateful for the tool Linkjob.ai, and that's also why I'm sharing my entire OA interview experience here. Having an undetectable AI assistant during the interview is indeed very convenient.

Genpact Content Moderator Interview Process

Application and Initial Screening

The first step was filling out an online application, and I made sure my resume matched what the role was looking for. A few days later, I got an email letting me know I’d moved on to the next stage.

Online Assessment

After that came the online assessment. It tested my reading, analysis, and quick-decision skills. Some questions asked me to identify harmful or inappropriate content, so I really had to rely on my past experience and judgment. There were also tasks that checked my typing speed and attention to detail.

HR Interview

Once I passed the assessment, I moved on to the HR interview. Most of the conversation focused on my background, why I wanted this role, and how I handle pressure or repetitive tasks. I shared examples from previous jobs to show how I think and work. The interviewer was friendly, which made the whole conversation feel pretty relaxed.

Technical / Role-Specific Interview

This was definitely the most challenging part of the process. I spoke with a team lead who walked me through a few real moderation scenarios. I had to explain how I would handle sensitive cases, make quick decisions, and apply policy guidelines correctly. I also talked about the tools I’ve used before and how familiar I am with different platforms.

Final Evaluation and Offer

In the final stage, Genpact reviewed everything: my interview performance, my assessment results, and whether I’d be a good fit for the team. A few days later, I received the offer.

Genpact Content Moderator Interview Questions

I came across a wide range of questions during the interview. I pulled out a few that felt the most common, the kind that almost everyone is likely to be asked.

Genpact Content Moderator Interview Common Questions

Question 1: Can you describe your experience with content moderation tools and software?

Why They Ask: They want to see if I know the tools.

My Approach: During my previous roles, I worked extensively with content moderation platforms to review and manage user-generated content. Tools like internal dashboards and automated flagging systems helped identify potentially harmful material. I became familiar with features for filtering, reporting, and escalating content, and learned to navigate multiple tools simultaneously while maintaining accuracy and efficiency.

Question 2: How do you prioritize tasks when moderating a large volume of content?

Why They Ask: They want to see whether I can prioritize and stay accurate under pressure.

My Approach: When facing a lot of content, I focus on high-risk items first, such as posts that could escalate quickly or violate major guidelines. At the same time, I organize tasks into batches to stay efficient and avoid burnout. Using this approach, I can tackle urgent cases while still reviewing standard content carefully.
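To make the "high-risk first, then work in batches" idea concrete, here is a minimal sketch. It is only an illustration of the ordering I described, not a real Genpact tool; the risk labels, `build_queue`, and `next_batch` are all hypothetical names I made up for the example.

```python
import heapq
from itertools import count

# Hypothetical risk levels: a lower number means the item is reviewed sooner.
RISK_PRIORITY = {"high": 0, "medium": 1, "low": 2}


def build_queue(items):
    """Build a priority queue from (item_id, risk_label) pairs."""
    order = count()  # tie-breaker that keeps arrival order within a risk level
    heap = [(RISK_PRIORITY[risk], next(order), item_id) for item_id, risk in items]
    heapq.heapify(heap)
    return heap


def next_batch(heap, size):
    """Pop up to `size` items from the queue, highest risk first."""
    batch = []
    while heap and len(batch) < size:
        _, _, item_id = heapq.heappop(heap)
        batch.append(item_id)
    return batch


queue = build_queue([("a", "low"), ("b", "high"), ("c", "medium"), ("d", "high")])
first = next_batch(queue, 2)   # the two high-risk items, in arrival order
second = next_batch(queue, 5)  # whatever is left, medium before low
```

Working in fixed-size batches like this keeps the urgent items at the front while still giving natural stopping points for a break.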

Question 3: What criteria do you use to determine whether content violates community guidelines?

Why They Ask: They want to know whether I apply the guidelines consistently and understand where context matters.

My Approach: I rely on the platform’s policies as the baseline. Posts containing explicit language, hate speech, or threats are clear-cut, but context matters a lot. I consider intent, potential harm, and how the community might perceive the content. Consistency and fairness are my guiding principles in every decision.

Question 4: Can you provide an example of a challenging moderation decision you had to make?

Why They Ask: They test my problem-solving.

My Approach: Once, I encountered content that could be interpreted in different ways. I needed to carefully check context, related reports, and platform rules before deciding. Balancing the guidelines with the potential impact of removing or keeping the content was tricky, but in the end, I made a decision that minimized harm while following policy. Documenting the rationale was also important for accountability.

Linkjob AI is really easy to use. During the interview, I answered questions on my own whenever I could, and for the ones I wasn’t sure about, I used the AI’s responses. Before the interview, I tested its stealth features with a friend, and the interviewer couldn’t see anything at all.

Background Questions

These questions helped the interviewer see if I had the right skills and attitude for the job. I wanted to show that I understood what a content moderator does every day.

Here are some background questions I faced:

What experience do you have with content moderation?

Have you worked as a content moderator before?

Can you share a time when you had to make a tough decision at work?

How do you handle stress or pressure?

I answered these questions by sharing real stories from my past jobs. I talked about how I learned to spot harmful posts and how I stayed calm when things got busy. I also explained how I used content moderation tools to keep online spaces safe.

Why Content Moderator

The interviewer wanted to know why I chose this job. I told them I wanted to help make the internet safer for everyone. I explained that I care about online communities and want to protect people from harmful content.

I also said that content moderation lets me use my skills in a real way. I like working with rules and making fair choices. Being a content moderator means I can help others and learn new things every day.

Handling Sensitive Content

I also got hit with some tricky scenario-based questions in the interview. One of them asked how I’d handle sensitive content. It specifically described a post with hate speech and graphic images, and I had to explain my approach as a content moderator. Here’s how I responded:

First, I’d stay calm and stick strictly to the company’s content moderation guidelines. I always start by checking the platform’s rules thoroughly. If the content is clearly harmful, I’d remove it right away. If it’s a gray area, I’d rely on my training and reach out to my team for support.

Genpact uses AI-assisted workflows to help moderators spot risky posts. The AI flags potential violations, but I still need to use my own judgment to make the final call. I also learned that Genpact really cares about moderators’ wellbeing. They offer support when we’re dealing with disturbing content. So I always remind myself to take breaks, and if a scenario feels too heavy, I’ll talk to someone to process it.

Decision-Making

Decision-making came up a lot in my content moderator interview, and several questions were about borderline cases. For example, the interviewer asked what I would do if a post came close to breaking the rules without clearly crossing the line. I explained that Genpact expects content moderators to blend AI tools, policy enforcement, and human judgment. I always start by reviewing the community guidelines. If the AI flags something, I double-check it myself. Sometimes, I need to consider local online standards or cultural context.

Here’s how I approach these questions:

Review the platform’s policies and community guidelines.

Check if the AI flagged the content for a reason.

Think about the cultural context and local standards.

If I’m unsure, I escalate the case to a senior moderator.

I always document my decision for future reference.
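The steps above can be written down as a small decision function. This is a simplified sketch under my own assumptions, not Genpact's actual workflow: the `Review` fields, the `Action` values, and the rule that any context-sensitive case gets escalated are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Action(Enum):
    REMOVE = "remove"
    KEEP = "keep"
    ESCALATE = "escalate"


@dataclass
class Review:
    violates_policy: Optional[bool]  # None means the policy check was inconclusive
    ai_flagged: bool                 # whether the automated system flagged the post
    context_sensitive: bool          # cultural or local context could change the reading


def decide(review: Review) -> Tuple[Action, str]:
    """Return an action plus a short rationale to record with the decision."""
    if review.violates_policy is True:
        return Action.REMOVE, "clear policy violation"
    if review.violates_policy is None or review.context_sensitive:
        return Action.ESCALATE, "unclear case, sent to a senior moderator"
    if review.ai_flagged:
        return Action.KEEP, "AI flag reviewed manually, no violation found"
    return Action.KEEP, "no violation found"


action, rationale = decide(Review(violates_policy=None, ai_flagged=True, context_sensitive=False))
```

Returning the rationale alongside the action mirrors the last step in the list: every decision leaves a note that can be checked later.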

Content moderation is not just about following rules. It’s about protecting users and making fair choices. When I answer interview questions about decision-making, I show that I can balance technology, policy, and empathy.

Genpact Content Moderator Interview Insights

Role Expectations

The company expects a lot from its moderators. So I needed to do more than just remove bad content. I had to protect users, follow community guidelines, and keep up with new trends in content moderation.

Some key expectations I faced included:

Using my training to spot harmful content fast.

Making fair decisions, even when the rules felt unclear.

Staying updated on new risks and changes in online behavior.

Working with a team and sharing what I learned in training.

Handling sensitive topics with care and respect.

My Top Tips for Interview Success

Practice with real interview questions, not just generic ones.

Prepare strong, clear answers.

Stay calm and remember that every interviewer wants to see you at your best.

FAQ

What should I focus on when preparing for a Genpact content moderator interview?

I review my training and think about how I would handle tough scenarios.

How do I handle unexpected questions during the interview?

I use what I know from common interview questions. Sometimes, I ask for a moment to gather my thoughts.

How important is experience with content moderation tools?

Experience with content moderation tools is pretty important. During my Genpact content moderator interview, I talked about the specific platforms I’ve used in the past. I also shared how my training has helped me pick up new software quickly. Interviewers want to see that I can adapt to different tools efficiently.
