How I Aced the Goldman Sachs Software Engineer Online Assessment (OA) in 2025

I still remember refreshing my inbox and seeing the subject line:

“Goldman Sachs – HackerRank Online Coding Assessment”.

For me, that email was both exciting and terrifying. I’d heard all kinds of stories about the Goldman OA: “just two easy questions”, “super math-heavy”, “the MCQs are brutal”… none of them fully matched what I actually saw. So in this write-up I’ll walk through:

What my 2025 OA looked like (structure, timing, platform)

The two real coding questions I got and how I solved them

How the OA fed into later rounds (DSA, big-data design, system design, behavioural)

Concrete prep tips and problem lists that actually helped

Everything below is based on my own experience, plus checking it against recent reports and official prep resources so it’s not just “one random story on the internet”.

My Goldman Sachs OA Timeline (2025)

Role: Software Engineer / Engineering Summer Analyst

Location: EMEA office (remote OA)

Platform: HackerRank, fully proctored – webcam + screen + ID check (very standard now)

Structure in my round:

2 coding questions (LeetCode Easy–Medium level)

~10 CS/Math MCQs (DSA basics, complexity, simple probability / math)

Total time: ~90 minutes, with sub-timers per section

This roughly matches what other 2024–25 candidates report: Goldman typically uses a HackerRank OA with two DSA questions plus MCQs for engineering roles, sometimes 60, 90 or 120 minutes depending on program and region.

The OA was heavily proctored (camera, screen, tab-switch detection), so from the beginning I treated it exactly like a closed-book exam: no notes, no external websites, just me and the editor.

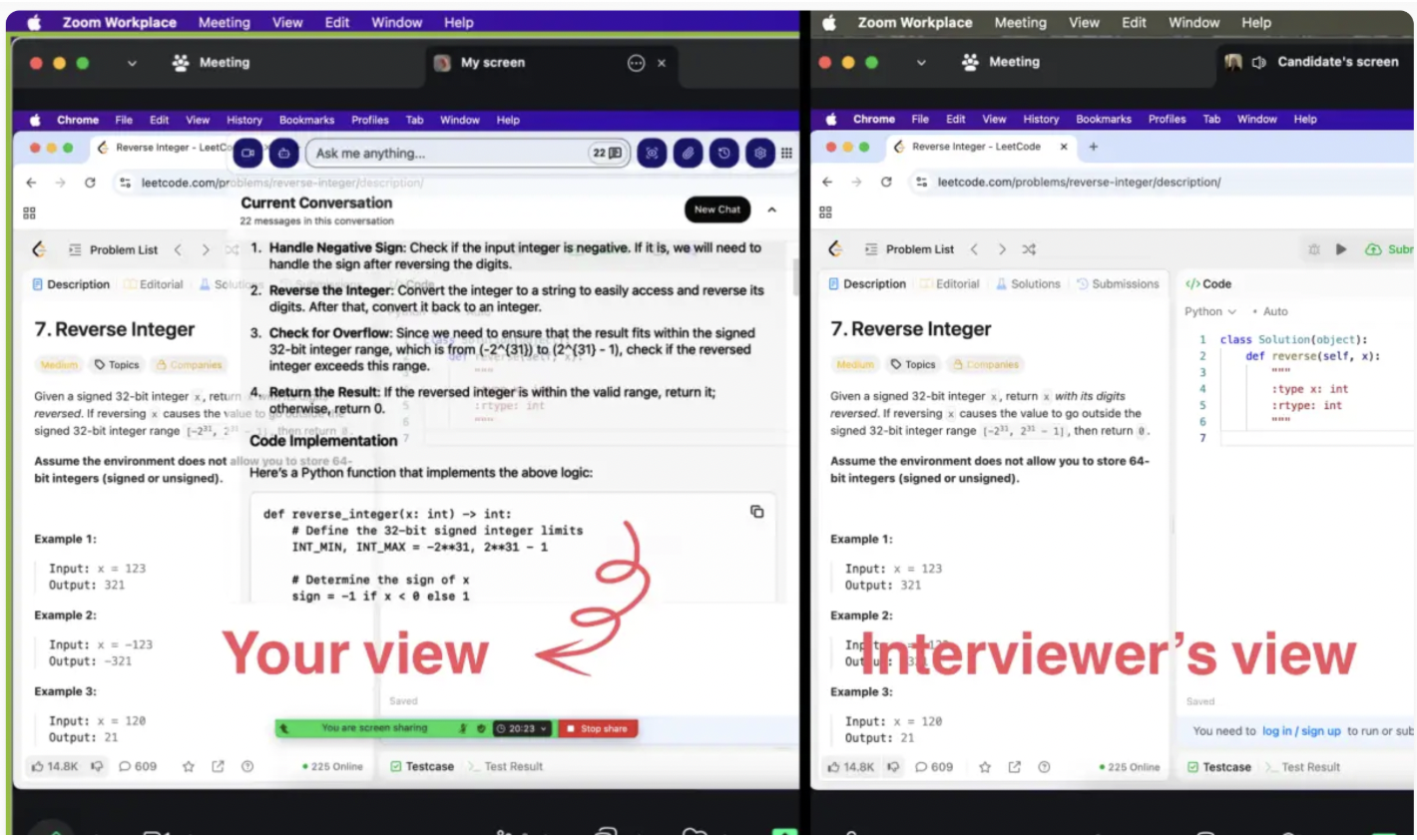

HackerRank Code assessment employs certain detection methods, such as 'suspicious score' detection. Because of this, I needed to find an application that truly provides AI support but is not easily detected. After searching extensively, I saw that the reviews for the LinkJob AI app were quite good. After trying it out, I found that its screenshot analysis feature was genuinely helpful for solving CodeSignal problems. I did use this feature in my formal test and successfully passed it, which is why I'm willing to share my interview experience here

Goldman Sachs Software Engineer Online Assessment Overview

Test Format and Platforms

When I prepared for the Goldman Sachs Software Engineer Online Assessment, I first studied the structure of the entire recruitment process. The assessment forms a critical stage in the selection pipeline. The process includes several rounds, each with a distinct focus:

| Stage | Description |
|---|---|
| Initial Screen / HireVue | AI-conducted video interview assessing personality and behavioral traits. |
| Online Assessment (OA) | 90-minute to 2-hour coding assessment focusing on Data Structures and Algorithms (DSA). |
| Onsite Round / Superday | 2 to 5 hours of back-to-back interviews covering coding, system design, and behavioral discussions. |
| Skillset & Team Fit Interview | Follow-up interview focusing on specific team needs and culture fit, if necessary. |
| Final Technical Conversation | Concluding technical discussion covering the candidate's resume and technical background. |

Coding Question Types

The assessment includes a mix of coding and reasoning questions. I noticed that the coding section often features problems related to data structures, algorithms, and logical puzzles. The most common question types include:

| Question Type | Example Question |
|---|---|
| Puzzle | The famous Egg Dropping Puzzle. |
| Array | Given an array of 99 distinct integers taken from the range [1, 100], exactly one integer from that range is missing. Find the missing number. |
| Frequency | Given an array of strings, find the string with the 3rd highest frequency. |
| Indices | Given an array of ‘n’ integers, your task is to print the indices of four integers (a, b, c, d) such that ‘a+b = c+d’. |

The difficulty level varies across sections. I observed that numerical calculations and reasoning questions tend to be more challenging, while abstract reasoning is relatively easier. Here is a summary of the difficulty distribution:

| Category | Questions | Difficulty | Details |
|---|---|---|---|
| Numerical Calculations | 8 | Hard | Includes probability, permutations, combinations, and basic math concepts. Time-constrained. |
| Numerical Reasoning | 12 | Hard | Basic puzzle-type questions with a mathematical approach. |
| Logical Reasoning | 12 | Medium | Basic pattern-type questions, including 2 on computer architecture. |
| Abstract Reasoning | 12 | Medium | Mostly involves figuring out the next figure in a series. Easiest section. |
| Diagrammatic Reasoning | 12 | Hard | Involves finding the value of '?' in given patterns. |
| Verbal | 10 | Hard | Comprehension-based with general stories and related questions. |

The 2 Real OA Coding Questions I Got

Question 1 – Toy Distribution in a Circle (Math / Modulo)

What the problem looked like

Paraphrasing from memory:

There are n students sitting in a circle, numbered from 1 to n. A teacher starts from student k and distributes m toys, passing one toy at a time to each student in order around the circle (wrapping from n back to 1).

Each student can receive multiple toys.

Your task is to return the index of the first student who does not receive any toy. If every student receives at least one toy, return -1.

This is a close cousin of the classic “distributing m items in a circle of size n starting from the k-th position” question that people often mention in GS OAs.

Naive idea

Simulate the full distribution with an array count[n], passing toys one by one (O(m)), then scan for the first zero (O(n)). That’s fine for small constraints but will time out if m is large (and in my test, it clearly could be).

Key insight

Think of the distribution in full rounds:

Each complete cycle around the circle gives one toy to everyone, so one cycle distributes n toys.

Number of full cycles: full = m // n

Remaining toys: rem = m % n, handed out starting from position k.

So every student first receives full toys. Then the rem extra toys go one each to students k, k+1, … (wrapping around).

That means:

If full == 0, some students get 1 toy and the others get 0. The first student with 0 toys is simply the first index that never appears among those rem positions.

If full >= 1 and rem == 0, everyone has at least one toy, so the answer is -1.

If full >= 1 and rem > 0, everyone still has at least one toy (the full cycles already gave one to each), so again the answer is -1.

In the variant I got, the constraints were such that m could be less than n, so the interesting case is full == 0. Then the students who get toys are:

k, k+1, ..., k+rem-1 (mod n, 1-based)

So the first student with 0 toys is simply the first index not in that segment. It becomes a clean modular arithmetic problem instead of heavy simulation.

Complexity

Time: O(1) (constant math)

Space: O(1)
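A minimal Python sketch of this case analysis follows. It assumes that "first" means the smallest 1-based index, and that 1 <= k <= n and m >= 0. Both function names are my own, hypothetical ones. The simulate function is the naive O(m + n) approach, kept only as a cross-check for the O(1) version.

```python
def first_student_without_toy(n: int, m: int, k: int) -> int:
    """Smallest 1-based index of a student with zero toys, or -1 if none.

    Students 1..n sit in a circle; toys go to k, k+1, ... (wrapping n -> 1).
    """
    if m >= n:
        return -1  # full >= 1: every student got a toy in the first cycle
    if m == 0:
        return 1  # no toys handed out at all
    last = k + m - 1  # position of the last toy if we never wrapped
    if last <= n:
        # covered block is k..last with no wrap-around
        return 1 if k > 1 else last + 1
    # wrapped: covered blocks are k..n and 1..(last - n)
    return last - n + 1


def simulate(n: int, m: int, k: int) -> int:
    """Naive O(m + n) simulation, useful only as a cross-check."""
    count = [0] * (n + 1)  # index 0 unused
    pos = k
    for _ in range(m):
        count[pos] += 1
        pos = pos % n + 1  # next student, wrapping n -> 1
    for i in range(1, n + 1):
        if count[i] == 0:
            return i
    return -1


print(first_student_without_toy(5, 3, 4))  # toys go to 4, 5, 1 -> prints 2
```

Comparing the two functions on small inputs is a quick way to gain confidence in the modular reasoning before relying on the O(1) version.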

What helped

I had practiced a few “circular distribution / Josephus / modular index” problems before, so spotting the pattern was quick. The main risk is over-engineering with simulation and running out of time.

This question is indeed a bit difficult. So I utilised the linkjob.ai tool, which remains invisible on screen and thus evades detection by interview platforms.

Question 2 – Decode Ways (Classic DP)

The second coding question was essentially the classic “Decode Ways” problem (LeetCode 91 style):

Given a string of digits s, where '1' -> 'A', '2' -> 'B', … '26' -> 'Z', return the number of ways to decode s. Leading zeros or invalid two-digit combinations are not allowed.

DP formulation

Let dp[i] be the number of ways to decode the prefix s[:i] (first i characters).

Transition:

Single-digit decode (last one digit):

If s[i-1] is between '1' and '9', we can decode it alone: dp[i] += dp[i-1]

Two-digit decode (last two digits):

If s[i-2:i] forms a valid number in [10, 26], then: dp[i] += dp[i-2]

Base cases:

dp[0] = 1 (empty string)

dp[1] = 1 if the first character is non-zero, else 0

The answer is dp[n].

Complexity

Time: O(n)

Space: O(1) if you just keep the last two states.

I wrote it in Python, with careful handling of cases like "0", "06", and long strings. Once the transitions are clear, it’s a very fast implementation.
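A minimal sketch of that DP in Python, keeping only the last two states. The name num_decodings is my own choice. Returning 1 for an empty string simply follows dp[0] = 1; LeetCode's version never passes an empty string.

```python
def num_decodings(s: str) -> int:
    """Count decodings of a digit string where '1'->'A', ..., '26'->'Z'."""
    if not s:
        return 1  # dp[0]: the empty prefix has exactly one (empty) decoding
    prev2 = 1                        # dp[i-2], starts as dp[0]
    prev1 = 1 if s[0] != "0" else 0  # dp[i-1], starts as dp[1]
    for i in range(2, len(s) + 1):
        cur = 0
        if s[i - 1] != "0":               # single-digit decode: '1'..'9'
            cur += prev1
        if 10 <= int(s[i - 2:i]) <= 26:   # two-digit decode: "10".."26"
            cur += prev2
        prev2, prev1 = prev1, cur
    return prev1
```

Note that int("06") is 6, so a leading-zero pair like "06" automatically fails the [10, 26] check.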

How I Prepared for the OA (What Actually Worked)

My prep strategy was to prepare slightly above OA level, so the first round felt manageable and I still had gas left for later interviews. I focused on three pillars:

1. Core DSA “Drill Problems”

I treated certain LeetCode-style questions as must-have patterns. Three of them actually showed up later in my onsite rounds:

Stock Price Analysis / Best Time to Buy and Sell Stock (once)

Keep min_price so far and update max_profit as max(max_profit, price - min_price).

Time O(n), space O(1).
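A short Python sketch of the one-pass idea (max_profit is my own name). It tracks the cheapest price seen so far and the best profit if we sold at today's price.

```python
def max_profit(prices: list[int]) -> int:
    """Best profit from one buy followed by one later sell (0 if none)."""
    min_price = float("inf")  # cheapest price seen so far
    best = 0
    for price in prices:
        min_price = min(min_price, price)
        best = max(best, price - min_price)  # profit if we sold today
    return best
```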

Valid Parentheses with Multiple Types

Classic stack: push left brackets, pop on right brackets and check matching.

Time O(n), space O(n).
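A Python sketch of the stack approach for the three bracket types (is_valid is my own name). Ignoring non-bracket characters is an assumption; the classic problem only contains brackets.

```python
def is_valid(s: str) -> bool:
    """Check that (), [] and {} are balanced and correctly nested."""
    closing_to_opening = {")": "(", "]": "[", "}": "{"}
    stack: list[str] = []
    for ch in s:
        if ch in "([{":
            stack.append(ch)  # remember the opener we still need to close
        elif ch in closing_to_opening:
            # a closer must match the most recent unmatched opener
            if not stack or stack.pop() != closing_to_opening[ch]:
                return False
        # any other character is ignored (assumption)
    return not stack  # leftover openers mean the string is unbalanced
```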

Longest Palindromic Substring

Center expansion: treat each index (and gap between indices) as a center and expand outward while characters match.

Time O(n²), space O(1).
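A minimal Python sketch of center expansion (longest_palindrome is my own name). There are 2n - 1 centers: odd-length palindromes center on an index and even-length ones on the gap between two indices.

```python
def longest_palindrome(s: str) -> str:
    """Longest palindromic substring via center expansion."""

    def expand(lo: int, hi: int) -> tuple[int, int]:
        # grow outward while both ends match; return inclusive bounds
        while lo >= 0 and hi < len(s) and s[lo] == s[hi]:
            lo -= 1
            hi += 1
        return lo + 1, hi - 1

    best_lo, best_hi = 0, -1  # empty window to start
    for center in range(len(s)):
        # odd-length center on an index, even-length center on the gap after it
        for lo, hi in (expand(center, center), expand(center, center + 1)):
            if hi - lo > best_hi - best_lo:
                best_lo, best_hi = lo, hi
    return s[best_lo:best_hi + 1]
```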

I literally wrote them up in a mini “cheat booklet” (which I didn’t use during the test of course) with:

Problem statement

Short Chinese explanation of the idea

Time/space complexity

Clean Python implementation

That process itself was how I internalised the patterns.

2. Up-levelling to harder DP & string questions

Because Goldman is known to like DP and matrix DP questions such as Distinct Subsequences and Maximal Square, I made sure to:

Practise subsequence counting / inclusion DP

Practise 2D DP on grids (squares, paths, areas)

This paid off directly in my later rounds (more on that below).

3. Simulating the OA environment

Using public HackerRank & LeetCode contests plus timing myself for 90-minute 2-question sessions was crucial. I’d sit with:

Only one monitor

No phone nearby

A simple timer and a scratchpad

By the time I sat the real OA, that environment felt normal rather than stressful.

What Came After the OA: The Rest of the Goldman Sachs Process

Passing the OA moved me to multiple interview rounds over the next couple of weeks. Different offices / programs tweak the flow, but mine looked like this, which aligns well with other recent experiences.

Round 1 – DSA Focus (Distinct Subsequences + In-place Sort)

Format: 60 minutes, 2 questions

Distinct Subsequences (DP)

Same as the LeetCode classic: count the number of ways s can form t as a subsequence. They cared about both the O(n·m) DP and how to optimise space.
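A sketch of the space-optimised version, assuming the LeetCode 115 definition (count the subsequences of s that equal t); num_distinct is my own name. The 2D table collapses into one row of length len(t) + 1. Iterating that row right to left means each update still reads the value from before the current character of s.

```python
def num_distinct(s: str, t: str) -> int:
    """Number of distinct subsequences of s that equal t."""
    # dp[j] = ways to form t[:j] from the prefix of s processed so far
    dp = [0] * (len(t) + 1)
    dp[0] = 1  # the empty target can always be formed exactly one way
    for ch in s:
        # right to left so dp[j - 1] still holds the value from before ch
        for j in range(len(t), 0, -1):
            if t[j - 1] == ch:
                dp[j] += dp[j - 1]
    return dp[len(t)]
```

This keeps the O(n·m) time but drops the space from O(n·m) to O(m).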

In-place Sorting Algorithm

Implement an in-place sort with no extra array allowed.

We discussed quicksort vs heapsort; I ended up implementing quicksort and carefully explained partitioning and worst-case behaviour.
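For reference, here is a minimal in-place quicksort sketch in Python. It uses Lomuto partitioning with a random pivot, which is one common variant and not necessarily what I wrote on the day. An explicit stack of index ranges replaces recursion to sidestep Python's default recursion limit.

```python
import random


def quicksort(a: list[int]) -> None:
    """Sort a in place: elements are only swapped within the list itself."""

    def partition(lo: int, hi: int) -> int:
        # Lomuto partition around a randomly chosen pivot
        pivot_index = random.randint(lo, hi)  # inclusive bounds
        a[pivot_index], a[hi] = a[hi], a[pivot_index]
        pivot = a[hi]
        store = lo
        for j in range(lo, hi):
            if a[j] < pivot:
                a[store], a[j] = a[j], a[store]
                store += 1
        a[store], a[hi] = a[hi], a[store]  # pivot lands in its final slot
        return store

    stack = [(0, len(a) - 1)]  # pending (lo, hi) ranges instead of recursion
    while stack:
        lo, hi = stack.pop()
        if lo >= hi:
            continue
        p = partition(lo, hi)
        stack.append((lo, p - 1))
        stack.append((p + 1, hi))
```

Expected time is O(n log n). The worst case is still O(n²), for example when most keys are equal, because this Lomuto variant sends all keys equal to the pivot to one side. That kind of worst-case behaviour is the trade-off worth explaining against heapsort's guaranteed O(n log n).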

Key learning: they cared less about the final code than about my step-by-step optimisation story (starting from brute force, improving the complexity, and articulating time/space trade-offs).

Round 2 – DSA + Big Data Processing

Format: 60 minutes, again split into 2 halves.

Maximal Square (2D DP)

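Given a binary matrix, compute the area of the largest square containing only 1s.

I used the standard DP formula, where dp[i][j] is the side length of the largest all-1s square whose bottom-right corner is (i, j):

dp[i][j] = 0 if matrix[i][j] == 0 else 1 + min(top, left, top-left)

Then I took the maximum over all dp[i][j] and squared it, since dp stores a side length and the question asks for an area.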
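A sketch of that DP in Python, assuming the matrix is a list of lists of 0/1 integers (LeetCode's version uses '0'/'1' characters, which would need a one-line tweak); maximal_square is my own name. A zero-padded top row and left column remove the boundary checks.

```python
def maximal_square(matrix: list[list[int]]) -> int:
    """Area of the largest all-1s square in a 0/1 matrix."""
    if not matrix or not matrix[0]:
        return 0
    rows, cols = len(matrix), len(matrix[0])
    # dp[i][j] = side of the largest all-1s square whose bottom-right corner
    # is matrix[i-1][j-1]; row 0 and column 0 are zero padding
    dp = [[0] * (cols + 1) for _ in range(rows + 1)]
    best_side = 0
    for i in range(1, rows + 1):
        for j in range(1, cols + 1):
            if matrix[i - 1][j - 1] == 1:
                dp[i][j] = 1 + min(dp[i - 1][j], dp[i][j - 1], dp[i - 1][j - 1])
                best_side = max(best_side, dp[i][j])
    return best_side * best_side
```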

External Sorting Question

“You have a 2GB file and only 250MB memory. How do you sort it?”

They wanted to hear external merge sort:

Split into chunks that fit in memory

Sort each chunk individually and spill to disk

Merge k sorted runs with a min-heap, considering disk access patterns
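A toy Python sketch of the two phases follows, under two assumptions. First, the file holds one integer per line. Second, chunk_lines (a hypothetical parameter) is the number of lines that fit in memory. heapq.merge performs the k-way merge lazily using an internal heap, so it holds roughly one element per run rather than the whole file. A real implementation would also tune read/write buffer sizes for sequential disk access.

```python
import heapq
import os
import tempfile
from typing import Iterator


def _read_ints(path: str) -> Iterator[int]:
    # stream one sorted run back from disk, line by line
    with open(path) as f:
        for line in f:
            yield int(line)


def external_sort(in_path: str, out_path: str, chunk_lines: int) -> None:
    run_paths: list[str] = []
    try:
        # Phase 1: read memory-sized chunks, sort each, spill it to disk as a run
        with open(in_path) as src:
            while True:
                chunk = [int(line) for _, line in zip(range(chunk_lines), src)]
                if not chunk:
                    break
                chunk.sort()
                fd, path = tempfile.mkstemp(suffix=".run")
                with os.fdopen(fd, "w") as run:
                    run.writelines(f"{x}\n" for x in chunk)
                run_paths.append(path)
        # Phase 2: k-way merge of the sorted runs through a min-heap
        with open(out_path, "w") as out:
            for x in heapq.merge(*(_read_ints(p) for p in run_paths)):
                out.write(f"{x}\n")
    finally:
        for p in run_paths:
            os.remove(p)  # clean up the temporary runs
```

For the 2GB / 250MB case, Phase 1 produces roughly ten runs, each sized to fit comfortably in memory. Phase 2 then only needs a small read buffer per run.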

This round was clearly testing comfort with both algorithmic coding and scalability / memory constraints.

Round 3 – DSA + Low-Level Design

First 30 minutes: Maze / Graph Question

Solve a maze shortest path (Maze II-style) using BFS and then discuss optimisations.

Focus: modelling state, visited set, time complexity, and how to handle weighted vs unweighted edges.
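A plain grid BFS sketch in Python. It assumes '.' marks an open cell, '#' a wall, and each move goes one cell in one of four directions; shortest_path is my own name. This covers only the unweighted case. In the rolling-ball Maze II variant, moves have different lengths, so the same state modelling is commonly paired with Dijkstra's algorithm (a priority queue) instead of a FIFO queue.

```python
from collections import deque


def shortest_path(grid: list[str], start: tuple[int, int], goal: tuple[int, int]) -> int:
    """Fewest 4-directional steps from start to goal, or -1 if unreachable."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}  # doubles as the visited set
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return dist[(r, c)]
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == "." and (nr, nc) not in dist):
                dist[(nr, nc)] = dist[(r, c)] + 1  # first visit is shortest in BFS
                queue.append((nr, nc))
    return -1
```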

Second 30 minutes: Low-Level Design (Splitwise)

Design a simplified Splitwise system:

Classes for User, Expense, Group, Balance

How to store who owes whom

How to handle different split types (equal, exact, percentage)

No production-level code, but they wanted proper class diagrams, interfaces, relationships, and how I’d extend / scale it.
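A compressed Python sketch of the core model follows. The class and method names (User, Expense, SplitType, Group.add_expense) are hypothetical, not anything the interviewer specified, and balances live in a dict rather than a separate Balance class. Amounts are integer cents to avoid floating-point rounding. The pairwise ledger nets debts in opposite directions, so each pair has at most one entry.

```python
from dataclasses import dataclass, field
from enum import Enum


class SplitType(Enum):
    EQUAL = "equal"
    EXACT = "exact"
    PERCENT = "percent"


@dataclass(frozen=True)
class User:
    user_id: str
    name: str


@dataclass
class Expense:
    paid_by: User
    amount_cents: int
    participants: list[User]  # assumed distinct
    split_type: SplitType = SplitType.EQUAL
    values: list[int] = field(default_factory=list)  # exact cents or percentages

    def shares(self) -> dict[User, int]:
        """How many cents each participant owes for this expense."""
        if self.split_type is SplitType.EQUAL:
            base, extra = divmod(self.amount_cents, len(self.participants))
            # spread leftover cents over the first `extra` participants
            return {u: base + (1 if i < extra else 0)
                    for i, u in enumerate(self.participants)}
        if self.split_type is SplitType.EXACT:
            if sum(self.values) != self.amount_cents:
                raise ValueError("exact shares must add up to the total")
            return dict(zip(self.participants, self.values))
        if sum(self.values) != 100:
            raise ValueError("percentages must add up to 100")
        shares = {u: self.amount_cents * p // 100
                  for u, p in zip(self.participants, self.values)}
        # integer division may drop a few cents; give them to the first participant
        shares[self.participants[0]] += self.amount_cents - sum(shares.values())
        return shares


class Group:
    def __init__(self, name: str, members: list[User]) -> None:
        self.name = name
        self.members = members
        # balances[(debtor, creditor)] = cents the debtor owes the creditor
        self.balances: dict[tuple[User, User], int] = {}

    def add_expense(self, expense: Expense) -> None:
        for user, share in expense.shares().items():
            if user != expense.paid_by:
                self._record(user, expense.paid_by, share)

    def _record(self, debtor: User, creditor: User, cents: int) -> None:
        back = self.balances.pop((creditor, debtor), 0)  # opposite-direction debt
        if back > cents:
            self.balances[(creditor, debtor)] = back - cents
        elif cents > back:
            key = (debtor, creditor)
            self.balances[key] = self.balances.get(key, 0) + cents - back
```

Adding a new split type means adding an enum member and a branch in shares() (or a strategy object per split type if the list keeps growing). The ledger code stays untouched.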

The interviewer really cared about a structured approach:

Clarify requirements

Identify core entities and flows

Sketch class design & interactions

Discuss scalability, edge cases, and potential refactors

Round 4 – System Design + Design Patterns

This was easily my most challenging round.

Deep dive into one of my projects:

How the architecture works end-to-end

Trade-offs behind tech choices

Then a discussion on design patterns and OOP principles:

They asked specifically about a builder pattern in a multi-threaded context.

I struggled here and had to honestly say “I don’t know” on some concurrency implications.

The main lesson: it’s not enough to just “know” patterns by name – you need to understand where and why you’d use them and be able to discuss concurrency, performance, and failure modes.
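A common answer to the builder-in-a-multi-threaded-context question is this. Keep the builder mutable but confined to a single thread, and have build() return an immutable object that can then be shared across threads without locks. The sketch below uses hypothetical names (OrderRequest, OrderRequestBuilder) and illustrates the general pattern, not the answer the interviewer was looking for.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class OrderRequest:
    """Immutable product: safe to share between threads once built."""
    symbol: str
    quantity: int
    limit_price: Optional[float] = None


class OrderRequestBuilder:
    """Mutable builder with no locking: use each instance from one thread only."""

    def __init__(self) -> None:
        self._symbol: Optional[str] = None
        self._quantity = 0
        self._limit_price: Optional[float] = None

    def symbol(self, symbol: str) -> "OrderRequestBuilder":
        self._symbol = symbol
        return self

    def quantity(self, quantity: int) -> "OrderRequestBuilder":
        self._quantity = quantity
        return self

    def limit_price(self, price: float) -> "OrderRequestBuilder":
        self._limit_price = price
        return self

    def build(self) -> OrderRequest:
        # validate once, then copy the fields into a new immutable object
        if not self._symbol or self._quantity <= 0:
            raise ValueError("symbol and a positive quantity are required")
        return OrderRequest(self._symbol, self._quantity, self._limit_price)


order = OrderRequestBuilder().symbol("GS").quantity(100).build()
```

The trade-off to discuss is that sharing one builder instance across threads would need synchronisation around every setter and build(). Giving each thread its own builder avoids that entirely.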

Round 5 – Behavioural + Hiring Manager

Last round was 30 minutes, mostly behavioural questions:

Times I showed leadership

A conflict within a team and how I resolved it

A failure or bug I introduced and what I learned

This round is very much STAR-format storytelling and culture fit. They looked for:

Clear communication

Ownership mindset

Ability to reflect on mistakes and learn

No trick questions here, but you do need prepared stories mapped to common themes: ownership, teamwork, learning, resilience.

How LinkjobAI Gave Me a Real Advantage in Interviews

One tool that made a measurable difference for me during these interviews was LinkjobAI.

During live case and technical interviews, LinkjobAI listened invisibly in the background and helped me in three critical ways:

It captured complex case prompts accurately, so I didn’t miss key constraints or numbers.

It helped me organize my thoughts into structured bullet points in real time.

It reinforced clear, client-ready language when I needed to summarize or conclude.

Because LinkjobAI runs as a 100% invisible desktop application, it worked seamlessly during Zoom and online interview setups without appearing in screen sharing or recordings. That allowed me to stay focused on eye contact, communication, and reasoning—rather than scrambling to remember every detail.

Instead of replacing my thinking, it supported my structure, which is exactly what Goldman Sachs evaluates.

FAQ

How did I handle test anxiety during the assessment?

I practiced full-length mock tests before the real assessment. I focused on my breathing and reminded myself of my preparation. I kept a bottle of water nearby and took short pauses to reset my focus.

What should I do if I get stuck on a coding problem?

I move to the next question and return later. I write down the problem statement and break it into smaller parts. I test simple cases first. This approach helps me regain momentum.

Can I use external resources during the OA?

No. External software, websites and notes are prohibited during the proctored online coding assessment, which is why I treated it as a closed-book exam.

How important is explaining my code in the OA?

I always explain my logic clearly in comments. This shows my understanding and helps reviewers follow my thought process. Clear explanations can set me apart from other candidates.

How should I use AI tools like Linkjob.ai?

You can simply use its screenshot analysis feature directly. The AI will provide the coding approach and a reference answer. The app is completely invisible/stealthy, so you can use it without worry.
