How I Navigated the 2026 OpenAI Interview Process: A Guide

The OpenAI interview process is relatively standardized, but each round is quite challenging. It typically includes a resume screening, technical phone interviews, an online assessment, and a virtual on-site interview. The entire process takes about 4-6 weeks, and many candidates are eliminated in each round. From what I've learned, the interview pass rate is around 5-10%.

A key characteristic of OpenAI's interviews is their strong emphasis on combining foundational theory with practical application. They don't just check if I can write code; they also look at the depth of my understanding of machine learning, my grasp of cutting-edge technologies, and whether I have an engineering mindset and research-oriented thinking.

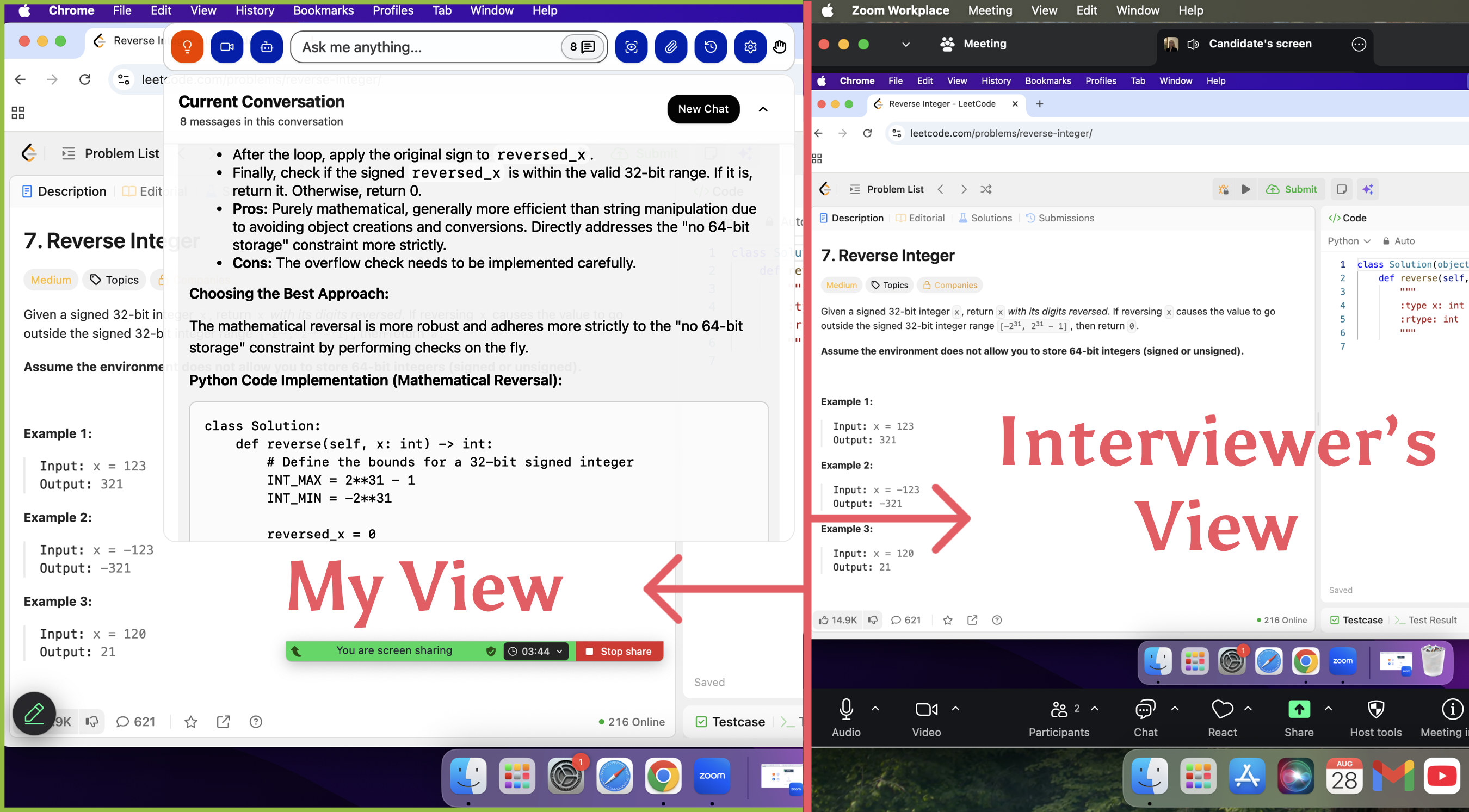

I’m really grateful to Linkjob.ai for helping me pass my interview, which is why I’m sharing my interview process and questions here. Having an invisible AI interview assistant during the interview indeed provides a significant edge.

OpenAI Interview Stages and Actual Questions

Recruiter Call

There isn't much to say about the HR call. Like most others, this step was about understanding my basic background and cultural fit. The interviewer just asked me why I chose OpenAI and a few questions related to my resume, and that was it. The content was very routine.

I talked about my background, my passion for AI, and my reasons for wanting to join OpenAI. I particularly emphasized my experience in machine learning and my interest in responsible AI development.

Technical Screening

Coding Challenge

The problem was to build a model training pipeline, with a key focus on handling streaming data. The requirements included supporting checkpointing and resuming, concurrent processing with multiple workers, as well as considering exception logging and data consistency.

After I finished, the interviewer followed up with questions about how to design checkpoints to avoid partial failures and how to ensure no information is lost during log aggregation. Getting the code to run is the bare minimum; the real assessment is of code robustness and structure.
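To make the checkpoint follow-up concrete, here is a minimal sketch of one common way to avoid half-written checkpoints: write to a temporary file, then rename it over the old one. The function names, the JSON format, and the `state` layout are my own assumptions for illustration, not what the interviewer asked for or what OpenAI uses.

```python
import json
import os
import tempfile


def save_checkpoint(state: dict, path: str) -> None:
    """Write `state` to `path` so a reader never sees a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file lives in the same directory (same filesystem) as the
    # target, so the final rename does not have to copy data.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # push the bytes to disk before renaming
        os.replace(tmp_path, path)  # atomic rename on POSIX filesystems
    except BaseException:
        # Remove the partial temp file; the previous checkpoint is untouched.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path: str, default: dict) -> dict:
    """Return the last complete checkpoint, or a copy of `default` if none exists."""
    try:
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    except FileNotFoundError:
        return dict(default)
```

If a worker crashes mid-write, the old checkpoint is still intact, so resuming only repeats the work done since the last successful save.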

Infra System Design

The problem was to "Design a distributed training platform for foundation models." I needed to consider sharded training, logging, fault tolerance, and model and data versioning. This meant I had to think from the perspective of large-scale models, not just small engineering modules. In this round, the interviewer repeatedly asked me, "If you had to scale to 2000 GPUs, how would you partition the parameters?" and "If a task fails, how would you automatically recover?"


Virtual Onsite

Coding+Debugging

The first round was to build an async training job manager. I was asked to implement an asynchronous training job scheduler that supported job prioritization and resource quotas, and could handle OOM (out-of-memory) errors by automatically rolling back. The interviewer even changed the interface on the spot to see if I could debug the resulting issue. Midway through, he added a constraint: "If a task hangs for a long time, how does your scheduler prevent resource starvation?"
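A stripped-down sketch of the kind of scheduler described above, using `asyncio` and a heap. Everything here (`Job`, `Scheduler`, the GPU-count quota, the status strings) is hypothetical and much simpler than what I wrote in the interview. It shows three ideas: strict priority ordering, a resource quota, and a per-job timeout so a hung task eventually gives its resources back. A real rollback on OOM would hook in where the `"oom"` status is recorded.

```python
import asyncio
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Awaitable, Callable, Dict, List


@dataclass(order=True)
class Job:
    priority: int                      # lower value runs first
    seq: int                           # tie-breaker: submission order
    name: str = field(compare=False)
    gpus: int = field(compare=False)
    run: Callable[[], Awaitable[None]] = field(compare=False)


class Scheduler:
    def __init__(self, total_gpus: int, timeout_s: float) -> None:
        self.free_gpus = total_gpus
        self.timeout_s = timeout_s
        self.queue: List[Job] = []
        self.results: Dict[str, str] = {}
        self._seq = itertools.count()

    def submit(self, name: str, priority: int, gpus: int,
               run: Callable[[], Awaitable[None]]) -> None:
        heapq.heappush(self.queue, Job(priority, next(self._seq), name, gpus, run))

    async def _execute(self, job: Job) -> None:
        try:
            # A hung job is cancelled after timeout_s instead of holding GPUs forever.
            await asyncio.wait_for(job.run(), timeout=self.timeout_s)
            self.results[job.name] = "ok"
        except asyncio.TimeoutError:
            self.results[job.name] = "timeout"
        except MemoryError:
            self.results[job.name] = "oom"  # a rollback hook would go here
        finally:
            self.free_gpus += job.gpus      # always return the quota

    async def run_all(self) -> Dict[str, str]:
        running = set()
        while self.queue or running:
            # Strict priority: only the head of the queue may start.
            while self.queue and self.queue[0].gpus <= self.free_gpus:
                job = heapq.heappop(self.queue)
                self.free_gpus -= job.gpus
                running.add(asyncio.create_task(self._execute(job)))
            if not running:
                # Nothing is running, so all GPUs are free and the head job
                # still does not fit: it can never run.
                job = heapq.heappop(self.queue)
                self.results[job.name] = "rejected"
                continue
            _, running = await asyncio.wait(
                running, return_when=asyncio.FIRST_COMPLETED
            )
        return self.results
```

Strict priority keeps the logic easy to reason about, at the cost of head-of-line blocking: a large high-priority job can hold back smaller jobs that would fit. Whether to allow backfilling is exactly the kind of trade-off the interviewer wanted me to talk through.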

The second round was an embedding service API. I had to design a service that could quickly return text embeddings. The key requirements were to support hot updates and A/B testing. In addition to writing the API, the interviewer dug into my cache design, asking how I would achieve zero downtime with hot updates and how I would control tail latency during a surge in QPS.
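For the hot-update and cache questions, this is roughly the shape of the idea I described, reduced to a sketch. The class and function names (`EmbeddingService`, `hot_update`, `ab_bucket`) are made up for illustration, and the "model" is just any callable from text to a vector. The key points are that the active model is swapped with a single reference assignment, cache keys include the model version so stale vectors are never served after an update, and A/B assignment is a stable hash of the user ID.

```python
import hashlib
import threading
from collections import OrderedDict
from typing import Callable, List, Tuple

Embedder = Callable[[str], List[float]]


class EmbeddingService:
    def __init__(self, version: str, embed: Embedder, cache_size: int = 1024) -> None:
        self._active = (version, embed)      # swapped as one reference
        self._cache = OrderedDict()          # (version, text) -> vector, LRU order
        self._cache_size = cache_size
        self._lock = threading.Lock()

    def hot_update(self, version: str, embed: Embedder) -> None:
        # In-flight requests keep the model they already read;
        # new requests pick up the new one. No restart needed.
        self._active = (version, embed)

    def get(self, text: str) -> Tuple[str, List[float]]:
        version, embed = self._active        # snapshot once per request
        key = (version, text)
        with self._lock:
            if key in self._cache:
                self._cache.move_to_end(key)
                return version, self._cache[key]
        vector = embed(text)                 # compute outside the lock
        with self._lock:
            self._cache[key] = vector
            self._cache.move_to_end(key)
            while len(self._cache) > self._cache_size:
                self._cache.popitem(last=False)  # evict least recently used
        return version, vector


def ab_bucket(user_id: str, treatment_share: float) -> str:
    """Deterministically assign a user to 'treatment' or 'control'."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    fraction = int.from_bytes(digest[:8], "big") / 2**64  # in [0, 1)
    return "treatment" if fraction < treatment_share else "control"
```

This sketch does not address tail latency under a QPS surge; in the interview that part of the discussion was about batching, load shedding, and cache hit rates rather than code.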

Overall, these two rounds really tested my practical engineering implementation and design trade-off skills.

Project Deep Dive

In this round, the interviewer asked me to talk about an infra + model project I had worked on. As we talked, they asked about scalability, failover, and maintainability, and dug into my design choices and monitoring methods. What I remember most is that the interviewer was asking "why" almost the entire time. For example, when I talked about using a certain distributed training framework, he pressed me on whether the checkpointing mechanism could guarantee strong consistency and how metric monitoring could quickly pinpoint a failed node.

I personally feel that OpenAI doesn't really care about whether I can fine-tune a model. They're more interested in how I scale training, deploy services, and debug problems. Many of the questions were more infrastructure-focused, but I had to explain my design choices from an ML perspective. The atmosphere wasn't as tense as I'd imagined. If I got stuck, I could discuss the trade-offs with the interviewer. The key is to show that I'm reliable, my systems are stable, and my designs are sound.

OpenAI Interview Questions and Sample Answers

When I was preparing for my interview, I looked at a lot of real interview questions shared on various platforms. Here, I’m sharing a collection of different question types that I compiled, along with my thought process for solving them.

Coding

These questions test your ability to write performant, debuggable code under real-world constraints. In an OpenAI ML coding interview, you may need to optimize vector operations or build models from scratch. You'll also face debugging scenarios that reflect challenges from OpenAI's deployment environments, making clarity and iteration essential.

Implement a transformer attention mechanism from scratch.

The requirement is to implement the transformer attention mechanism from scratch. You cannot use any existing libraries, only basic numpy or PyTorch tensor operations.

This question tests a deep understanding of the attention mechanism. You need to know the calculation process for the query, key, and value; the principle of scaled dot-product attention; and the implementation details of multi-head attention. An analysis of time and space complexity is also required.

Sample Answer: My approach would be to first implement a scaled dot-product attention function. This function would take the query, key, and value tensors as input. I'd calculate the dot product of Q and K transposed, then divide the result by the square root of the key dimension so that large dot products don't saturate the softmax and cause vanishing gradients. Next, I'd apply a softmax function to get the attention weights. Finally, I'd multiply the weights by the value tensor.

To implement the full multi-head attention mechanism, I would first project the input into query, key, and value tensors and split them into multiple "heads." I would then apply the scaled dot-product attention function to each head in parallel. Finally, I would concatenate the output of all heads and pass it through a final linear layer. I'd also be prepared to discuss the complexity: the attention computation takes O(N² · d) time and O(N²) memory for the attention weights, where N is the sequence length and d is the model dimension.
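Here is a compact NumPy sketch of the two pieces described above. It assumes a single unbatched sequence of shape `(N, d_model)`, square projection matrices, no masking, and no bias terms; the function and parameter names are my own.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v):
    """q, k, v: (..., N, d_k). Returns (output, attention weights)."""
    d_k = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)  # (..., N, N)
    weights = softmax(scores, axis=-1)                  # each row sums to 1
    return weights @ v, weights


def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (N, d_model); every w_*: (d_model, d_model)."""
    n, d_model = x.shape
    d_head = d_model // num_heads

    def split(t):  # (N, d_model) -> (heads, N, d_head)
        return t.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    heads, _ = scaled_dot_product_attention(q, k, v)       # (heads, N, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)  # (N, d_model)
    return concat @ w_o
```

The `(N, N)` score matrix is where the quadratic cost in sequence length comes from, which is a natural lead-in to the complexity discussion.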

Explain the mathematical foundation of backpropagation and implement it.

You must start with the mathematical principles of the backpropagation algorithm, and then manually implement the backpropagation process for a simple neural network.

This question seems basic, but many people make mistakes in the mathematical derivation. You need to clearly explain the chain rule, the gradient calculation process, and how to update parameters.

Sample Answer: Backpropagation is an algorithm that uses the chain rule to efficiently calculate the gradients of the loss function with respect to a neural network's weights. The core idea is to propagate the error signal backward from the output layer to the input layer.

My implementation would involve two main steps. First, the forward pass: I'd calculate the output of the network for a given input, storing the intermediate activations needed later. Second, the backward pass: I'd start by computing the gradient of the loss with respect to the output. Then, using the chain rule, I'd work backward layer by layer, multiplying the upstream gradient by the derivative of the local activation function to get each layer's weight gradients, and by the layer's weights to pass the gradient further back. Finally, I would use these gradients to update the network's weights using an optimization algorithm like stochastic gradient descent.
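As an illustration of those two passes, here is a minimal NumPy sketch for a two-layer network with a tanh hidden layer and a mean squared error loss. The architecture, loss, and function names are choices I made for the example; the question itself only said "a simple neural network."

```python
import numpy as np


def forward(params, x):
    """Forward pass; returns the prediction and the values cached for backprop."""
    w1, b1, w2, b2 = params
    a1 = np.tanh(x @ w1 + b1)   # hidden activations
    y_hat = a1 @ w2 + b2        # linear output layer
    return y_hat, (x, a1)


def loss_fn(y_hat, y):
    # Half the squared error, averaged over the batch.
    return 0.5 * np.mean(np.sum((y_hat - y) ** 2, axis=1))


def backward(params, cache, y_hat, y):
    """Apply the chain rule from the loss back to every parameter."""
    w1, b1, w2, b2 = params
    x, a1 = cache
    n = x.shape[0]
    d_yhat = (y_hat - y) / n           # dL/dy_hat
    d_w2 = a1.T @ d_yhat               # dL/dW2
    d_b2 = d_yhat.sum(axis=0)
    d_a1 = d_yhat @ w2.T               # gradient flowing into the hidden layer
    d_z1 = d_a1 * (1.0 - a1 ** 2)      # tanh'(z) = 1 - tanh(z)^2
    d_w1 = x.T @ d_z1                  # dL/dW1
    d_b1 = d_z1.sum(axis=0)
    return [d_w1, d_b1, d_w2, d_b2]


def sgd_step(params, grads, lr):
    return [p - lr * g for p, g in zip(params, grads)]
```

A quick way to catch derivation mistakes is to compare one entry of the analytic gradient against a finite-difference estimate of the loss.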

Implement efficient inference for a large model.

Given a large model, you are required to implement an efficient inference system. You need to consider issues such as memory usage, computation speed, and batch processing.

This question assesses your understanding of model deployment and optimization. You need to know how to perform model quantization, pruning, and knowledge distillation.

Sample Answer: For a large model, the key to efficient inference lies in optimizing for memory and speed. My approach would focus on several techniques. First, I would use quantization to reduce the model's memory footprint and speed up computation by representing weights with fewer bits, for example by moving from 32-bit floating point to 8-bit integers. Second, I would use pruning to remove weights or neurons that contribute little to the model's performance, which further reduces its size and complexity. Third, I would implement batching, grouping multiple inference requests together to take advantage of parallel processing on a GPU. Additionally, I would consider leveraging specialized hardware and optimized libraries to further accelerate the computations.
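To show what the quantization and batching points mean in practice, here is a small NumPy sketch. It uses symmetric per-tensor int8 quantization, which is only one of several schemes, and the helper names are my own. Production systems typically quantize per channel and rely on optimized kernels rather than dequantizing back to float.

```python
import numpy as np


def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor quantization: w is approximated by scale * q."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * scale


def batched(items, batch_size):
    """Yield groups of up to batch_size requests that can share one forward pass."""
    for i in range(0, len(items), batch_size):
        yield items[i:i + batch_size]
```

The int8 tensor takes a quarter of the memory of the float32 original, and the rounding error per weight is bounded by half the scale, which is the accuracy versus footprint trade-off to discuss.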

System Design

System design interviews evaluate how you architect scalable, secure, and production-ready machine learning systems. Whether you're asked to build a real-time feature store or propose a red-teaming pipeline, these problems often resemble OpenAI ML debugging interview contexts, especially around deployment, reliability, and safety.

Design a CI/CD job scheduler

Design a CI/CD job scheduler that utilizes technologies such as Kubernetes and Docker. Discuss how you would approach the design and the key components involved.

Sample Answer: My design would be built around a decoupled architecture. The core components would be a Job Queue, a Scheduler, and a Worker Pool. When a user (or a Git webhook) triggers a job, a request is placed in the Job Queue. The Scheduler constantly monitors the queue and, based on job priority and resource availability, dispatches tasks to the Worker Pool. The Worker Pool would consist of Docker containers running on a Kubernetes cluster to ensure each job is isolated and scalable. I would use a database to store job state, logs, and artifacts. Because the queue decouples submission from execution and job state lives in the database, workers can be added or replaced without losing jobs, which is what makes the system scalable and fault-tolerant.

Design a KVStore Class

Design a KVStore class with the ability to set and get values. The class should also be able to serialize and deserialize its state to and from the file system. The keys and values are strings that may contain any character. Focus on the serialization and deserialization aspect of the class.

Sample Answer: To design a KVStore class with serialization capabilities, I'd use a dictionary to store the key-value pairs in memory. The set and get methods would simply interact with this dictionary. For serialization and deserialization, I would implement two dedicated methods: save_to_file and load_from_file. The save_to_file method would convert the in-memory dictionary into a JSON string and write it to the file system, handling potential I/O errors. The load_from_file method would read the JSON string from the file, parse it back into a dictionary, and populate the class's state. Using JSON would ensure that the data is easily readable and interoperable.
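A minimal sketch of that design follows. The method names `save_to_file` and `load_from_file` come from the answer above; the temp-file rename and the type check on load are extra details I added. JSON is a reasonable fit for the "any character" requirement because the encoder escapes quotes, newlines, control characters, and non-ASCII text for you.

```python
import json
import os
from typing import Dict, Optional


class KVStore:
    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def save_to_file(self, path: str) -> None:
        # Write to a temp file first so a crash cannot leave a truncated store.
        tmp = path + ".tmp"
        with open(tmp, "w", encoding="utf-8") as f:
            json.dump(self._data, f)  # escapes special characters in keys and values
        os.replace(tmp, path)

    def load_from_file(self, path: str) -> None:
        with open(path, encoding="utf-8") as f:
            data = json.load(f)
        # Reject files that are valid JSON but not a string-to-string mapping.
        is_str_map = isinstance(data, dict) and all(
            isinstance(k, str) and isinstance(v, str) for k, v in data.items()
        )
        if not is_str_map:
            raise ValueError("file does not contain a string-to-string mapping")
        self._data = data
```

If the interviewer rules out JSON, the usual fallback is a length-prefixed format, where each key and value is written as its length followed by its raw contents, so no delimiter can collide with the data.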

Design a Multi-Tenant CI/CD Workflow System

Design a system for a multi-tenant CI/CD workflow triggered by a git push. The system should analyze the git push to obtain the GitHub repository ID using an internal API service. The workflow configuration is stored in the GitHub repository. After the workflow is created, it should schedule a sequence of jobs defined in the config file. Additionally, design a front-end UI to display the progress of the workflow after it has been created.

Sample Answer: I'd design this system with a clear separation of concerns to handle multiple tenants. The architecture would include a Webhook Listener as the entry point, an API Service for internal communication, a Workflow Engine to manage jobs, and a Job Scheduler for execution. The Webhook Listener would receive Git push events and validate the tenant. The API service would be responsible for fetching the workflow configuration from the GitHub repository, ensuring each tenant can only access their own files. The Workflow Engine would then parse the configuration and instruct the Job Scheduler to run the sequence of jobs. To manage state and tenant separation, a database would be used to store workflow configurations and execution progress. A front-end UI would query the database to display the workflow's status to the user.

Behavioral Questions

Behavioral questions focus on how you think, collaborate, and uphold OpenAI's values. You'll be asked how you navigate ambiguity, communicate complex ideas, and contribute to responsible AI. These questions are designed to assess your alignment with OpenAI's mission and your ability to thrive in its fast-paced, impact-driven culture.

How would you convey insights and the methods you use to a non-technical audience?

Sample Answer: At my previous company, my team built a machine learning model to predict customer churn. My task was to present the model's findings to the marketing and sales teams, who had no background in data science. Instead of explaining the model’s architecture, I focused on the business impact. I created a simple dashboard that visually showed the top five factors contributing to churn and gave a clear, actionable list of the customers at highest risk. As a result, the marketing team was able to launch a targeted retention campaign, which reduced churn in that segment by 15% over the next quarter.

Describe an analytics experiment that you designed. How were you able to measure success?

Sample Answer: On my last project, we were developing a new recommendation feature for our e-commerce platform. I designed an A/B test to see if the new algorithm would increase click-through rates. I defined my hypothesis and then collaborated with the product team to establish a clear success metric: the click-through rate of the recommendation carousel within the first 24 hours of a user's session. We split our user base into two groups, with one receiving the old recommendations and the other receiving the new ones. After running the test for two weeks, I performed a statistical analysis which showed a statistically significant increase in clicks for the new algorithm. This result gave us the confidence to launch the feature to all users.
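If the interviewer asks what "statistical analysis" means here, one standard option for comparing click-through rates is a two-proportion z-test. The sketch below uses only the standard library; the function name is mine, and the counts in the usage line are invented purely to show the call, not results from the project described above.

```python
import math


def two_proportion_z_test(clicks_a: int, n_a: int, clicks_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in click-through rate."""
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    # Pooled rate under the null hypothesis that both groups share one CTR.
    p_pool = (clicks_a + clicks_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of a standard normal: P(|Z| > |z|).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: control group A versus new-algorithm group B.
z, p_value = two_proportion_z_test(1000, 10000, 1100, 10000)
significant = p_value < 0.05
```

It also helps to mention fixing the sample size and significance threshold before the test starts, so that the two-week result is not the product of peeking.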

OpenAI Interview Answering Strategies and Tips

Key Assessment Points

When I went through the OpenAI interview process, I noticed that the interviewers cared about more than just the right answer. They wanted to see how I thought about problems and how I explained my ideas.

I always tried to break down my answers like this:

State the problem clearly.

Explain my approach and why I chose it.

Describe how I executed my solution.

Share the result and what I learned.

This structure helped me stay organized and made my answers easy to follow.

Interview Preparation Platforms

LeetCode

LeetCode was my go-to for coding practice. I solved problems every day, focusing on medium and hard levels. But I learned not to spend all my time there. Some candidates make the mistake of only doing LeetCode and forget to practice system design or behavioral questions.

Note: Balance your prep. Practice coding, but also review system design, AI basics, and behavioral stories.

FAQ

What coding language did you use during your OpenAI interview?

I used Python for all my coding rounds. The interviewers said I could choose any language, but Python felt easiest for me. I recommend picking the language you know best.

How did you prepare for system design questions?

I practiced by sketching diagrams and breaking big problems into smaller parts. I reviewed real-world systems like chat apps and AI model deployments. I also asked friends to give me mock design prompts.

What was the hardest question you faced?

The toughest one asked me to design a scalable chatbot API for millions of users. I had to think fast and explain my choices. I drew diagrams and talked about handling latency and failures.
