My Real Prompt Engineering Interview Process and Top 25 Questions and Answers

I still remember the nerves I felt before my big interview in 2025. The demand for prompt engineering had exploded—companies like OpenAI and Google were hiring, and salaries started at $80,000. I used real Prompt engineering Interview questions and practiced nonstop. Each question made me more confident. Interviews felt tough because they tested both my skills and my ability to think on the spot.

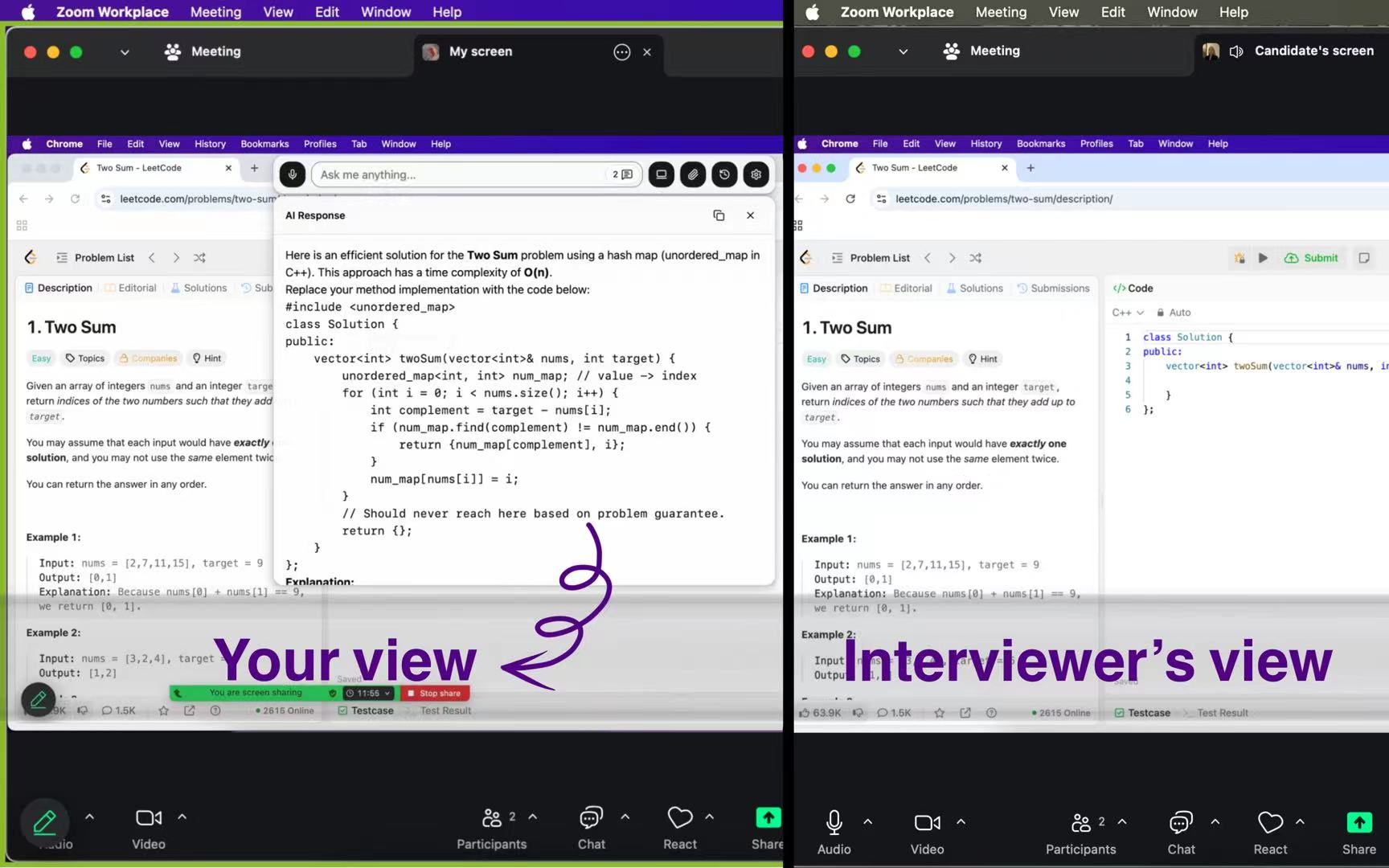

I’m really grateful to Linkjob.ai for helping me pass my interview, which is why I’m sharing my interview questions and experience here. Having an undetectable AI interview assistant indeed provides a significant edge.

Introduction

Why Prompt Engineering Is in High Demand in 2025

Prompt engineering has become one of the hottest skills in tech. I see companies everywhere looking for people who can shape how AI responds. Here’s why the demand is so high:

Almost every customer interaction now involves AI, so good prompts are key for accuracy and brand voice.

According to a report on Prompt Engineering job trends in 2025, job postings for prompt engineers have jumped by over 400% since 2023.

Certified prompt engineers earn about 27% more than similar roles.

Many businesses and universities now offer prompt engineering training.

The field has grown into areas like no-code tools and multimodal prompts (text, images, audio).

Industries like tech, healthcare, finance, education, and eCommerce all need prompt engineers.

Specialized frameworks help meet strict rules in finance, healthcare, and legal fields.

Teams using structured prompt engineering see higher customer satisfaction.

My 2025 Prompt Engineering Interview Process

My interview journey felt like a marathon. I started with an application and a portfolio. Next, I took an online quiz. Then, I had a technical interview where I answered prompt engineering Interview questions and solved real-world problems. I completed a small project to show my skills. Finally, I joined a behavioral interview to see if I fit the team. Each step tested my ability to design prompts, explain my choices, and work under pressure.

What Interviewers Are Looking For in Prompt Engineers

Interviewers want more than just technical skills. They look for:

Programming skills, especially in Python

Knowledge of AI and NLP

Data analysis abilities

Creative thinking and problem-solving

Strong communication and teamwork

Adaptability and willingness to learn

They also want to see how I improve prompts, analyze AI responses, and explain my process. Real examples and clear thinking matter most.

How This Guide Will Help You Prepare

This guide gives you the exact Prompt engineering Interview questions and sample answers I used. You’ll find practical tips and real examples. To practice, I used Linkjob. It offers AI-powered mock interviews that adapt to your answers and give instant feedback. During real interviews, Linkjob’s Real-Time AI Assistant listens, transcribes questions, and suggests smart answers. This helped me stay calm and sharp, even when questions got tough.

Top 25 Prompt Engineering Interview Questions and Answers

Basics of Prompt Engineering

What is prompt engineering, and why is it important?

Prompt engineering is the process of designing and refining the instructions given to large language models (LLMs) to get accurate and useful outputs. I see it as the bridge between human intent and AI action. It matters because well-crafted prompts help AI understand tasks, reduce errors, and deliver results that match business needs. In my experience, prompt engineering boosts efficiency, improves user satisfaction, and ensures AI systems act safely and ethically.

How do LLMs interpret prompts?

LLMs interpret prompts by breaking down the input text into tokens and using their training to predict the next word or phrase. They rely on context, patterns, and probability to generate responses. I always remember that LLMs do not "understand" like humans. They follow the structure and clues in the prompt, so clear instructions and context are key for good results. As prompt engineering shapes the AI revolution, keeping this in mind is crucial.

What are zero-shot, one-shot, and few-shot prompting?

Zero-shot prompting means I ask the model to do a task without giving examples. One-shot prompting gives one example, and few-shot prompting provides a few examples to guide the model. I use zero-shot for simple tasks, one-shot when I want to show a format, and few-shot for complex tasks that need more context. These techniques help me control the model’s output and improve accuracy.

Prompt Design Techniques

How do you write an effective prompt?

I start by defining the task clearly. I break it into steps if needed. I use precise language and avoid ambiguity. I add examples or formatting instructions when necessary. I test the prompt, review the output, and refine it until I get consistent results. I also keep prompts short to stay within token limits and make sure the model focuses on what matters.

What is prompt chaining, and when should you use it?

Prompt chaining links several prompts together, where the output of one becomes the input for the next. I use it for complex tasks that need multiple steps, like summarizing a document and then generating questions about it. This method helps me manage large tasks, keep outputs organized, and improve overall accuracy.

How do you balance specificity and creativity in prompts?

I balance specificity and creativity by giving clear instructions but leaving room for the model to generate unique ideas. For example, I might say, "Write a creative story about teamwork in a tech company, using a friendly tone." This tells the model what I want but lets it be creative. I adjust the level of detail based on the task and test different versions to find the best balance.

Use Cases and Real-World Applications

Describe a project where prompt engineering played a key role.

I worked on a customer support chatbot for a fintech company. I used prompt engineering to design role-based prompts, structured formatting, and retrieval-augmented generation. This improved the chatbot’s accuracy and reduced response times. The project showed me how prompt engineering can boost customer satisfaction and make AI tools more reliable.

How would you optimize prompts for customer support bots?

I optimize prompts by using clear instructions, role-based context, and structured output formats. I include fallback responses for uncertain questions. I test prompts with real customer queries and refine them based on feedback. I also use retrieval-augmented generation to ground answers in up-to-date company knowledge, which helps reduce hallucinations.

What’s your approach to prompt tuning in production settings?

In production, I use a prompt stack: design, test, deploy, and monitor. I log outputs, collect user feedback, and analyze performance metrics. I iterate on prompts to fix issues and improve results. I also use version control and automated testing to keep track of changes and ensure reliability.

Evaluation and Optimization

How do you evaluate the effectiveness of a prompt?

I evaluate prompts by checking relevance, accuracy, consistency, efficiency, and readability. I use both manual review and automated metrics like BLEU or ROUGE. I also gather user feedback and compare outputs to benchmarks. If a prompt fails, I analyze the errors and adjust the design.

What metrics do you use for prompt performance?

I track perplexity, cross-entropy, token usage, resource utilization, and error rates. I also look at user satisfaction and response quality. No single metric tells the whole story, so I combine several to get a full picture of prompt performance.

How do you iterate on a poorly performing prompt?

I start by reviewing the outputs and identifying where the prompt fails. I adjust the instructions, add or remove examples, and test new versions. I use A/B testing to compare changes. I keep refining until the prompt meets my quality standards.

Troubleshooting and Limitations

What are common challenges in prompt engineering?

I often face unpredictability, scalability issues, bias, security concerns, and token limits. Small changes in prompts can cause big differences in outputs. It’s hard to keep results consistent and fair. I address these by testing thoroughly, using feedback loops, and applying best practices for safety and reproducibility.

How do you prevent hallucinations in LLM outputs?

I prevent hallucinations by designing structured outputs, enforcing strict rules, and grounding responses in reliable data. I use retrieval-augmented generation and the ICE method (Instructions, Constraints, Escalation). I also set up system-level defenses like content filters and regular evaluation with human review.

What’s your strategy for handling ambiguous prompts?

When I get an ambiguous prompt, I break it down into smaller tasks and add context. I clarify the goal and set constraints. I test different versions and ask for feedback. This helps me guide the model to produce clear and relevant answers.

Advanced Prompt Engineering

What is prompt injection, and how do you mitigate it?

Prompt injection happens when someone adds malicious instructions to a prompt, causing the model to act in unintended ways. I mitigate this by using prompt layering, segmentation, and strict privilege controls. I monitor inputs and outputs, use anomaly detection, and add human-in-the-loop checks for sensitive tasks.

How do you design prompts for multi-turn conversations?

For multi-turn conversations, I keep track of context and user history. I use memory modules or frameworks like LangChain to manage state. I design prompts that reference previous turns and clarify the user’s intent. This helps the model stay on topic and provide coherent responses.

What’s your experience with auto-evaluation using AI?

I use AI tools to automatically evaluate prompt outputs. These tools check for relevance, accuracy, and consistency. They help me scale testing and catch issues faster. I combine auto-evaluation with manual review to ensure high quality.

Tools and Frameworks

What tools do you use for prompt testing and iteration?

I use tools like LangChain, PromptLayer, and Agenta for prompt testing and version control. These platforms let me compare outputs across models, track changes, and collaborate with my team. They also support automated testing and analytics.

How do you integrate prompt design into ML pipelines?

I integrate prompt design by using prompts for data collection, cleaning, processing, and analysis. I automate prompt generation and refinement with machine learning algorithms. I set up feedback loops to keep prompts effective as data and user needs change.

What are the best practices for version control in prompt work?

I version everything that affects prompt output, including models and parameters. I use semantic versioning and document all changes. I store prompts in centralized repositories and use tools like LangChain or Latitude for collaboration and rollback. Regular reviews help me keep prompts consistent and reliable.

Behavioral & Scenario-Based Questions

Tell me about a time you failed at prompt design.

Once, I designed a prompt that worked well in testing but failed with real users. The model gave inconsistent answers because I hadn’t included enough examples. I learned to always test with real data and iterate based on feedback. Now, I involve users early and refine prompts until they work in production.

How do you explain prompt engineering to non-technical stakeholders?

I use simple language and relatable metaphors. I compare prompt engineering to giving clear instructions to a new employee. I share stories and focus on business value, like faster customer support or better product recommendations. I listen to their questions and adjust my explanation to match their background.

How do you stay current with LLM and prompting trends?

I read research papers, join online communities, and follow industry leaders. I experiment with new tools and techniques. I also attend webinars and workshops. Staying curious and connected helps me keep my skills sharp and my prompt engineering Interview questions up to date.

Why do you want to work in prompt engineering?

I love solving problems and working with cutting-edge AI. Prompt engineering lets me combine creativity, logic, and technology to make a real impact. I enjoy helping teams build smarter products and improve user experiences. The field is growing fast, and I want to be part of its future.

Interview Tips for Prompt Engineering Roles

Focus on Clarity and Precision in Your Answers

When I answer prompt engineering Interview questions, I always focus on being clear and precise. I break down tough tasks into simple steps. I use short, specific language so there’s no confusion. If a question seems complex, I ask for clarification or give an example to show what I mean. I also define any tricky terms. This helps the interviewer see that I can guide AI models with accuracy. I keep my answers short but detailed, and I review my responses to make sure they hit the mark.

Tip: I like to review my answers with a friend or peer. A fresh set of eyes often catches things I miss and helps me improve my clarity.

Show a Structured Approach to Prompt Design

I always use a framework when I design prompts. My favorite is the ICE method: Instruction, Context, and Examples. For example, I start by stating the task, then I add background details, and finally, I give sample inputs and outputs. Sometimes, I use the SPEAR or CRAFT frameworks if the task needs more structure. Using these methods shows the interviewer that I have a plan and can handle complex prompt design in a systematic way.

Framework | Core Components | Best For | Key Advantage |

|---|---|---|---|

ICE | Instruction, Context, Examples | Detailed prompt development | Simplifies complex tasks |

SPEAR | Start, Provide, Explain, Ask, Rinse & Repeat | Beginners & quick implementation | Easy-to-follow process |

Understand and Address Common Interview Challenges

I know that prompt engineering interviews can be tricky. Sometimes, prompts are vague or too broad. Other times, I have to manage a lot of information within token limits. To handle this, I break big problems into smaller parts. I use feedback from the interviewer to improve my answers. If I notice the model gives inconsistent results, I adjust my approach and try again. I also use templates and tools to keep my work organized and consistent.

I always make my prompts clear and detailed.

I test and refine my answers based on feedback.

I use collaboration tools to manage versions and track changes.

Leverage Smart Tools for Real-Time Interview Success

Practicing with AI-powered tools has changed how I prepare for interviews. Linkjob lets me do mock interviews that feel real. It listens to my answers and asks follow-up questions, just like a real interviewer. During live interviews, Linkjob’s Real-Time AI Assistant listens in, transcribes questions, and gives me smart answer suggestions based on my resume and the job description. This helps me stay calm and sharp, even if I get a tough or unexpected question. I’ve used Linkjob for both tech and finance interviews, and it always gives me the edge I need.

Conclude with the Right Mindset and Setup

I believe that the right mindset makes all the difference. I stay adaptable and keep learning about new AI trends. I focus on what users need and use data to improve my prompts. I work with my team and always check for fairness and clarity. Before any interview, I set up a quiet space, review common Prompt engineering Interview questions, and practice with Linkjob. This combination of preparation and real-time support helps me walk into every interview with confidence.

Getting ready for prompt engineering interviews takes more than just reading questions. I practice with real examples and the best and most powerful AI platforms for interview preparation and learn to adapt fast during interviews. This helps me stay calm and answer with confidence. I always remind myself that deep practice and smart tools make a big difference.

FAQ

How do I prepare for a prompt engineering interview?

I start by reviewing common prompt engineering questions. I practice answering out loud. I use real examples from my projects. I also run mock interviews with friends or AI tools. This helps me get comfortable and spot areas where I need to improve.

What should I do if I get a question I don’t know?

I stay calm and take a moment to think. I break the question into smaller parts. I share my thought process with the interviewer. If I don’t know the answer, I admit it and explain how I would find a solution. This shows honesty and problem-solving skills.

How can I show my prompt engineering skills without much work experience?

I build a portfolio with personal projects. I write blog posts or share prompt design examples online. I join open-source projects or competitions. I talk about my learning process and how I solve problems. This helps interviewers see my passion and growth.

What tools can help me practice and perform better in interviews?

I use Linkjob for both practice and real interviews. It gives me AI-powered mock interviews that adapt to my answers and provide instant feedback. During live interviews, Linkjob listens, transcribes questions, and suggests smart answers based on my resume and the job description. This real-time support keeps me sharp and confident.