My Top 20 PyTorch Interview Questions and Answers List for 2026

PyTorch keeps rising as the must-have skill for machine learning engineers, AI engineers, and data scientists. I see more companies shifting from old-school programming to AI-first tools like PyTorch, with job demand growing fast.

In this article, I have compiled the top 20 PyTorch interview questions from my past interview experiences, along with their answers.

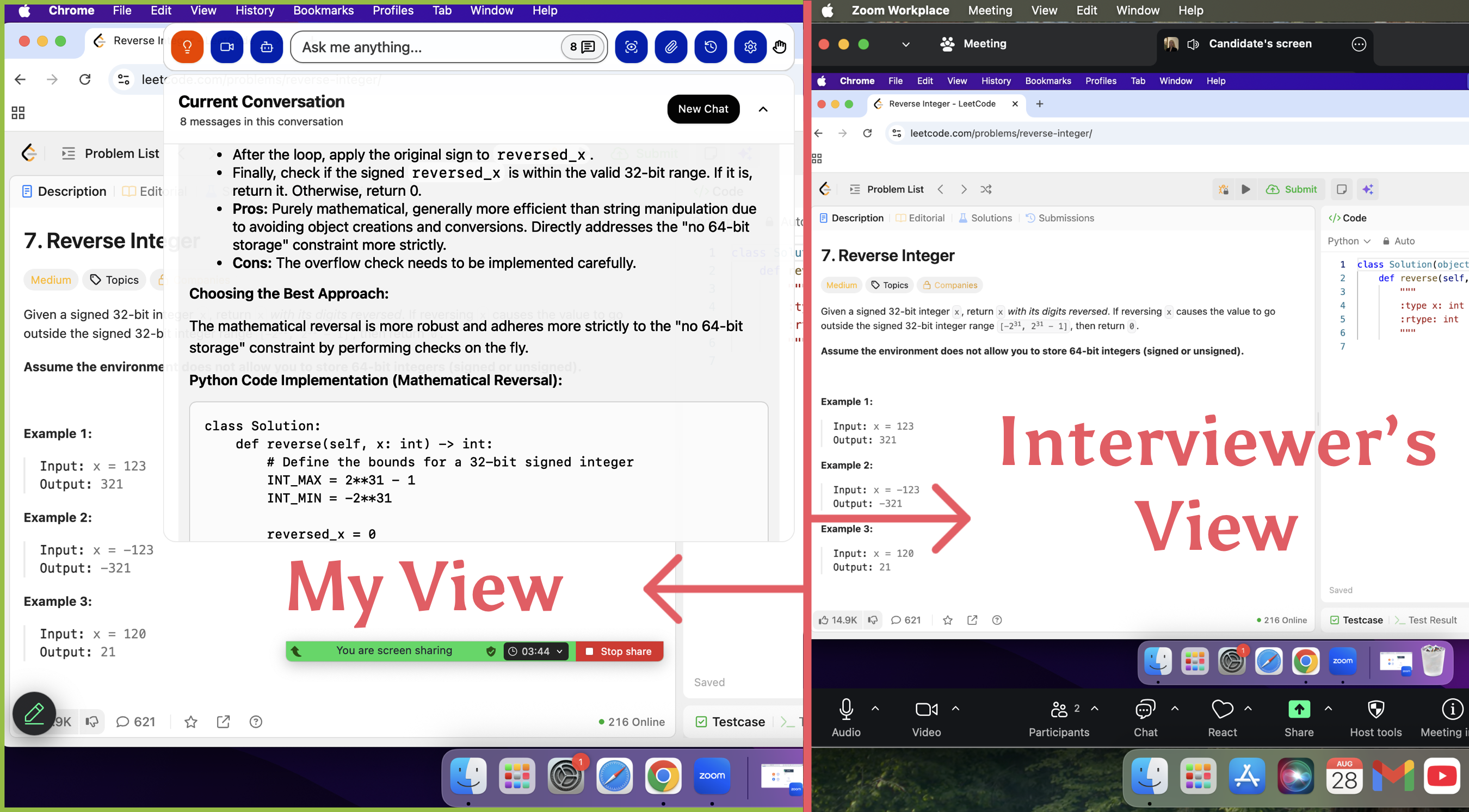

I am really grateful for the tool Linkjob.ai, and that's also why I'm sharing my interview experience and questions here. Having an undetectable AI interview assistant during the interview is indeed very convenient.

PyTorch Interview Questions

PyTorch Basics

When I prepare for PyTorch interview questions, I always start with the basics. Interviewers love to ask about the core ideas behind PyTorch. They want to know if you understand supervised and unsupervised learning, overfitting, underfitting, and feature engineering. These concepts show up in many interview questions. I also see questions about deep learning architectures like CNNs, RNNs, and Transformers.

Tensors in PyTorch

Tensors are the heart of PyTorch. Every PyTorch interview questions list includes questions about tensor types. I get questions like, “What is a tensor?” or “How do you manipulate tensors in torch?” I explain that a tensor is an n-dimensional array. Scalars are 0D tensors, vectors are 1D, matrices are 2D, and 3D tensors handle color images. Interviewers often ask about creating tensors, changing their shape, or using requires_grad for automatic gradient computation. I always practice these PyTorch interview questions with hands-on code.

import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)PyTorch vs TensorFlow

I often get PyTorch interview questions comparing PyTorch and TensorFlow. Here’s a quick table I use to answer these interview questions:

Aspect | TensorFlow | PyTorch |

|---|---|---|

Computation Graph | Static | Dynamic |

Ease of Use | Structured | Intuitive |

Deployment | Mature | Growing |

Community | Large | Research-focused |

I tell interviewers that PyTorch is great for research and prototyping because of its dynamic graph and pythonic API.

Autograd and Gradients

Autograd is a big topic in PyTorch interview questions. I get questions about how torch tracks operations and computes gradients. I explain that PyTorch builds a dynamic graph as you run code. When you call backward, PyTorch computes gradients for the tensor with requires_grad set to True. I also mention autograd.grad and autograd.backward for flexible gradient computation. These answers show I understand gradient computation for the tensor and how PyTorch supports creating neural networks.

Neural Network Modules

Finally, pytorch interview questions often focus on torch.nn modules. I get questions about building models with torch.nn.Module, using layers, and connecting them. I always practice creating neural networks in PyTorch and explaining my answers step by step. This helps me feel ready for any PyTorch interview questions that come my way.

Practical PyTorch Coding Questions

Implementing Layers

When I walk into a PyTorch interview, I always expect hands-on coding questions. Interviewers often ask me to build a simple feedforward neural network or a convolutional neural network (CNN) using PyTorch. Sometimes, they want to see if I can add dropout layers to prevent overfitting. I have also faced questions about creating generative adversarial networks (GANs) or writing a basic linear regression model with gradient descent. These questions help interviewers see if I understand PyTorch layers, model structure, and how to use torch modules in real code.

Write a feedforward neural network using torch.nn.Module.

Build a CNN for image classification with convolution and pooling layers.

Add dropout layers for regularization.

Create a GAN with generator and discriminator components.

Implement linear regression using PyTorch and gradient descent.

Custom Models

I often get pytorch questions about designing custom models, especially for tasks like object detection. I focus on modular code and clear logic. For example, I set up anchor generation by creating bounding boxes of different sizes and shapes. I adjust anchor parameters to match the dataset. I use Non-Maximum Suppression (NMS) to filter overlapping boxes and keep the best predictions. These steps show I can adapt PyTorch models to new datasets and tasks.

Set up anchor generation for object detection.

Customize anchor parameters for the dataset.

Use NMS to filter predictions.

Write modular PyTorch code for each step.

GPU Acceleration

Interviewers love to see if I know how to use gpu computations in pytorch. First, I check if a GPU is available with torch.cuda.is_available(). I set the device using torch.device("cuda" if torch.cuda.is_available() else "cpu"). I always move a tensor to gpu with .to(device). I batch data to save memory and use torch.cuda.empty_cache() to clear unused memory. I monitor GPU memory with torch.cuda.max_memory_allocated(). These steps help me train models faster and handle bigger datasets.

Tip: Always move both your model and data to the same device before training in PyTorch.

Saving and Loading Models

Saving and loading PyTorch models is a must-have skill. I save the model’s state_dict with torch.save() and load it with torch.load(). For large models, I use mmap=True in torch.load() to save RAM. I sometimes load weights one by one to avoid memory spikes. Before inference, I call model.eval() and use torch.no_grad() to save memory. If I need to optimize for CPUs, I try quantization or torch.jit for faster inference. These methods make my PyTorch code reliable and efficient.

Debugging

Debugging PyTorch code is a common interview topic. I use torch.autograd.detect_anomaly() to find gradient problems. I register forward hooks to check activations and output shapes. I visualize the computation graph with torchviz. I profile my code with the PyTorch Profiler to spot slow parts. I set random seeds for reproducibility. For distributed training, I enable detailed logging. I also plot loss curves with Matplotlib to see how training goes. If I see vanishing gradients, I switch activation functions or change weight initialization.

Deep Learning Interview Questions

Model Training

When I get deep learning interview questions about model training in pytorch, I always walk through the steps clearly. First, I define the loss function that fits the task. For example, I use CrossEntropyLoss for classification. Then, I set up the optimizer, like Adam or SGD. I enter the training loop, where I zero the gradients, run the forward pass, compute the loss, and backpropagate. After that, I update the weights. Sometimes, I use mixed precision training with torch.cuda.amp to save memory and speed things up. If I work with multiple GPUs, I use DistributedDataParallel. I also try memory tricks like gradient checkpointing. I always finish by validating the model and plotting the results. These steps come up in almost every pytorch interview.

Data Handling

Interviewers love to ask questions about data handling in PyTorch. I always mention how I create custom Dataset classes and use DataLoader to load data in batches. I set batch_size, shuffle, and num_workers to get the best performance. I make sure to handle device placement, switching between model.train() and model.eval() modes. I include validation steps in my training loop to track progress. I also adjust DataLoader settings for training or evaluation, depending on my hardware. These practices show I know how to use PyTorch as a deep learning framework.

Optimization

Optimization questions pop up a lot in deep learning interview questions. I talk about choosing the right optimizer, like Adam or AdamW, and tuning learning rates. I use learning rate schedulers to adjust the rate during training. Sometimes, I freeze layers when I fine-tune pre-trained models. I also use gradient accumulation and mixed precision to make training more efficient. These PyTorch tricks help me get better results and impress interviewers.

Overfitting Solutions

Overfitting is a classic topic in PyTorch interviews. I always mention using dropout layers to prevent neurons from relying on each other. I add L1 or L2 regularization to keep the model simple. I use data augmentation, like flipping or rotating images, to make the model generalize better. Early stopping is another favorite—I watch the validation loss and stop training if it stops improving. Cross-validation helps me check if the model works well on different data splits. These solutions show I understand how to handle overfitting in PyTorch.

Model Evaluation

When interviewers ask about model evaluation, I list the main metrics I use in PyTorch. For classification, I check accuracy, precision, recall, F1 score, and AUC. For regression, I use mean squared error, mean absolute error, and R2 score. I sometimes use TorchMetrics to make metric calculation easier and faster, especially on GPUs. For GANs, I mention FID and Inception Score. I also use confusion matrices and plot results to see how the model performs. These answers help me stand out in any pytorch interview.

Interview Tips and Pain Points

Preparation Strategies

When I get ready for a PyTorch interview, I always start with a plan. I review the basics, like tensors, autograd, and neural network modules. I write code by hand to answer common questions. I also practice explaining my thought process out loud. This helps me stay calm when I face tough interview questions. I break down big topics into smaller parts. For example, I focus on data handling one day and optimization the next. I use flashcards for PyTorch terms and quiz myself with real interview questions. I also read recent Pytorch updates, so I can answer questions about new features. This step-by-step approach helps me build confidence for my interview.

Real-Time Support

During a live interview, I know nerves can hit hard. Sometimes, I get stuck on a question or forget a key detail. That’s when real-time support makes a difference. I use Linkjob’s Real-Time AI Interview Assistant. It listens to the interview, recognizes questions, and gives me smart answer suggestions. If I face a tricky PyTorch coding problem or a deep learning case, Linkjob helps me stay focused. It transcribes the interview and highlights important Pytorch terms. This support lets me recover quickly and answer questions with clarity. I feel more relaxed knowing I have a tool that understands PyTorch and the interview process.

Mock Interviews

I always include mock interviews in my PyTorch interview prep. Practicing with friends or using AI-powered platforms like Linkjob helps me get used to real PyTorch interview questions. I learn to explain my PyTorch solutions step by step. This practice improves both my technical skills and my ability to communicate clearly. Linkjob’s mock interview feature adapts pytorch questions to my resume and the job description. It gives instant feedback and follow-up pytorch questions, just like a real interviewer. This makes me ready for any pytorch interview challenge.

PyTorch Interview Questions and Answers

Common PyTorch Interview Questions

What is the purpose of the nn.Module class in PyTorch, and how do you use it to create neural networks?

The nn.Module class is the base for all neural network modules in pytorch. I use it to organize layers, parameters, and the forward pass. I create a custom class that inherits from nn.Module and define the layers in the__init__method. The forward method describes how tensors move through the network.import torch.nn as nn class MyModel(nn.Module): def __init__(self): super(MyModel, self).__init__() self.fc = nn.Linear(10, 5) def forward(self, x): return self.fc(x)Can you explain the difference between a DataLoader and a Dataset in PyTorch?

A Dataset defines how to access individual data samples. I use it to load images, text, or other data. The DataLoader wraps the Dataset and handles batching, shuffling, and parallel loading. This makes PyTorch training faster and more efficient.How do you implement custom loss functions in PyTorch? Can you provide a brief example?

I can write a custom loss function by subclassing nn.Module or by defining a simple function. For example, to create a mean absolute error loss:import torch def custom_mae_loss(output, target): return torch.mean(torch.abs(output - target))What techniques do you use to prevent overfitting when training models in PyTorch?

I use dropout layers, early stopping, data augmentation, and regularization. These methods help my model generalize better and avoid memorizing the training data.How do you save and load models in PyTorch? What are the best practices?

I save the model’s state_dict usingtorch.save(model.state_dict(), 'model.pth'). To load, I usemodel.load_state_dict(torch.load('model.pth')). I always save only the state_dict, not the full model, to keep things flexible.What are the main advantages of using GPU acceleration in PyTorch, and how do you enable it?

GPU acceleration speeds up tensor computations. I move my model and data to the GPU using.to('cuda')or.cuda(). This makes pytorch training much faster, especially with large datasets.Can you describe how to perform hyperparameter tuning in a PyTorch model?

I tune hyperparameters like learning rate, batch size, and optimizer settings. I use grid search or libraries like Optuna to automate this process. Good tuning can make a big difference in model performance.What steps do you take to debug a PyTorch model that is not converging?

I check data preprocessing, review the model architecture, monitor gradients, and simplify the model. Sometimes, I use torch.autograd tools to find issues with the computation graph.How do you visualize training progress in PyTorch?

I use TensorBoard, Matplotlib, or other logging tools to plot loss and accuracy over epochs. This helps me spot problems early and adjust my training process.

Advanced PyTorch Interview Questions

Explain key differences between PyTorch and TensorFlow, including attention mechanisms such as self-attention and cross-attention, and their applications in transformer networks.

PyTorch uses a dynamic computation graph, which makes debugging and prototyping easier. TensorFlow uses a static graph, which can be better for deployment. Attention mechanisms like self-attention let models focus on different parts of the input. Cross-attention helps models connect information from different sources. Transformers use these mechanisms for tasks like language modeling and image processing.Describe how to build Generative Adversarial Networks (GANs) in PyTorch, including the roles of generator and discriminator and adversarial training.

I build two models: a generator that creates fake data and a discriminator that tries to tell real from fake. During training, the generator learns to fool the discriminator, and the discriminator learns to spot fakes. This adversarial process helps both models improve.Discuss common challenges in deploying PyTorch models in production, such as model size, resource management, deployment platforms, monitoring, and security.

I often face issues with large model sizes and limited resources. I use quantization and pruning to shrink models. I choose deployment platforms like TorchServe or ONNX. I monitor models for drift and set up security to protect data and code.Explain optimization techniques for PyTorch models, including parameter pruning, quantization, GPU acceleration, batching, and hardware choices.

I prune unnecessary parameters, quantize weights to reduce memory, and use GPU acceleration for faster training. I batch data to make the most of hardware. Choosing the right hardware can also boost performance.Describe data parallelism strategies in PyTorch: data parallelism, model parallelism, distributed data parallelism, and pipeline parallelism.

Data parallelism splits data across multiple GPUs. Model parallelism splits the model itself. Distributed data parallelism lets me train on many machines. Pipeline parallelism breaks the model into stages for efficient processing.Explain gradient clipping and its importance in stabilizing training, especially for RNNs.

Gradient clipping limits the size of gradients during backpropagation. This prevents exploding gradients, which can destabilize training, especially in RNNs.Discuss implementing reinforcement learning algorithms using PyTorch, including environment setup, agent design, and training.

I set up the environment, design an agent with a policy or value network, and train using rewards. PyTorch makes it easy to build and update these networks with tensors.Describe early stopping to prevent overfitting by monitoring validation performance.

I watch the validation loss during training. If it stops improving, I stop training early to avoid overfitting.Explain learning rate decay methods and their benefits for model convergence.

I reduce the learning rate over time using schedulers. This helps the model converge smoothly and avoid overshooting minima.Discuss handling imbalanced datasets using data augmentation, cost-sensitive learning, class weights, ensemble methods, and oversampling techniques like SMOTE.

I use data augmentation to create more samples, adjust class weights in the loss function, or use oversampling methods like SMOTE. Ensemble methods can also help balance predictions.

FAQ

How do I get started with PyTorch if I am new to deep learning?

I always tell beginners to start with the official PyTorch tutorials. These guides walk you through basic concepts and show you how to build simple models. I found hands-on practice helps me learn faster.

Can I use PyTorch for both research and production?

Yes, I use PyTorch for research because it is flexible and easy to debug. When I want to move a model to production, I use tools like TorchScript or ONNX to make deployment smoother.

What should I do if my PyTorch model trains slowly?

I check if my code uses the GPU. I also try smaller batch sizes or simpler models. Sometimes, I use mixed precision training to speed things up. Profiling tools help me spot slow parts in my code.

Is it possible to use PyTorch with other Python libraries?

Absolutely! I often combine PyTorch with libraries like NumPy, Pandas, and Scikit-Learn. This lets me handle data, preprocess inputs, and evaluate models all in one workflow.

See Also

How I Used AI to Pass the Interview on Microsoft Teams

How I Passed the Microsoft HackerRank Test in 2025 on My First Try