My 2025 Airbnb HackerRank Experience and Real Questions

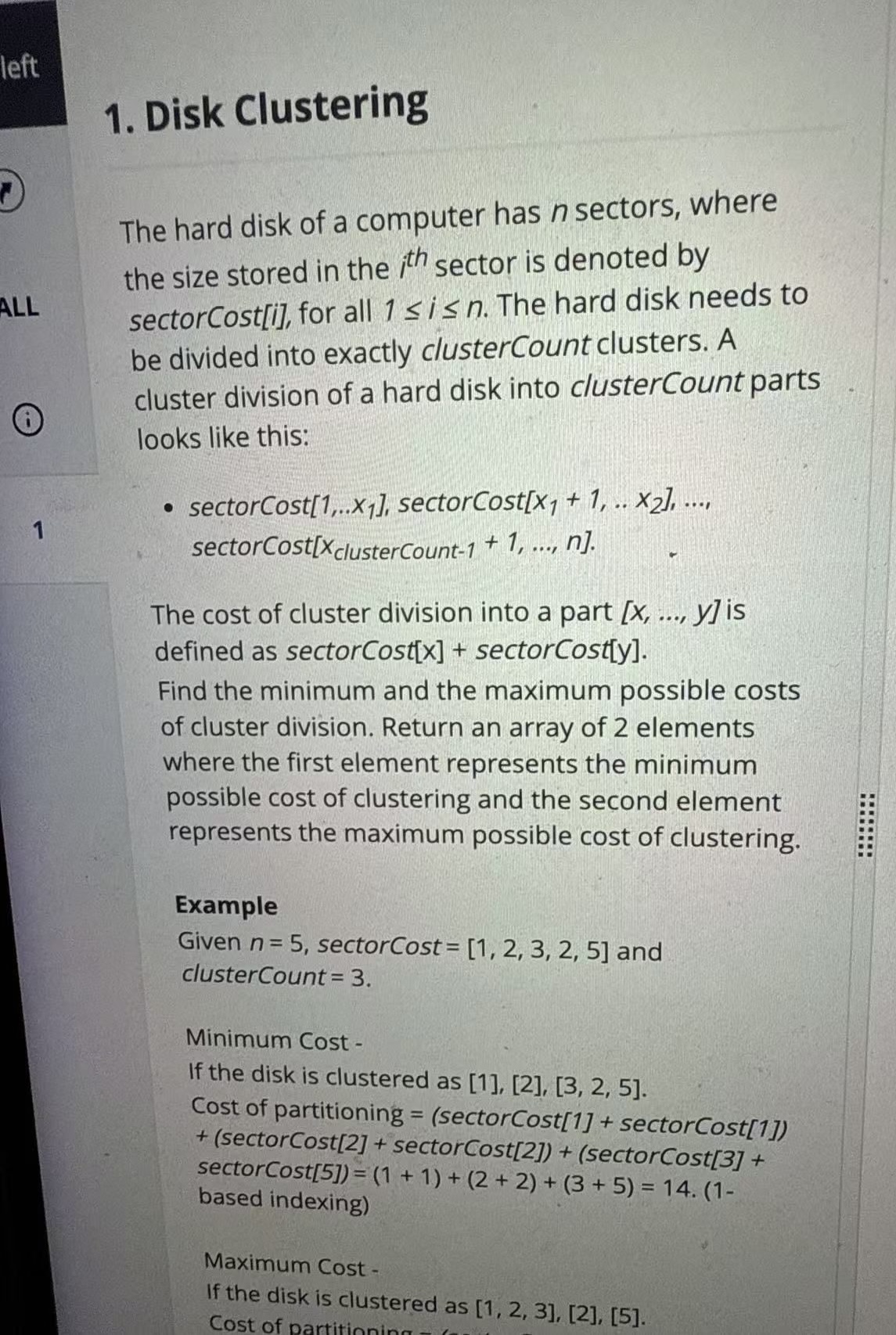

Airbnb’s entire OA consisted of a single question, “Disk Clustering,” with a 60-minute time limit. Even though there was only one question, I rated its difficulty Medium-High, and solving it took real effort.

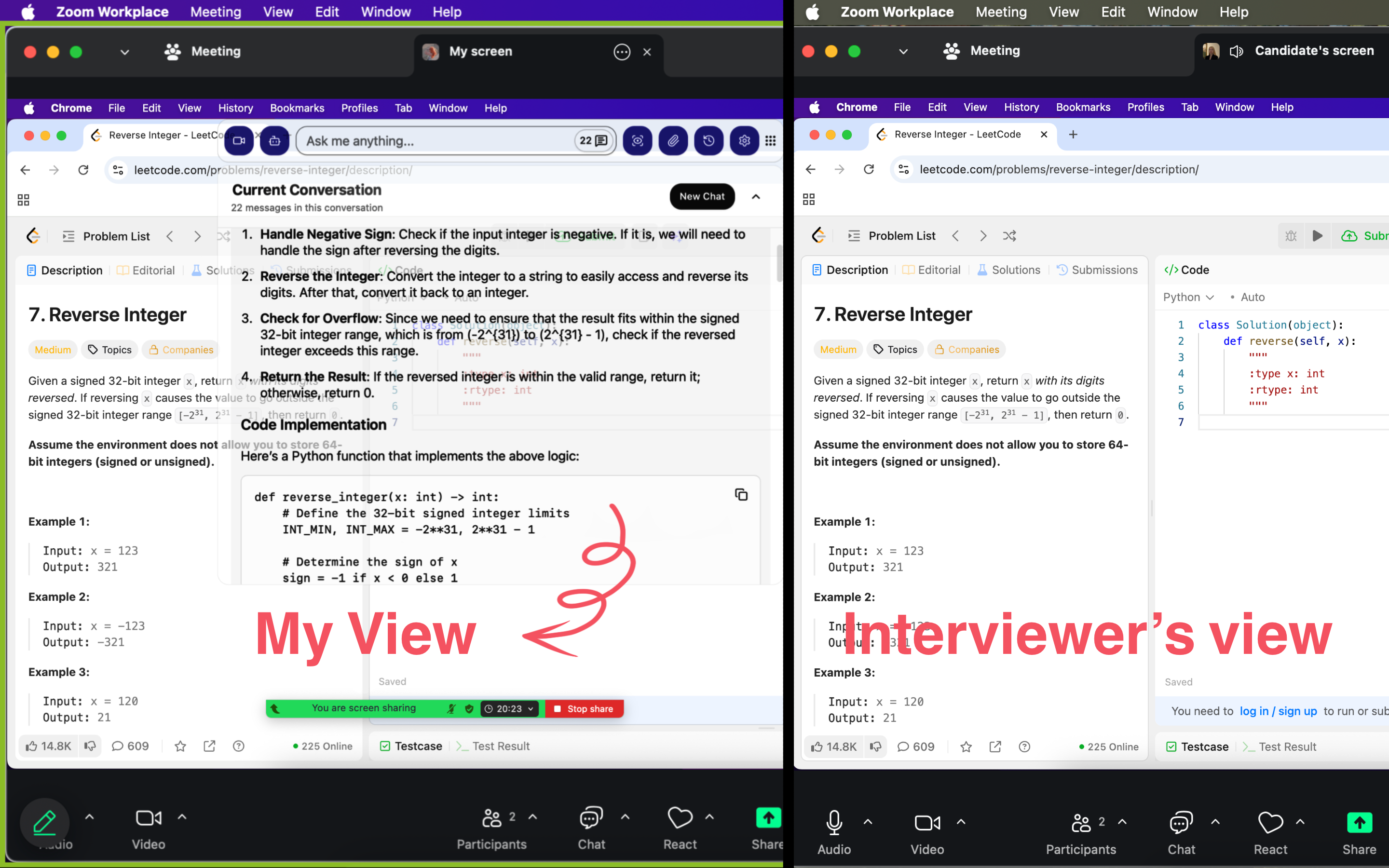

I’m really grateful to Linkjob.ai for helping me pass my interview, which is why I’m sharing my OA questions and experience here. Having an undetectable AI interview assistant for the HackerRank OA indeed provides a significant edge.

Airbnb HackerRank Question

Problem Understanding and Core Challenge

Data Definition

n: total number of disk sectors

sectorCost[i]: the cost of sector i, for 1 ≤ i ≤ n (the formulas below use 1-based indices)

clusterCount (k): the number of contiguous, non-empty clusters the sectors must be split into (so 1 ≤ k ≤ n)

Cluster Cost Definition

The cost of a cluster [x, …, y], a contiguous run of sectors from x to y, depends only on its two endpoints:

Cost(Cluster) = sectorCost[x] + sectorCost[y]
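Here is a minimal Python sketch that makes the cost definition concrete. The helper names cluster_cost and partition_cost are hypothetical and do not come from the problem statement. The code uses Python's 0-based indexing instead of the 1-based sectorCost[1] … sectorCost[n] used above. A single-sector cluster (x = y) counts its sector twice.

```python
def cluster_cost(sector_cost: list[int], x: int, y: int) -> int:
    """Cost of the cluster covering sectors x..y (0-based, inclusive)."""
    return sector_cost[x] + sector_cost[y]


def partition_cost(sector_cost: list[int], clusters: list[tuple[int, int]]) -> int:
    """Total cost of a partition given as (start, end) pairs, 0-based and inclusive."""
    return sum(cluster_cost(sector_cost, x, y) for x, y in clusters)


# Split [1, 3, 5, 1] into [1, 3] and [5, 1]: (1 + 3) + (5 + 1).
print(partition_cost([1, 3, 5, 1], [(0, 1), (2, 3)]))  # 10
# A one-sector cluster counts its sector twice: [1] costs 1 + 1.
print(partition_cost([1, 3, 5, 1], [(0, 0), (1, 3)]))  # 6
```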

Core Challenge: Total Cost Structure

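TotalCost has a fixed structure. If the array is split into k clusters, there are k - 1 internal split points pi. A split at pi means one cluster ends at sector pi and the next begins at sector pi + 1, so 1 ≤ pi ≤ n - 1.

The first sector always starts a cluster and the last sector always ends one, so those two terms appear in every partition. The total cost can therefore be expressed as:

TotalCost = sectorCost[1] + sectorCost[n] + Σ (sectorCost[pi] + sectorCost[pi + 1]), summed over the k - 1 split points pi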

Each internal split point pi contributes two cost terms: sectorCost[pi] (as the end of the previous cluster) and sectorCost[pi + 1] (as the start of the next cluster).

Thus, the problem reduces to:

Find k - 1 distinct split positions pi (1 ≤ pi ≤ n - 1) that minimize (or maximize) the sum of sectorCost[pi] + sectorCost[pi + 1] over all split points. Adjacent positions are allowed, because two neighboring splits simply produce a one-sector cluster.
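One way to check that the split-point formula matches the cluster definition is to enumerate every partition of a small array and compare the two computations. The sketch below is a brute-force check of the reduction and is not part of the solution. The function names are hypothetical. Indices are 0-based, so a split at p means one cluster ends at p and the next starts at p + 1.

```python
from itertools import combinations


def direct_cost(s: list[int], splits: tuple[int, ...]) -> int:
    """Sum of first + last sector cost over every cluster defined by the splits."""
    starts = [0] + [p + 1 for p in splits]
    ends = list(splits) + [len(s) - 1]
    return sum(s[a] + s[b] for a, b in zip(starts, ends))


def split_point_cost(s: list[int], splits: tuple[int, ...]) -> int:
    """Fixed endpoints plus s[p] + s[p + 1] for each split p."""
    return s[0] + s[-1] + sum(s[p] + s[p + 1] for p in splits)


def identity_holds(s: list[int], k: int) -> bool:
    # Every choice of k - 1 distinct positions in 0..n-2 is a valid partition.
    return all(
        direct_cost(s, splits) == split_point_cost(s, splits)
        for splits in combinations(range(len(s) - 1), k - 1)
    )


print(identity_holds([1, 3, 5, 1], 2))  # True
print(identity_holds([4, -2, 0, 7, 3], 3))  # True
```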

My Solution Approach: Simplify the Problem

Any k - 1 distinct positions form a valid partition, and choosing one split point does not change the cost contributed by any other. The problem therefore reduces to a simple sorting problem.

Step 1: Construct the “Pair Cost” Array

I first computed the sum of every pair of adjacent sectors and stored the results in a new array, pairCost:

pairCost[i] = sectorCost[i] + sectorCost[i + 1], for 1 ≤ i ≤ n - 1

The length of pairCost is n - 1. This array contains all possible split point costs. I only needed to select clusterCount - 1 values from this array.
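In Python, you can build pairCost in one line by zipping the array with a copy of itself shifted by one position. This is a minimal sketch with a hypothetical function name and 0-based indices.

```python
def pair_costs(sector_cost: list[int]) -> list[int]:
    """pairCost[i] = sectorCost[i] + sectorCost[i + 1]; the result has length n - 1."""
    return [a + b for a, b in zip(sector_cost, sector_cost[1:])]


print(pair_costs([1, 3, 5, 1]))  # [4, 8, 6]
```

For a single sector, the result is an empty list, because there are no possible split points.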

Step 2: Minimum Cost

To minimize the total cost, I selected the smallest clusterCount - 1 values from pairCost.

Sort pairCost in ascending order.

Take the first clusterCount - 1 elements and sum them to get MinSum.

Final minimum cost:

Minimum Cost = sectorCost[1] + sectorCost[n] + MinSum
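Here is a sketch of the minimum-cost step. It assumes a hypothetical function name, 0-based indexing, and 1 ≤ clusterCount ≤ n. When clusterCount is 1, the slice is empty and only the fixed endpoints remain.

```python
def min_cost(sector_cost: list[int], cluster_count: int) -> int:
    pair_cost = sorted(a + b for a, b in zip(sector_cost, sector_cost[1:]))
    # Fixed part: first and last sector. Variable part: the k - 1 cheapest split points.
    return sector_cost[0] + sector_cost[-1] + sum(pair_cost[: cluster_count - 1])


print(min_cost([1, 3, 5, 1], 2))  # 6
```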

Step 3: Maximum Cost

To maximize the total cost, I selected the largest clusterCount - 1 values from pairCost.

Use the sorted pairCost array.

Take the last clusterCount - 1 elements and sum them to get MaxSum.

Final maximum cost:

Maximum Cost = sectorCost[1] + sectorCost[n] + MaxSum
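The maximum-cost step mirrors the minimum, but it has one Python-specific trap. If you take the last clusterCount - 1 elements with a negative slice, the code breaks when clusterCount is 1, because pair_cost[-0:] is the whole list rather than an empty one. The sketch below uses a hypothetical function name and slices from an explicit start index instead.

```python
def max_cost(sector_cost: list[int], cluster_count: int) -> int:
    pair_cost = sorted(a + b for a, b in zip(sector_cost, sector_cost[1:]))
    # Slice from an explicit start index: pair_cost[-(cluster_count - 1):] would be
    # pair_cost[-0:], i.e. the whole list, when cluster_count == 1.
    start = len(pair_cost) - (cluster_count - 1)
    return sector_cost[0] + sector_cost[-1] + sum(pair_cost[start:])


print(max_cost([1, 3, 5, 1], 2))  # 10
print(max_cost([1, 3, 5, 1], 1))  # 2
```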

Summary and Result

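The key to this problem is modeling it mathematically and separating the fixed endpoint cost from the variable split-point cost. After that, it is simply an O(n log n) sorting problem, and the running time is dominated by sorting the n - 1 pair costs.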

Finally, I returned an array containing both values: [Minimum Cost, Maximum Cost].
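Putting the steps together, one possible end-to-end function sorts pairCost once and reads both ends of the sorted list. The function name, the ValueError check, and the signature are assumptions. The real HackerRank stub may name and validate things differently.

```python
def cluster_cost_range(sector_cost: list[int], cluster_count: int) -> list[int]:
    """Return [minimum total cost, maximum total cost] for cluster_count clusters."""
    n = len(sector_cost)
    if not 1 <= cluster_count <= n:
        raise ValueError("cluster_count must be between 1 and len(sector_cost)")

    fixed = sector_cost[0] + sector_cost[-1]  # first and last sector, always paid
    pair_cost = sorted(a + b for a, b in zip(sector_cost, sector_cost[1:]))
    m = cluster_count - 1  # number of split points to choose
    min_sum = sum(pair_cost[:m])  # m smallest pair costs
    max_sum = sum(pair_cost[len(pair_cost) - m:])  # m largest pair costs
    return [fixed + min_sum, fixed + max_sum]


print(cluster_cost_range([1, 3, 5, 1], 2))  # [6, 10]
```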

I have to say, Linkjob AI is really easy to use. I used it during the interview after testing its undetectable feature with a friend beforehand. With just a click of the screenshot button, the AI provided detailed solution frameworks and complete code answers for the coding problems on my screen. I’ve successfully passed the test, with the HackerRank platform not detecting me at all.

Overview of the Airbnb HackerRank Assessment

Assessment Format

Airbnb’s HackerRank online assessment is a timed coding test completed entirely on the HackerRank platform. There is no live interviewer, so you work through the task on your own.

The assessment covers only coding and problem-solving, with no multiple-choice questions or personality tests. After submission, the platform immediately shows how many test cases passed. A test case counts as passed only when the output is correct and the solution stays within the time and memory limits.

Time Allocation Strategy

For the “disk sector clustering cost” problem, I divided the 60-minute time limit into four phases: understanding, deriving the logic, coding, and validating. This helped maintain clarity and accuracy throughout the process.

Phase 1: Understanding the Problem and Rules (15 minutes)

The first 10 minutes were spent reading the prompt carefully line by line, highlighting constraints, simplifying the scenario on scratch paper, and manually computing sample outputs. The next 5 minutes were used to confirm input and output formats to prevent rework.

Phase 2: Logical Derivation and Strategy Validation (20 minutes)

This phase was the most critical. I examined various clustering patterns, compared their total costs, derived the core formula, and tested it against several edge cases, which exposed and corrected my initial misconceptions. This analysis eventually led me to the correct greedy strategy.

Phase 3: Coding and Syntax Checks (15 minutes)

I implemented the logic in Python, dedicating 10 minutes to the core solution and edge cases, followed by 5 minutes of syntax checks, naming review, and basic functionality validation.

Phase 4: Testing and Optimization (10 minutes)

I created typical cases, edge cases, large inputs, and special value scenarios to verify the output. Any issues identified during testing were corrected immediately to ensure all tests passed.
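For the testing phase, a randomized stress test against a brute-force solver is a cheap way to catch mistakes in the greedy formula. The brute force below tries every placement of the k - 1 splits and sums the endpoint costs directly. The random values deliberately include negatives. A final large input checks that the sort-based version finishes quickly. The sketch is illustrative only: the function names, value ranges, and input sizes are arbitrary assumptions.

```python
import random
from itertools import combinations


def fast(s: list[int], k: int) -> list[int]:
    """Sort-based greedy: [min total cost, max total cost]."""
    pair_cost = sorted(a + b for a, b in zip(s, s[1:]))
    fixed = s[0] + s[-1]
    m = k - 1
    return [fixed + sum(pair_cost[:m]), fixed + sum(pair_cost[len(pair_cost) - m:])]


def brute(s: list[int], k: int) -> list[int]:
    """Try every placement of k - 1 splits and sum endpoint costs cluster by cluster."""
    totals = []
    for splits in combinations(range(len(s) - 1), k - 1):
        starts = [0] + [p + 1 for p in splits]
        ends = list(splits) + [len(s) - 1]
        totals.append(sum(s[a] + s[b] for a, b in zip(starts, ends)))
    return [min(totals), max(totals)]


def stress(trials: int = 500, seed: int = 0) -> None:
    rng = random.Random(seed)
    for _ in range(trials):
        n = rng.randint(1, 8)
        k = rng.randint(1, n)
        s = [rng.randint(-10, 10) for _ in range(n)]  # negatives included on purpose
        assert fast(s, k) == brute(s, k), (s, k)
    # One large input to confirm the sort-based version stays fast.
    big = [rng.randint(1, 10**9) for _ in range(200_000)]
    fast(big, 100_000)
    print("all checks passed")


stress()
```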

Key Evaluation Areas

The problem tests four abilities, which align with Airbnb’s expectations for engineering candidates: problem decomposition, greedy reasoning, edge-case handling, and coding efficiency.

Problem Decomposition and Abstraction

Although the prompt uses a disk sector context, the essence of the problem is interval partitioning and cost optimization. The task evaluates the ability to remove surface-level details, form a mathematical model, distinguish between fixed and variable cost components, and structure the logic clearly.

Greedy Logic and Derivation

Brute-force enumeration is not viable, because there are C(n - 1, k - 1) ways to place the splits, which is far too many for large inputs. Instead, the problem rewards recognizing the pattern and deriving a greedy rule based on adjacent pair costs. Checking that rule against several examples confirms that it stays correct across different scenarios.

Edge Case Handling and Code Reliability

Hidden test cases highlight the importance of handling scenarios such as a single cluster, clusters with only one sector, minimum input sizes, and unusual values. Anticipating such cases and writing code that accommodates them is essential for passing all tests.
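You can turn the edge cases above into quick assertions. This minimal sketch assumes 0-based Python lists and 1 ≤ clusterCount ≤ n. The function name is hypothetical, and the expected values were worked out by hand from the cluster cost definition.

```python
def cluster_cost_range(sector_cost: list[int], cluster_count: int) -> list[int]:
    pair_cost = sorted(a + b for a, b in zip(sector_cost, sector_cost[1:]))
    fixed = sector_cost[0] + sector_cost[-1]
    m = cluster_count - 1
    return [fixed + sum(pair_cost[:m]), fixed + sum(pair_cost[len(pair_cost) - m:])]


# Single cluster: no split points, so min == max == first + last.
assert cluster_cost_range([1, 3, 5, 1], 1) == [2, 2]
# Every sector is its own cluster (n == clusterCount): all split points are taken.
assert cluster_cost_range([1, 3, 5, 1], 4) == [20, 20]
# Minimum input: one sector forms the cluster [x, x], which costs 2 * sectorCost[x].
assert cluster_cost_range([7], 1) == [14, 14]
# Equal values: every partition costs the same.
assert cluster_cost_range([2, 2, 2, 2], 3) == [12, 12]
# Zero and negative values need no special handling.
assert cluster_cost_range([0, -4, 3, -1], 2) == [-5, 1]
print("edge cases passed")
```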

Coding Efficiency and Clarity

Efficient data structures and clean implementation are valued. Python features such as list comprehensions and the built-in sorted() function keep the code both fast and readable on large inputs.
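If clusterCount is much smaller than n, a full sort does more work than needed. One alternative to the sorting approach described above is to use Python's heapq.nsmallest and heapq.nlargest. These functions select the k - 1 extreme pair costs without sorting everything. The Python documentation notes that they perform best for small selection sizes and recommends sorted() for larger ones. This is a sketch with a hypothetical function name.

```python
import heapq


def cluster_cost_range_heap(sector_cost: list[int], cluster_count: int) -> list[int]:
    """Same result as the sort-based version, selecting extremes with heapq instead."""
    fixed = sector_cost[0] + sector_cost[-1]
    pair_cost = [a + b for a, b in zip(sector_cost, sector_cost[1:])]
    m = cluster_count - 1  # heapq.nsmallest/nlargest return [] when m == 0
    return [
        fixed + sum(heapq.nsmallest(m, pair_cost)),
        fixed + sum(heapq.nlargest(m, pair_cost)),
    ]


print(cluster_cost_range_heap([1, 3, 5, 1], 2))  # [6, 10]
```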

Airbnb HackerRank Preparation Strategies

Strengthening Core Algorithms and Data Structure Foundations

First, greedy algorithms, especially problems involving interval partitioning, cost optimization, and finding minimum or maximum values. I spent time understanding the full “pattern finding, verification, and implementation” process, which helped me extract optimization ideas from concrete problem settings.

Second, arrays and string manipulation. I practiced sorting, slicing, summing, and other fundamental operations, and trained specifically on handling edge cases to stay calm when encountering irregular inputs.

Third, time-complexity optimization. I intentionally avoided brute-force thinking and prioritized efficient approaches, ensuring that my solutions could handle large input sizes. I paid particular attention to commonly used low-complexity techniques.

Targeted Practice

During the practice phase, I did not chase problem quantity. Instead, I selected problems aligned with Airbnb’s assessment style. I focused on medium-level LeetCode questions and practiced them by category.

I concentrated on greedy-algorithm topics to strengthen my ability to derive mathematical models from problem constraints.

I practiced problems involving continuous intervals to deepen my understanding of consecutive partitioning rules.

I also completed specialized training on edge cases, deliberately choosing problems with unusual inputs to improve code robustness.

For each problem, I derived the solution independently before checking any answers. After coding, I compared my approach with the optimal solution, summarizing where my reasoning stalled and what edge cases I had initially overlooked. This ensured that each problem helped me master an entire class of techniques.

Full Simulation of the Assessment Environment

Simulating the real test environment was a key part of my preparation, aimed at improving time management and maintaining logical rigor under pressure.

I used HackerRank mock tests or LeetCode timed contests, strictly setting a 60-minute limit to solve one or two medium-level algorithm problems. I followed the full real-assessment flow of reading, deriving logic, coding, and validating, and I allocated time according to the breakdown I had developed earlier.

After each simulation, I reviewed whether I finished within the allotted time, whether my reasoning took unnecessary detours, whether any hidden test cases failed due to missing edge-case handling, and whether performance met expectations.

Fine-Tuning and Habit Development

Given Airbnb’s emphasis on practical engineering quality, I practiced good coding habits throughout preparation.

I kept my code well-structured, with clear variable names and organized logic, and used concise syntax whenever possible to improve readability.

I strengthened my testing mindset: before coding, I listed possible test scenarios; after coding, I actively created diverse test cases instead of relying on platform tests to reveal issues.

I summarized common pitfalls into a personal checklist.

I also familiarized myself with the HackerRank editor, including shortcut keys and input-output rules, so no time would be wasted on tool-related problems during the real assessment.

Review and Error Consolidation

I placed significant importance on post-practice review and maintained a detailed error log. I categorized problems by type, cause of error, and correction strategy.

For problems where my reasoning stalled, I re-derived the core logic and summarized the transformation process from problem description to mathematical model.

For problems where edge-case handling failed, I extracted general techniques for managing similar boundary conditions.

For problems with inefficient solutions, I analyzed the key steps needed for complexity optimization.

I revisited my error log regularly to reinforce concepts, avoid repeating mistakes, and build reusable solution templates that improved both accuracy and speed.

FAQ

Does Airbnb’s HackerRank test require a webcam?

No, it does not require a webcam.

How did you handle implicit constraints that were not explicitly stated in the problem description?

I relied on the problem context and common patterns in programming assessments to make reasonable assumptions, and then I handled those assumptions defensively in the code. For example, the problem statement did not mention negative values, but I still made sure the code worked with zero and negative inputs and added comments documenting these assumptions. In the actual assessment, this helped me pass the hidden test cases. I did not lose points to an unstated assumption such as “all inputs are positive” or to an overlooked scenario such as n = clusterCount.

When there was spare time after coding, how did you use it effectively for self-checking?

I followed a three-step review process focused on scenario coverage, code robustness, and efficiency.

First, I quickly created 3–5 extreme test cases (including the smallest input, the largest input, and inputs with special values) to confirm that the outputs matched expectations.

Second, I reviewed implementation details: whether variable names were clear, whether there were any risks of index out-of-bounds errors, and whether boundary conditions were fully handled.

Finally, I rechecked the algorithm logic to see if there was a cleaner way to implement it, such as replacing manual loops with built-in functions or simplifying conditional statements. This not only improved readability but also reduced the chance of logical bugs.
