I Compiled Anthropic Concurrency Interview Questions for 2026

Anthropic’s interviews focus heavily on concurrency because its products depend on high-performance, reliable systems. Model training involves distributed computation across massive datasets, and inference services must handle thousands of parallel requests with minimal latency.

During my Anthropic interview, I encountered several rounds where concurrency was a key theme. In this article, I’ve categorized some real interview questions into four areas to break down their solution approaches: Coding, System Design, Algorithms and Data Structures, and Fundamentals and Concepts. These questions come from my own interview experience and others’ shared experiences.

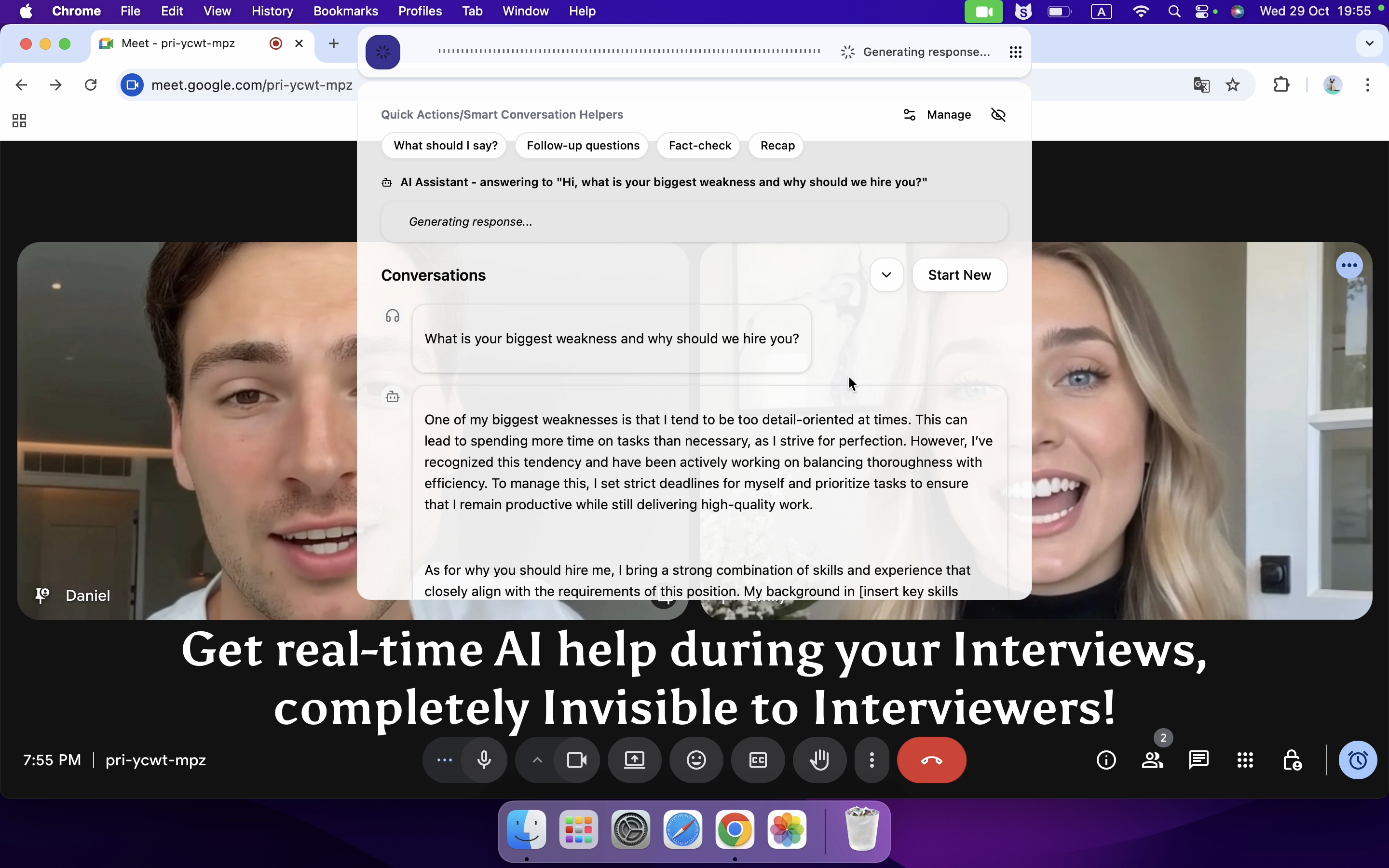

I’m really grateful to Linkjob.ai for helping me pass my interview, which is why I’m sharing my interview questions here. Having an undetectable AI interview assistant during the interview indeed provides a significant edge.

Anthropic Concurrency Interview Coding Questions

Web Crawler

Problem:

Implement a multithreaded web crawler using a thread pool that, given a root domain, crawls and collects all URLs under it. The task also requires writing the relevant code, explaining how asyncio can improve the crawler’s efficiency, and analyzing how Python’s GIL (Global Interpreter Lock) affects the choice between multithreading and multiprocessing in this scenario.

Concurrency Perspective:

The essence of this crawler problem is efficiency and resource scheduling under high concurrency. A thread pool suits crawling because the work is I/O-bound: while one thread waits on the network, others can run. However, Python’s GIL prevents threads from executing bytecode in parallel, so CPU-bound steps such as HTML parsing gain no speedup from multithreading, and threads competing for the GIL add switching overhead. Multiprocessing bypasses the GIL to achieve genuine parallelism, but it adds costs from memory isolation and inter-process communication.

asyncio’s coroutine model schedules a large number of I/O operations within a single thread through an event loop, avoiding the overhead of thread and process switching. This approach is well suited to I/O-intensive, high-concurrency crawling: it improves throughput, and because only one thread runs, there is no contention for the GIL.
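The event-loop approach described above can be sketched roughly as follows. This is a minimal illustration, not a production crawler: `crawl` and `fetch_links` are hypothetical names, and `fetch_links` is assumed to be an async callable that downloads a page and returns the hrefs found on it (in practice it would wrap an async HTTP client and an HTML parser).

```python
import asyncio
from urllib.parse import urljoin, urlparse


async def crawl(root, fetch_links, max_concurrency=10):
    """Crawl every URL on root's host; fetch_links(url) is awaitable and returns hrefs."""
    host = urlparse(root).netloc
    seen = {root}
    limit = asyncio.Semaphore(max_concurrency)  # cap the number of in-flight requests

    async def visit(url):
        async with limit:
            hrefs = await fetch_links(url)
        # Resolve relative links and keep only unseen same-host URLs.
        new = []
        for href in hrefs:
            link = urljoin(url, href)
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)  # safe: no await between the check and the add
                new.append(link)
        return new

    frontier = [root]
    while frontier:
        # Fetch one whole level of the site concurrently, then move to the next level.
        results = await asyncio.gather(*(visit(u) for u in frontier))
        frontier = [link for links in results for link in links]
    return seen
```

Because coroutines only switch at `await`, the shared `seen` set needs no lock here, which is one practical advantage over the threaded version.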

The thread pool itself involves concurrency management. Properly configuring pool size and parameters allows efficient use of multithreading while preventing resource exhaustion caused by excessive thread creation, further optimizing the crawler’s concurrent performance.
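A thread-pool version of the crawler might look like the sketch below. It assumes a hypothetical blocking function `fetch_links(url)` that returns the hrefs on a page; the name `crawl_with_threads` and the default pool size are illustrative choices, not something from the interview.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.parse import urljoin, urlparse


def crawl_with_threads(root, fetch_links, max_workers=8):
    """Breadth-first crawl of root's host; fetch_links(url) blocks and returns hrefs."""
    host = urlparse(root).netloc
    seen = {root}
    frontier = [root]
    # A fixed-size pool bounds thread creation however large the frontier grows.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while frontier:
            next_frontier = []
            # Workers only fetch; the main thread alone mutates `seen`, so no lock is needed.
            for url, hrefs in zip(frontier, pool.map(fetch_links, frontier)):
                for href in hrefs:
                    link = urljoin(url, href)
                    if urlparse(link).netloc == host and link not in seen:
                        seen.add(link)
                        next_frontier.append(link)
            frontier = next_frontier
    return seen
```

Keeping all bookkeeping in the main thread is a deliberate simplification. A design where workers enqueue new URLs themselves would need a lock or a thread-safe queue around the visited set.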

Parallel Processing

Problem:

Design and implement a parallel word segmentation system to efficiently process large-scale datasets. The system should use multiprocessing or multithreading to split data blocks in parallel, include a mechanism for task distribution and result merging, and explain how to avoid resource contention, such as shared memory conflicts, between processes or threads to ensure accuracy and integrity of the segmentation results.

Concurrency Perspective:

The core of this system is the use of concurrent computation to overcome the performance bottleneck of single-threaded processing on massive datasets. Multiprocessing or multithreading enables parallel splitting of data blocks across multiple compute units, significantly increasing segmentation throughput. However, concurrency introduces challenges of resource contention. When processes or threads share data storage, read-write conflicts may occur. Synchronization mechanisms such as inter-process communication (queues), thread locks, or lock-free data structures are required to prevent inconsistent results.

A well-designed task distribution strategy, for instance evenly splitting data by volume, further prevents workload imbalance and ensures that no process or thread is overloaded while others remain idle. This approach enables more efficient concurrent processing and makes the system better suited for large-scale dataset requirements.
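The split, distribute, and merge steps could be sketched like this. Whitespace splitting stands in for a real segmenter, and the function names and the `executor_cls` parameter are assumptions made for illustration.

```python
from concurrent.futures import ProcessPoolExecutor


def chunked(items, size):
    """Split a list into consecutive blocks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def segment_block(lines):
    """Worker: tokenize one block. str.split is a placeholder for a real segmenter."""
    return [line.split() for line in lines]


def parallel_segment(lines, workers=4, chunk_size=1000, executor_cls=ProcessPoolExecutor):
    """Distribute equal-sized blocks to workers, then merge results in the parent."""
    merged = []
    with executor_cls(max_workers=workers) as pool:
        # Each worker receives its own copy of a block, so nothing is shared or locked.
        # pool.map yields results in submission order, so the merge preserves input order.
        for block_tokens in pool.map(segment_block, chunked(lines, chunk_size)):
            merged.extend(block_tokens)
    return merged
```

When run as a script with the default process pool, the call should sit under an `if __name__ == "__main__":` guard so that platforms using the spawn start method can import the module safely. Only the parent process touches `merged`, which is what keeps the merged result consistent without locks.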

I also used LeetCode to practice concurrency interview questions, since some problems in my Anthropic interview were taken directly from LeetCode or were variations of them.

Anthropic Concurrency System Design Interview Questions

High-Concurrency Inference API Design

Problem: Design a large-scale distributed training system for LLMs (similar to Claude). The system should cover data parallelism, model parallelism, and fault tolerance. In line with Anthropic’s resource allocation strategies, explain how to ensure training consistency during fault recovery, and answer the question: “How would you optimize cross-node communication?” (Should reference specific approaches such as RDMA or gradient compression). Additionally, explain how this system would support a high-concurrency inference API, and how it would dynamically schedule GPU resources when traffic surges to guarantee API stability and inference efficiency.

Concurrency Perspective:

This problem focuses on end-to-end concurrency and distributed resource coordination, spanning both training and inference phases.

Training Phase:

Data parallelism enables multiple nodes to process partitioned training data concurrently, improving throughput, but requires concurrent gradient synchronization to avoid issues like stale or conflicting updates.

Model parallelism distributes large models across GPUs for concurrent computation, but requires careful coordination to prevent pipeline stalls.

Cross-node communication optimization (e.g., RDMA, gradient compression) addresses the network bottlenecks caused by frequent gradient/parameter exchanges, reducing latency and ensuring efficiency of distributed concurrent computation.

Fault tolerance and recovery are essential to preserve consistency during concurrent training, preventing single-node failures from disrupting the entire system.
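To make the gradient compression idea concrete, here is a small pure-Python sketch of top-k sparsification with error feedback, one common compression scheme. It is an illustration under simplifying assumptions (gradients as plain lists, hypothetical function names), not a description of how any particular training system implements it.

```python
def compress_top_k(gradient, residual, k):
    """Return (sparse_update, new_residual) keeping the k largest-magnitude entries."""
    # Error feedback: add back what earlier rounds left unsent before selecting.
    corrected = [g + r for g, r in zip(gradient, residual)]
    top = sorted(range(len(corrected)), key=lambda i: abs(corrected[i]), reverse=True)[:k]
    sparse = {i: corrected[i] for i in top}  # the only values sent over the network
    new_residual = [0.0 if i in sparse else v for i, v in enumerate(corrected)]
    return sparse, new_residual


def all_reduce_mean(sparse_updates, size):
    """Average the sparse updates from all workers into one dense gradient."""
    dense = [0.0] * size
    for update in sparse_updates:
        for i, v in update.items():
            dense[i] += v / len(sparse_updates)
    return dense
```

Each worker sends only `k` index-value pairs per step instead of the full gradient, and the residual carries the unsent remainder into later steps so that small components are delayed rather than lost.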

Inference Phase:

A high-concurrency inference API must ensure availability and efficiency under massive concurrent requests.

Load balancing prevents overload on individual nodes.

Caching reduces redundant computations to boost throughput.

Message queues absorb traffic spikes and smooth out load fluctuations.

Dynamic GPU scheduling is critical under traffic surges: strategies such as resource pooling, priority-based scheduling, and elastic scaling help prevent GPUs from becoming bottlenecks, ensuring low latency and stable throughput in high-concurrency inference.
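On the inference side, one way to picture how a queue absorbs bursts while keeping the GPU busy is request micro-batching. The sketch below is an assumption-laden illustration: `MicroBatcher` and `run_batch` are hypothetical names, and `run_batch` stands for an async call into a batched model endpoint.

```python
import asyncio


class MicroBatcher:
    """Group concurrent requests into batches for a (hypothetical) batched model call."""

    def __init__(self, run_batch, max_batch=8, max_wait=0.01, max_queue=1000):
        self.run_batch = run_batch   # async callable: list of prompts -> list of outputs
        self.max_batch = max_batch
        self.max_wait = max_wait
        # Bounded queue: when it is full, callers wait, which applies backpressure.
        self.queue = asyncio.Queue(maxsize=max_queue)

    async def infer(self, prompt):
        done = asyncio.get_running_loop().create_future()
        await self.queue.put((prompt, done))
        return await done

    async def serve(self):
        loop = asyncio.get_running_loop()
        while True:
            batch = [await self.queue.get()]
            deadline = loop.time() + self.max_wait
            # Fill the batch until it is full or the wait budget is spent.
            while len(batch) < self.max_batch:
                timeout = deadline - loop.time()
                if timeout <= 0:
                    break
                try:
                    batch.append(await asyncio.wait_for(self.queue.get(), timeout))
                except asyncio.TimeoutError:
                    break
            outputs = await self.run_batch([prompt for prompt, _ in batch])
            for (_, done), output in zip(batch, outputs):
                done.set_result(output)
```

`max_batch` and `max_wait` trade latency against throughput: larger values improve GPU utilization under load, while smaller values keep tail latency low when traffic is light. The batcher should be created inside a running event loop, with `serve()` started as a background task.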


Distributed Search System

Problem: Design a distributed search system capable of handling billions of documents and millions of QPS. Address the following: how to avoid hot shards, how to ensure efficient cross-shard merge & sort, and how to monitor key metrics like query latency, error rate, and index freshness.

Concurrency Perspective:

At its core, this is about building an architecture for extreme concurrency. Millions of QPS means the system must handle massive concurrent queries.

Avoiding hot shards prevents single-node overload under high concurrency.

Efficient merge & sort across shards ensures consistency and scalability in concurrent data aggregation.

Monitoring latency, error rate, and freshness is vital for stability in high-concurrency environments.
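For the cross-shard merge and sort step, a coordinator typically receives one ranked list per shard and needs only the global top results. A minimal sketch, assuming each shard returns `(score, doc_id)` tuples already sorted by descending score (the function name and tuple layout are illustrative):

```python
import heapq
from itertools import islice


def merge_top_k(shard_results, k):
    """Merge per-shard hit lists, each already sorted by descending score."""
    # heapq.merge performs the k-way merge lazily, so only k merged hits are materialized.
    merged = heapq.merge(*shard_results, key=lambda hit: hit[0], reverse=True)
    return list(islice(merged, k))
```

Because each shard only has to return its own top `k`, the coordinator handles at most `k` times the number of shards in hits, regardless of how many documents each shard holds.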

Data Processing

Problem: Design a real-time search recommendation system that supports live query suggestions (triggered after typing 2–3 characters). Requirements include: millions of QPS, <50ms latency, and 99.9% availability. The system must support prefix matching and fuzzy matching (to handle typos), deliver personalized recommendations based on user history, and update trending queries in real time. Explain how to achieve concurrency safety and stability with distributed deployment.

Concurrency Perspective:

This problem centers on maintaining low latency and real-time performance under massive concurrency.

Edge caching (CDN) reduces backend pressure by serving frequent queries closer to users.

Concurrency-safe data structures (e.g., distributed tries) support multithreaded real-time reads and writes without corruption.

Sharded service deployment distributes query load across nodes, allowing the system to scale to millions of QPS while keeping latency under 50 ms.

Incremental updates to trending queries prevent full reindexing from blocking concurrent service.
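The incremental trending update could be sketched as a sliding window of per-bucket counters, so that each new query costs a constant-time update instead of a rebuild. The class name and the bucket scheme are assumptions for illustration, and the sketch is single-threaded; a real service would add a lock or keep one instance per shard.

```python
from collections import Counter, deque


class TrendingQueries:
    """Sliding-window query counts updated incrementally, one time bucket at a time."""

    def __init__(self, window_buckets=60):
        self.window_buckets = window_buckets
        self.buckets = deque()   # (bucket_id, Counter) pairs, oldest first
        self.totals = Counter()  # running totals over the whole window

    def record(self, query, bucket_id):
        # Assumes bucket ids (e.g. epoch seconds) arrive in non-decreasing order.
        if not self.buckets or self.buckets[-1][0] != bucket_id:
            self.buckets.append((bucket_id, Counter()))
        self.buckets[-1][1][query] += 1
        self.totals[query] += 1
        # Expire buckets that fell out of the window by subtracting their counts.
        while self.buckets[0][0] <= bucket_id - self.window_buckets:
            _, expired = self.buckets.popleft()
            self.totals.subtract(expired)
            self.totals += Counter()  # in-place add drops entries that fell to zero

    def top(self, n):
        return self.totals.most_common(n)
```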

Batch Processing Service

Problem: Design a batch processing service for large-scale workloads (e.g., preprocessing data for LLM training). Requirements include task prioritization, concurrent scheduling, and resource isolation. Explain how to prevent high-priority tasks from being blocked by low-priority ones, and how containerization (e.g., Kubernetes) can support concurrent deployment and elastic resource scaling, ensuring multiple batch tasks run efficiently without resource contention.

Concurrency Perspective:

The focus here is concurrent scheduling, resource fairness, and throughput.

Task prioritization ensures urgent tasks (e.g., preprocessing for imminent training runs) aren’t blocked by lower-priority jobs.

Containerization (K8s) allows safe concurrent deployment with resource quotas that prevent one job from monopolizing GPU/CPU/memory.

Elastic scaling adjusts resources dynamically: auto-expansion during peak demand improves concurrent throughput, while contraction during idle times saves cost, striking a balance between concurrency efficiency and utilization.
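One simple way to keep low-priority jobs from blocking urgent ones is to hold some worker slots in reserve. The sketch below assumes priority 0 means "high" and uses hypothetical names throughout. In a Kubernetes deployment the same idea would usually be expressed through priority classes and resource quotas rather than application code.

```python
import heapq
import itertools


class PriorityScheduler:
    """Pick the next job by priority while holding some slots back for urgent work."""

    def __init__(self, total_slots, reserved_for_high):
        self.total_slots = total_slots
        self.reserved_for_high = reserved_for_high
        self.running = 0
        self.heap = []                # (priority, seq, job); lower number runs first
        self.seq = itertools.count()  # tie-breaker keeps FIFO order within a priority

    def submit(self, job, priority):
        heapq.heappush(self.heap, (priority, next(self.seq), job))

    def next_job(self):
        """Return a job to start, or None if nothing may start right now."""
        if not self.heap:
            return None
        priority, _, job = self.heap[0]
        free = self.total_slots - self.running
        # Priority 0 is "high"; other jobs may not consume the reserved slots.
        needed = 1 if priority == 0 else 1 + self.reserved_for_high
        if free < needed:
            return None
        heapq.heappop(self.heap)
        self.running += 1
        return job

    def finish(self):
        """Call when a running job completes to release its slot."""
        self.running -= 1
```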

Anthropic Concurrency Algorithms and Data Structures Interview Questions

Top K Frequent Queries from Data Stream

Problem: Design an algorithm to continuously track the top K most frequent queries from an incoming data stream. The solution must remain efficient in both time and space complexity, even as the data stream grows indefinitely. Also explain how to handle performance challenges when the data volume spikes.

Concurrency Perspective:

The core challenge lies in maintaining and updating the Top K results efficiently under high-concurrency data stream input. Because the stream is usually fed by many producers at once, its “continuous inflow” amounts to concurrent write operations, so the design must use concurrency-safe data structures (e.g., lock-protected priority queues or distributed hash tables) to prevent race conditions during multithreaded or multiprocess writes.

If using a min-heap for Top K, insertion and deletion operations must be thread-safe.

For large-scale streams requiring distributed processing, frequency counts must be aggregated across multiple nodes concurrently, ensuring accurate global Top K results while avoiding single-node overload and balancing concurrency efficiency with accuracy.
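Since an unbounded stream rules out keeping an exact count for every query, a bounded-memory approximation is a natural fit. The sketch below uses the Space-Saving algorithm with a lock around updates. This is one possible approach (Count-Min Sketch plus a heap is another), and the class name is hypothetical.

```python
import threading


class SpaceSavingTopK:
    """Approximate heavy hitters with at most `capacity` counters (Space-Saving)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.counts = {}
        self.lock = threading.Lock()  # serializes writers from multiple threads

    def add(self, item):
        with self.lock:
            if item in self.counts:
                self.counts[item] += 1
            elif len(self.counts) < self.capacity:
                self.counts[item] = 1
            else:
                # Evict the smallest counter; the newcomer inherits its count + 1,
                # so counts can overestimate but never underestimate.
                victim = min(self.counts, key=self.counts.get)
                self.counts[item] = self.counts.pop(victim) + 1

    def top(self, k):
        with self.lock:
            return sorted(self.counts.items(), key=lambda kv: kv[1], reverse=True)[:k]
```

Memory stays fixed at `capacity` entries however long the stream runs. The linear scan for the eviction victim keeps the sketch short; a production version would track the minimum with a heap or a bucketed linked list.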

Inverted Index Implementation and Efficiency Optimization

Trie-based Autocomplete System

Problem: Implement an autocomplete system using a Trie data structure that returns real-time query suggestions as users type prefixes. The system should optimize Trie construction and query performance while handling high levels of concurrent queries.

Concurrency Perspective:

The concurrency challenge is enabling high-throughput concurrent Trie access when serving millions of simultaneous prefix queries.

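Concurrent reads of an unchanging Trie are safe on their own; conflicts arise only when a write (such as an insert or a weight update) overlaps with reads or other writes, so updates need synchronization.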

A read-write lock strategy allows multiple threads to read concurrently, while write operations (e.g., updating hot terms) temporarily block reads, balancing throughput and consistency.

To scale further, the Trie can be partitioned by prefix across nodes, distributing load and preventing overload on a single node, ensuring low-latency autocomplete responses under high concurrency.
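A compact Trie with weighted suggestions might look like the following. Python’s standard library has no read-write lock, so this sketch guards both reads and writes with one mutex as a simplification; the class name, the `"$"` end marker, and the weight scheme are all illustrative assumptions.

```python
import threading


class AutocompleteTrie:
    """Prefix trie with per-term weights; one lock guards both reads and writes."""

    def __init__(self):
        self.root = {}
        self.lock = threading.Lock()

    def insert(self, term, weight=1):
        # Assumes terms never contain "$", which marks end-of-term and stores the weight.
        with self.lock:
            node = self.root
            for ch in term:
                node = node.setdefault(ch, {})
            node["$"] = node.get("$", 0) + weight

    def suggest(self, prefix, limit=5):
        with self.lock:
            node = self.root
            for ch in prefix:
                if ch not in node:
                    return []
                node = node[ch]
            # Depth-first walk below the prefix node to collect complete terms.
            found, stack = [], [(prefix, node)]
            while stack:
                text, current = stack.pop()
                for key, child in current.items():
                    if key == "$":
                        found.append((child, text))
                    else:
                        stack.append((text + key, child))
        # Highest weight first; ties broken alphabetically.
        found.sort(key=lambda pair: (-pair[0], pair[1]))
        return [text for _, text in found[:limit]]
```

For read-heavy traffic, a common alternative is to build a new Trie in the background and swap the root reference, so that readers never take a lock at all.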

Anthropic Concurrency Fundamentals and Concepts Interview Questions

GIL Impact on Multithreading/Multiprocessing

Problem: Explain the working principle of Python’s Global Interpreter Lock (GIL) and analyze its performance impact in concurrent scenarios such as multithreaded web crawlers and multiprocessing data processing. Compare the applicability of multithreading and multiprocessing in CPU-bound and I/O-bound tasks, and explain how to avoid performance bottlenecks caused by the GIL.

Concurrency Perspective:

The GIL is a fundamental concept in Python concurrency. In CPython, it allows only one thread per process to execute Python bytecode at a time. As a result, multithreading cannot achieve true parallelism in CPU-bound tasks and can only use thread switching to take advantage of I/O idle time.

In I/O-bound scenarios (e.g., multithreaded crawlers), the GIL has minimal impact: when a thread blocks on I/O (such as network requests), it releases the GIL, allowing other threads to run, improving concurrency efficiency.

In CPU-bound scenarios (e.g., large-scale data computation), the GIL severely limits multithreading performance, sometimes making it slower than single-threaded execution because of lock contention and switching overhead. In such cases, multiprocessing (which gives each process its own interpreter and GIL, achieving true parallelism) is the better option. Coroutines (an event loop within a single thread) reduce context-switching overhead for I/O-bound work but do not speed up CPU-bound code.

Understanding the GIL is a prerequisite for designing effective Python concurrency strategies, as it determines which concurrency model fits a given workload.
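A quick way to see the effect for yourself is to run the same CPU-bound function through a thread pool and a process pool and compare wall-clock time. The helper below is a sketch with hypothetical names; actual timings depend on the machine and Python build, so none are claimed here.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit):
    """CPU-bound work: naive count of primes below `limit`."""
    return sum(
        1 for n in range(2, limit)
        if all(n % d for d in range(2, int(n ** 0.5) + 1))
    )


def timed_run(executor_cls, limits, workers=4):
    """Run count_primes over `limits` with the given executor; return (results, seconds)."""
    start = time.perf_counter()
    with executor_cls(max_workers=workers) as pool:
        results = list(pool.map(count_primes, limits))
    return results, time.perf_counter() - start
```

Calling `timed_run(ThreadPoolExecutor, [200_000] * 4)` and then `timed_run(ProcessPoolExecutor, [200_000] * 4)` under an `if __name__ == "__main__":` guard should, on a multi-core machine running standard CPython, show the process pool finishing noticeably faster, while the thread pool takes roughly as long as running the four calls one after another.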

ConcurrentHashMap vs Hashtable

Problem: Compare the implementation principles of Java’s ConcurrentHashMap and Hashtable, and analyze their differences in concurrency safety, performance, and feature sets. Explain how to choose the appropriate concurrent hash table in high-concurrency scenarios (e.g., caching in distributed systems).

Concurrency Perspective:

Both are thread-safe hash tables, but their concurrency control strategies are fundamentally different, which directly affects performance under high concurrency.

Hashtable uses the synchronized keyword to lock entire methods, creating a global lock. When multiple threads attempt concurrent reads/writes, they all compete for the same lock, resulting in very low throughput.

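ConcurrentHashMap (JDK 1.8+) uses a combination of arrays + linked lists/red-black trees + CAS + synchronized, applying locks at the bucket level: an insert into an empty bucket uses CAS, an update to a non-empty bucket synchronizes only on that bucket’s head node, and reads generally do not block. This allows multiple threads to operate on different buckets concurrently, significantly improving throughput.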

In high-concurrency scenarios (e.g., distributed caches), ConcurrentHashMap is preferred when performance and frequent reads/writes are critical. Hashtable may still be suitable for legacy codebases or low-concurrency environments. Understanding the concurrency control mechanisms of both is key when selecting a high-concurrency data storage solution.
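The difference in lock granularity can be illustrated in Python with lock striping, where the key space is split into stripes that each have their own lock. This is an analogy only: it is not how `ConcurrentHashMap` is implemented (striping is coarser than per-bucket locking), the class and method names are hypothetical, and under CPython’s GIL it demonstrates the structure rather than a parallel speedup.

```python
import threading


class StripedDict:
    """Dict split into stripes, each guarded by its own lock instead of one global lock."""

    def __init__(self, stripes=16):
        self.locks = [threading.Lock() for _ in range(stripes)]
        self.shards = [{} for _ in range(stripes)]

    def _index(self, key):
        return hash(key) % len(self.shards)

    def get(self, key, default=None):
        i = self._index(key)
        with self.locks[i]:
            return self.shards[i].get(key, default)

    def compute(self, key, fn, default=None):
        """Atomically replace the value with fn(old); other stripes are not blocked."""
        i = self._index(key)
        with self.locks[i]:
            value = fn(self.shards[i].get(key, default))
            self.shards[i][key] = value
            return value
```

Setting `stripes=1` degenerates into the Hashtable-style design, where every operation contends for the same lock.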

Data Consistency in Distributed Search Index

Problem: Explain the concept of data consistency in distributed search indexes (e.g., Elasticsearch sharded indexes). Describe how to ensure consistency during scenarios such as concurrent multi-node writes and node failures (e.g., eventual consistency vs. strong consistency). Compare the pros, cons, and applicable scenarios of different consistency strategies.

Concurrency Perspective:

The core issue is data synchronization and conflict resolution under concurrent multi-node operations.

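When multiple nodes write to the index concurrently (e.g., multiple crawler nodes updating documents simultaneously), a replication protocol is required to order the writes and synchronize shard replicas, avoiding corruption caused by race conditions. Some systems use a consensus protocol such as Raft for this; Elasticsearch routes each write through the shard’s primary copy, which then forwards it to the replicas.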

During node failures, fast leader election and data recovery are necessary to maintain consistent query results across concurrent requests.

Choosing eventual consistency improves write throughput under concurrency, but queries may temporarily return stale data. Elasticsearch search, for example, is near real-time: a newly indexed document becomes searchable only after the next index refresh. Choosing strong consistency (e.g., enforced by distributed locks or synchronous replication) guarantees data accuracy but reduces concurrent performance. Understanding these trade-offs is central to designing concurrency strategies in distributed search systems, as it directly affects both availability and reliability.
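One widely used way to resolve conflicting concurrent writes without global locks is optimistic concurrency control, where each write states the document version it was based on. The source does not name this technique, so treat the sketch below as an illustrative assumption; `VersionedIndex` is a hypothetical in-memory stand-in for a single shard.

```python
import threading


class VersionConflict(Exception):
    """Raised when a write was based on an outdated version of the document."""


class VersionedIndex:
    """In-memory stand-in for one shard; every document carries a version number."""

    def __init__(self):
        self.docs = {}  # doc_id -> (version, body)
        self.lock = threading.Lock()

    def get(self, doc_id):
        with self.lock:
            return self.docs.get(doc_id, (0, None))

    def put(self, doc_id, body, expected_version):
        """Write only if the caller saw the latest version; otherwise reject."""
        with self.lock:
            current, _ = self.docs.get(doc_id, (0, None))
            if current != expected_version:
                raise VersionConflict(
                    f"{doc_id}: expected {expected_version}, found {current}"
                )
            self.docs[doc_id] = (current + 1, body)
            return current + 1
```

A writer that receives `VersionConflict` re-reads the document, reapplies its change, and retries, so a lost update becomes an explicit, recoverable error instead of silent corruption.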

FAQ

Is Linkjob AI undetectable during technical rounds?

It’s a desktop app with OS-level integration, so it only shows up on my own screen. Screen sharing and active tab detection can't see it at all. So it could keep staying undetectable during technical rounds.

Will Anthropic’s 2025 concurrency interview test knowledge of specific programming languages?

It focuses on language-agnostic concurrency principles first, but often expects use of Python. You can use other languages, but you may struggle to finish the tasks in time.

Are there 2025 updates to Anthropic’s concurrency interview format I should note?

Yes. 2025 brings more focus on practical coding exercises that solve real concurrency problems.
