Top 20 Real Anthropic Interview Questions I Compiled for 2026

After experiencing the Anthropic hiring process firsthand, I realized just how difficult it is to pass so many interview rounds. Overall, I feel that Anthropic places a high value on original thinking and deep AI expertise.

Below, I will share 20 real interview questions from various categories. Some are from my own experience, while others were gathered from the accounts of other candidates during my preparation.

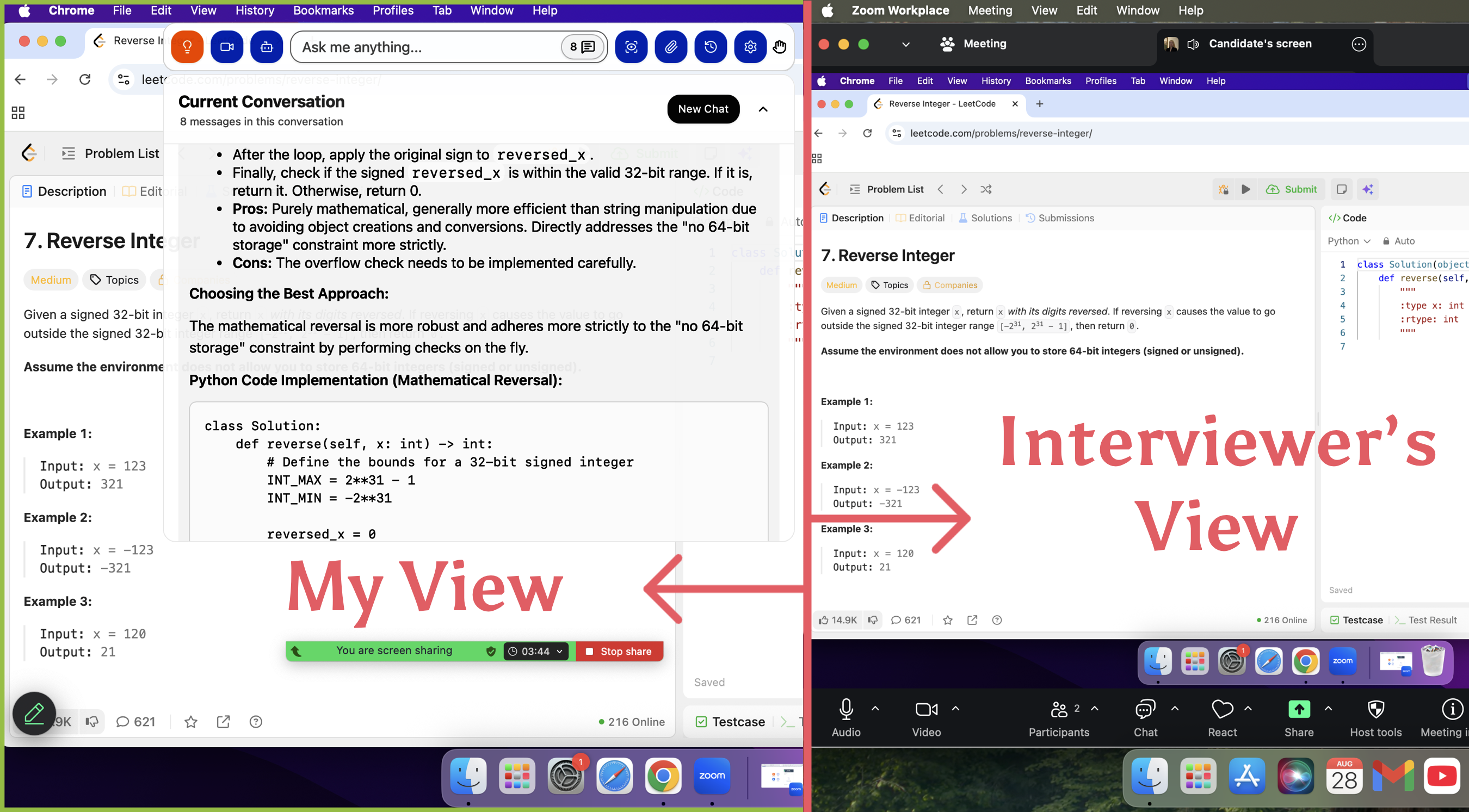


Anthropic Interview Questions

When I started preparing for Anthropic, I noticed their interview questions cover a wide range of topics. They want to see how I handle technical problems, AI concepts, agentic AI scenarios, and ethical dilemmas. Here’s a quick table that helped me organize my study plan:

| Category | Description | Examples / Focus Areas |
|---|---|---|
| Technical | Coding, algorithms, system design, and scalable AI systems | Debugging, architecture, data privacy, efficiency |
| AI-specific | Machine learning, prompt engineering, AI safety, and explainable AI | Model fairness, Claude prompt design, AI research, explainable AI applications |
| Ethical/Behavioral | Ethics, responsibility, AI safety, and cultural fit | Decision-making, AI safety reviews, ethical considerations, agentic AI concepts |

Anthropic Technical Questions

How would you design a scalable architecture for deploying large language models in production?

I’d start with a modular, containerized architecture using a load balancer to manage traffic across horizontally scalable inference nodes. CI/CD pipelines would support version control and safe rollouts. To maintain low latency, I’d implement caching, request batching, and autoscaling. Reliability is supported through observability tools, failure isolation, and circuit breakers.
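
Of the reliability pieces above, the circuit breaker is the easiest to show in a few lines. This is a minimal, single-threaded sketch rather than the API of any particular serving framework: the `CircuitBreaker` class, its `failure_threshold` and `reset_timeout` parameters, and the cached-response fallback are all hypothetical. After enough consecutive failures it stops calling the backend and serves the fallback until a cooldown passes, then lets one trial request through.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Hypothetical minimal circuit breaker for calls to an inference backend."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[[], str], fallback: Callable[[], str]) -> str:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                return fallback()  # open: do not touch the failing backend
            self.opened_at = None  # cooldown over: allow one trial request
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            return fallback()
        self.failures = 0  # any success closes the circuit again
        return result


# Example with a fake clock so the state transitions are deterministic.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])

def flaky_backend() -> str:
    raise TimeoutError("inference node timed out")

def healthy_backend() -> str:
    return "model output"

def cached_fallback() -> str:
    return "cached response"

breaker.call(flaky_backend, cached_fallback)   # first failure
breaker.call(flaky_backend, cached_fallback)   # second failure opens the circuit
print(breaker.call(healthy_backend, cached_fallback))  # still open: cached response
now[0] = 11.0
print(breaker.call(healthy_backend, cached_fallback))  # trial succeeds: model output
```

Injecting the clock keeps the behavior testable; a production version would also need thread safety and one breaker per backend.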

Describe a time you debugged a complex distributed system. What was your approach?

In a previous role, I diagnosed a bottleneck in a streaming pipeline. I started by reviewing logs, metrics, and distributed traces to identify the issue. After reproducing it in staging, I found misconfigured gRPC timeouts between services. I fixed the configs, added fallback mechanisms, and improved monitoring. I use a structured, tool-driven approach in all debugging.

How do you ensure data privacy and security when handling sensitive user data in AI applications?

I use layered security: end-to-end encryption, RBAC, audit logging, and minimal data retention. Where needed, I apply anonymization and design systems with transparency and explainability in mind. I also explore techniques like differential privacy or secure computation to reduce exposure during training and inference.
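
A concrete instance of differential privacy is the Laplace mechanism: add noise with scale sensitivity / epsilon to a query result. The sketch below applies it to a simple count, where one person can change the answer by at most 1. The function names, the `epsilon` value, and the opt-in data are illustrative assumptions.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(flags: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.
    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sum(flags) + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)
opted_in = [True] * 120 + [False] * 80  # hypothetical per-user flags
print(private_count(opted_in, epsilon=0.5, rng=rng))  # a noisy value near 120
```

Smaller `epsilon` means more noise and stronger privacy, which is the exposure-versus-accuracy trade-off in miniature. For real workloads a vetted differential privacy library is safer than hand-rolled noise, since naive floating-point implementations of this mechanism have known weaknesses.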

Explain the trade-offs between model accuracy and computational efficiency in real-world deployments.

In one recommendation system, I applied knowledge distillation to reduce model size and inference time while maintaining most of the original accuracy. Trade-offs depend on use-case constraints like latency, cost, and reliability. I sometimes use hybrid strategies, for example combining on-device models with cloud-based fallbacks to balance performance and efficiency.
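
In the standard form of knowledge distillation, the small student model is trained to match the teacher's temperature-softened output distribution. The framework-free sketch below computes only that soft-target term (KL divergence scaled by T²); the logits are made up, and a real training loop would compute this on tensors and usually combine it with the ordinary hard-label loss.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by
    T^2 so gradient magnitudes stay comparable across temperatures."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return (temperature ** 2) * kl


teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 1.0, 4.0]
print(distillation_loss(close_student, teacher))  # small
print(distillation_loss(far_student, teacher))    # much larger
```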

Anthropic AI Skills Questions

How would you approach prompt engineering for Claude to maximize output relevance?

I treat prompt engineering like programming: an iterative cycle. I begin with clear, specific prompts, test the outputs, then analyze and refine the prompt’s wording, structure, and examples. This process continues until the outputs consistently meet the intended goals.

What are the key considerations for evaluating AI model fairness and bias?

I focus on ensuring data quality and diversity to cover different demographics and scenarios. I apply explainability techniques to identify sources of bias and assess the model’s decisions. Ethical implications are central, so transparency and continuous monitoring are key to mitigating bias.
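
One way to make a fairness check concrete is to compare how often the model makes a positive prediction for each group (demographic parity). This is only one of several fairness metrics; the function names and the toy data below are hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Fraction of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    positives: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups))         # {'a': 0.75, 'b': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A small gap does not prove a model is fair; it is one signal to pair with per-group error rates and the explainability work described above.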

Describe your process for conducting an AI safety review on a new model.

I start with a thorough risk assessment to identify possible harms, followed by stress testing and adversarial evaluations to check robustness. Throughout, I incorporate human oversight to detect unintended behaviors and ensure compliance with safety standards.

How do you stay updated with the latest advancements in AI research, and how do you apply them?

I read research papers regularly, engage with AI communities and conferences, and experiment with new techniques in sandbox environments. These insights are applied to optimize models and systems, improving both performance and alignment with user needs.


Anthropic Agentic AI Interview Questions

How would you design an agentic AI system that can autonomously adapt to new tasks?

I would use a modular architecture with dynamic task-switching capabilities, supported by persistent memory for context retention. Adaptation relies on self-monitoring loops, environment feedback, and clear subgoal decomposition. Explainable decision paths help track how the agent adjusts its strategy over time.

What are the ethical implications of deploying agentic AI in high-stakes environments?

Key concerns include unintended behavior, lack of accountability, and decision opacity. I prioritize transparency, continuous validation, and human oversight. Ethical deployment requires defining operational boundaries, enforcing constraints, and ensuring systems can be audited and corrected when needed.

Describe a scenario where agentic AI could fail. How would you mitigate the risks?

An agent might misinterpret vague instructions, forget critical context, or misuse tools. I would mitigate these risks through goal disambiguation, memory validation checks, and sandboxed tool use. Continuous monitoring and intervention hooks help catch failures early and adjust agent behavior in real time.
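
One small piece of sandboxed tool use is a gate that validates every tool call the agent proposes before anything executes. In this sketch the registry, the `read_file` tool, and its in-memory file store are hypothetical stand-ins; the point is that unknown tools and unexpected arguments are rejected instead of run.

```python
from dataclasses import dataclass
from typing import Any, Callable


class ToolCallRejected(Exception):
    """Raised when an agent proposes a tool call outside the allowlist."""


@dataclass
class ToolSpec:
    func: Callable[..., Any]
    allowed_args: frozenset


def read_file(path: str) -> str:
    # Hypothetical sandboxed tool: reads only from an in-memory store,
    # never from the real filesystem.
    store = {"notes.txt": "meeting at 10"}
    return store.get(path, "")


# Only tools registered here can ever run.
REGISTRY = {"read_file": ToolSpec(read_file, frozenset({"path"}))}


def run_tool_call(name: str, args: dict) -> Any:
    spec = REGISTRY.get(name)
    if spec is None:
        raise ToolCallRejected(f"tool {name!r} is not on the allowlist")
    unexpected = set(args) - spec.allowed_args
    if unexpected:
        raise ToolCallRejected(f"unexpected arguments: {sorted(unexpected)}")
    return spec.func(**args)


print(run_tool_call("read_file", {"path": "notes.txt"}))  # meeting at 10
try:
    run_tool_call("delete_file", {"path": "notes.txt"})
except ToolCallRejected as err:
    print(err)  # tool 'delete_file' is not on the allowlist
```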

How do you balance autonomy and control in agentic AI systems?

I design with layered control: agents operate independently within defined limits but escalate complex or uncertain decisions. Human-in-the-loop mechanisms remain essential, especially for high-impact actions. Clear policies, auditing, and fallback behaviors help ensure safe and predictable operation.
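
The layered-control idea can be written as a small routing policy: the agent acts alone only when an action's impact is low enough and its confidence clears a per-impact threshold; everything else goes to a human. The impact levels, thresholds, and names below are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Impact(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Minimum confidence needed to act without a human; None means always escalate.
AUTONOMY_THRESHOLDS = {Impact.LOW: 0.7, Impact.MEDIUM: 0.9, Impact.HIGH: None}


@dataclass
class ProposedAction:
    description: str
    impact: Impact
    confidence: float  # the agent's own estimate, in [0, 1]


def route(action: ProposedAction) -> str:
    """Return 'execute' if the agent may act alone, otherwise 'escalate'."""
    threshold = AUTONOMY_THRESHOLDS[action.impact]
    if threshold is None or action.confidence < threshold:
        return "escalate"
    return "execute"


for action in [
    ProposedAction("summarize a support ticket", Impact.LOW, 0.85),
    ProposedAction("issue a refund", Impact.MEDIUM, 0.85),
    ProposedAction("delete a production table", Impact.HIGH, 0.99),
]:
    print(f"{action.description}: {route(action)}")
```

Self-reported confidence is an imperfect signal, which is why high-impact actions in this sketch escalate regardless of confidence.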

Anthropic GenAI Interview Questions

How do you evaluate the creativity of a generative AI model?

I combine human evaluation with task-specific benchmarks and real-world performance. Creativity is assessed based on novelty, coherence, and utility within context. I also measure diversity across outputs and consistency with intent to ensure the model generates both original and relevant content.
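
A common, simple proxy for output diversity is distinct-n: the number of unique n-grams across a set of generations divided by the total number of n-grams. It captures lexical variety only, not novelty or usefulness, so it complements human evaluation rather than replacing it. Whitespace tokenization here is a simplifying assumption.

```python
def distinct_n(outputs: list[str], n: int = 2) -> float:
    """Ratio of unique n-grams to total n-grams across all outputs (0 to 1)."""
    total = 0
    unique: set = set()
    for text in outputs:
        tokens = text.lower().split()
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


repetitive = ["the cat sat", "the cat sat", "the cat sat"]
varied = ["the cat sat", "a dog ran", "birds sang loudly"]
print(distinct_n(repetitive))  # 2 unique bigrams out of 6
print(distinct_n(varied))      # 1.0
```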

What are the main challenges in preventing generative AI from producing harmful content?

Key challenges include filtering biased training data, handling adversarial prompts, and managing ambiguity in ethical boundaries. I rely on a combination of data curation, output monitoring, and clear policy constraints. Post-generation filtering and user feedback loops help refine behavior over time.

How would you fine-tune a generative model for a specific domain?

I begin by collecting high-quality, domain-specific data and aligning it with the model’s objective. The fine-tuning process includes pre-processing, model adaptation, and evaluation on relevant tasks. I validate with both automated metrics and expert review to ensure domain relevance and fluency.
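
The pre-processing step often means deduplicating and filtering examples and holding out a validation split before any training run. This sketch assumes the data arrives as (prompt, completion) pairs and writes JSON lines with `prompt` and `completion` fields; that record layout, the length filter, and the hash-based split are illustrative choices, not the input format of any particular fine-tuning service.

```python
import hashlib
import json


def prepare_examples(pairs: list[tuple[str, str]], min_chars: int = 20,
                     val_fraction: float = 0.1) -> tuple[list[str], list[str]]:
    """Deduplicate and filter (prompt, completion) pairs, then split them into
    train and validation JSON lines using a stable hash of the prompt."""
    seen = set()
    train, val = [], []
    for prompt, completion in pairs:
        prompt, completion = prompt.strip(), completion.strip()
        key = (prompt.lower(), completion.lower())
        if key in seen or len(completion) < min_chars:
            continue  # skip exact duplicates and answers too short to teach much
        seen.add(key)
        record = json.dumps({"prompt": prompt, "completion": completion})
        # Hashing the prompt keeps the split stable across runs and keeps
        # repeated prompts on the same side of the split.
        bucket = int(hashlib.sha256(prompt.encode("utf-8")).hexdigest(), 16) % 100
        if bucket < val_fraction * 100:
            val.append(record)
        else:
            train.append(record)
    return train, val


pairs = [
    ("What does clause 4.2 cover?", "Clause 4.2 covers early termination fees."),
    ("What does clause 4.2 cover?", "Clause 4.2 covers early termination fees."),  # duplicate
    ("Is this binding?", "Yes."),  # too short to be useful
]
train, val = prepare_examples(pairs)
print(len(train) + len(val))  # 1
```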

Describe a method to improve the diversity of outputs in generative AI.

I use sampling techniques such as top-k, top-p (nucleus), or temperature adjustment to promote variability. I also vary prompts or input framing to explore different response modes. Careful tuning ensures that increased diversity doesn’t compromise coherence or task relevance.
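
The three sampling controls compose naturally: temperature rescales the logits, top-k keeps only the k most likely tokens, and top-p keeps the smallest set whose cumulative probability reaches p. The pure-Python sketch below applies them in that order over a toy vocabulary; real inference stacks do this on tensors, and the function names and ordering here are assumptions rather than any specific library's behavior.

```python
import math
import random
from typing import Optional


def filtered_distribution(logits: dict, temperature: float = 1.0,
                          top_k: Optional[int] = None,
                          top_p: Optional[float] = None) -> dict:
    """Apply temperature, then top-k, then top-p to token logits and
    return the renormalized probabilities of the surviving tokens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(weights.values())
    # (probability, token) pairs, most likely first
    ranked = sorted(((w / total, tok) for tok, w in weights.items()), reverse=True)

    if top_k is not None:
        ranked = ranked[:top_k]
        norm = sum(p for p, _ in ranked)
        ranked = [(p / norm, tok) for p, tok in ranked]

    if top_p is not None:
        kept, cumulative = [], 0.0
        for p, tok in ranked:
            kept.append((p, tok))
            cumulative += p
            if cumulative >= top_p:
                break  # smallest prefix whose mass reaches top_p
        ranked = kept

    norm = sum(p for p, _ in ranked)
    return {tok: p / norm for p, tok in ranked}


def sample_token(logits: dict, rng: random.Random, **settings) -> str:
    dist = filtered_distribution(logits, **settings)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


logits = {"blue": 2.0, "green": 1.0, "red": 0.5, "purple": -1.0}
print(filtered_distribution(logits, top_k=2))    # only blue and green survive
print(filtered_distribution(logits, top_p=0.9))  # blue, green, and red survive
print(sample_token(logits, random.Random(0), temperature=0.7, top_p=0.9))
```

Lower temperature sharpens the distribution before the cutoffs apply, so the same `top_p` keeps fewer tokens; that interaction is what the "careful tuning" above has to manage.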

Anthropic LLM Interview Questions

How do you handle hallucinations in large language models like Claude?

I use retrieval-augmented generation to ground responses in factual sources and reduce fabrication. I also incorporate confidence scoring, prompt constraints, and post-generation filtering. For high-stakes use cases, I include human review loops and track model performance against verified outputs.
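
Retrieval-augmented generation grounds the prompt in retrieved passages before the model answers. The sketch below uses bag-of-words cosine similarity purely for illustration (real systems typically use embedding models and a vector index) and builds a prompt that tells the model to answer only from the sources. The documents and prompt wording are hypothetical, and no model is called.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def grounded_prompt(query: str, docs: list[str], k: int = 2) -> str:
    sources = retrieve(query, docs, k)
    numbered = "\n".join(f"[{i}] {s}" for i, s in enumerate(sources, start=1))
    return (
        "Answer using only the sources below. If they do not contain the answer, "
        "say you don't know.\n\n"
        f"Sources:\n{numbered}\n\nQuestion: {query}"
    )


docs = [
    "The refund window is 30 days from the date of purchase.",
    "Support is available Monday through Friday.",
    "Gift cards cannot be exchanged for cash.",
]
print(grounded_prompt("How many days is the refund window?", docs, k=1))
```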

What strategies would you use to optimize prompt design for LLMs?

I use an iterative workflow: start with a clear prompt, test output quality, and refine based on observed errors or inconsistencies. Techniques include few-shot examples, role specification, and explicit output-format instructions. I document what works and build prompt templates for repeatable use.
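
A reusable few-shot template keeps the role, instructions, and examples in one place so that changes can be tested and versioned. This is a plain string-building sketch; the `FewShotTemplate` class, its layout, and the example data are assumptions, not a format Claude requires.

```python
from dataclasses import dataclass, field


@dataclass
class FewShotTemplate:
    role: str
    instructions: str
    examples: list = field(default_factory=list)  # (input, output) pairs

    def render(self, user_input: str) -> str:
        parts = [self.role, self.instructions]
        for example_input, example_output in self.examples:
            parts.append(f"Input: {example_input}\nOutput: {example_output}")
        parts.append(f"Input: {user_input}\nOutput:")
        return "\n\n".join(parts)


classifier = FewShotTemplate(
    role="You are a support-ticket classifier.",
    instructions="Label each ticket as POSITIVE, NEGATIVE, or NEUTRAL. Reply with the label only.",
    examples=[
        ("The new dashboard is fantastic!", "POSITIVE"),
        ("My order arrived broken.", "NEGATIVE"),
    ],
)
print(classifier.render("Where can I find my invoice?"))
```

Because the template is data, each variant can be run against the same test inputs during the refine step and the best-performing version kept.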

Explain the importance of context window size in LLM performance.

Larger context windows allow models to maintain coherence across longer conversations or documents, reducing repetition and drift. However, filling a large window also increases compute cost, memory usage, and latency. I decide how much context to include based on task needs, using summarization or chunking when efficiency is a concern.
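
For chunking, a common approach is to split a long document into overlapping pieces so context at the boundaries is not lost, then summarize or process each piece. Word counts stand in for tokens here as a simplifying assumption; a real pipeline would count tokens with the model's tokenizer. The function name is hypothetical.

```python
def chunk_words(text: str, max_words: int, overlap: int) -> list[str]:
    """Split text into chunks of at most `max_words` words, where consecutive
    chunks share `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("need 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(text, max_words=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```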

How do you evaluate the ethical risks associated with deploying LLMs?

I assess potential risks around misinformation, privacy leakage, and bias amplification. My review includes data source audits, adversarial prompt testing, and explainability checks. I also consider downstream impacts and build safeguards to align model outputs with acceptable use policies.

Anthropic Interview Overview

Expectations for Candidates

Anthropic's interview process stands out from those at other companies. The questions go deeper and test more than technical skills. Here’s what I noticed:

Anthropic sets high standards. Most candidates have advanced degrees or years of experience in AI and machine learning.

The process is tough. I faced technical coding challenges, system design problems, and AI safety scenarios.

They care about AI safety and ethics. I had to show I understood responsible AI development.

Anthropic wanted to see how I solved problems, not just whether I knew the answer. They expected me to demonstrate strong technical skills, like coding and system design. They also looked for clear communication and a genuine interest in AI. I made sure to research the company and ask thoughtful questions during my interviews. Anthropic also valued soft skills, including emotional intelligence, authentic communication, and storytelling.

Anthropic’s Values

Anthropic’s values shape every part of their interview process. They want to see original answers written without AI assistance. Here’s what I found important:

Anthropic asks applicants not to use AI help in their applications. They want to see my real communication skills.

They look for independent thinking and emotional intelligence. These are things AI can’t do.

The company wants to find people who show passion and creativity. They believe AI assistance can hide a candidate’s true potential.

By banning AI use, Anthropic hopes to see my unique ideas and opinions.

I noticed that they focus heavily on AI safety, ethics, and human qualities, which makes the Anthropic interview process challenging. They want to know how I think, not just what I know. That’s why practicing with real AI interview questions helped me prepare for the unexpected.

Anthropic Interview Preparation Tips

Practice Strategies

I began by researching the company’s mission and values, which helped me align my answers with what Anthropic wanted. I set up mock interview sessions with friends or mentors. Practicing these interviews helped me get used to the pressure and the types of questions I might face.

I also reviewed technical concepts and coding techniques using online resources. I made sure to prepare questions for the interviewer, which demonstrated my interest in AI and agentic systems.

Here’s my go-to list for mastering Anthropic interviews:

Research Anthropic’s mission and values.

Review technical AI concepts and coding techniques.

Prepare thoughtful questions for the interviewer.

Use the STAR method to answer behavioral questions.

Build a strong portfolio with AI and agentic projects.

Dress confidently and show a positive demeanor.

I also joined online tech communities and followed open-source AI projects on GitHub. These places helped me learn new AI techniques and keep my skills sharp. I practiced explaining complex AI and agentic concepts in simple language, which helped me communicate clearly during interviews.

Research Anthropic

Research is more than just reading the company website. I wanted to know how the interview process worked and what made Anthropic different. Here’s what helped me most:

I studied the interview structure. Anthropic uses an online coding test, a face-to-face coding session, and a virtual onsite with three parts: a research brainstorm, a take-home assignment using their API, and a culture fit session.

I practiced quick coding. Anthropic cares about speed and practical problem-solving, not just hard algorithms.

I explored Anthropic’s focus on AI safety and interpretability. The research brainstorm tested my creativity and understanding of these topics.

I got familiar with their products, especially Claude. I learned how it works and how Anthropic approaches privacy and safety in its design.

I read about their reference check process. It includes written feedback and sometimes calls with my references.

I checked out research programs in alignment and interpretability. This gave me more context for the interview.

Communication

Anthropic values real, authentic communication. They want to hear my own voice:

During interviews, I focused on being clear and honest about my experiences and motivations.

Anthropic wants to see how I think and what I care about:

I shared personal stories and explained my interest in AI safety. This helped me stand out and showed I was a good fit.

Mindset

Anthropic looks for people who can work well in teams and care about the impact of AI. That's why I reminded myself to stay calm, listen carefully, and show my willingness to learn. I treated every question as a chance to show my passion and creativity.

| Mindset Tip | Why It Matters |
|---|---|
| Stay positive | Shows resilience and adaptability |
| Be authentic | Builds trust with interviewers |
| Embrace feedback | Helps you grow and improve |
| Show curiosity | Demonstrates passion for learning |

FAQ

What makes Anthropic interviews different from other AI companies?

I noticed Anthropic interviews focus more on originality and ethics. They want to see my real thinking, not just technical skills. I had to show I understood AI safety and could explain my ideas clearly.

How can I handle unexpected questions during the interview?

I stay calm and take a moment to think. If I get stuck, I break the question into smaller parts. Practicing with Linkjob helped me get used to surprises and answer with confidence.

Should I use AI tools to prepare for Anthropic interviews?

I use AI tools for preparation, practice and feedback. Anthropic wants my own answers, so I never use AI to write responses during the real interview. Practicing on my own helps me sound authentic.

How do I show my passion for AI safety and ethics?

I share stories from my projects or studies. I talk about why AI safety matters to me. I ask thoughtful questions about Anthropic’s work. This shows I care about their mission.

See Also

My Step-by-Step Guide to Beat the Anthropic Interview Process in 2025

My Simple Steps to Ace the Anthropic Concurrency Interview in 2025

My 2025 Anthropic Software Engineer Interview Process and Real Questions

How I Turned Codesignal Anthropic Practice Into a Winning Routine in 2025