My 2025 Anthropic SWE Interview Experience and Questions

I recently completed my entire SWE interview process with Anthropic. My overall impression was that their interview format differed significantly from what is typically seen at traditional tech companies. They focused heavily on assessing practical skills over rote patterns. The questions across the rounds were very broad, and the follow-up questions were incredibly in-depth.

As a result, it was essential to have a systematic understanding of topics like system design, retrieval algorithms, distributed consistency, and performance optimization.

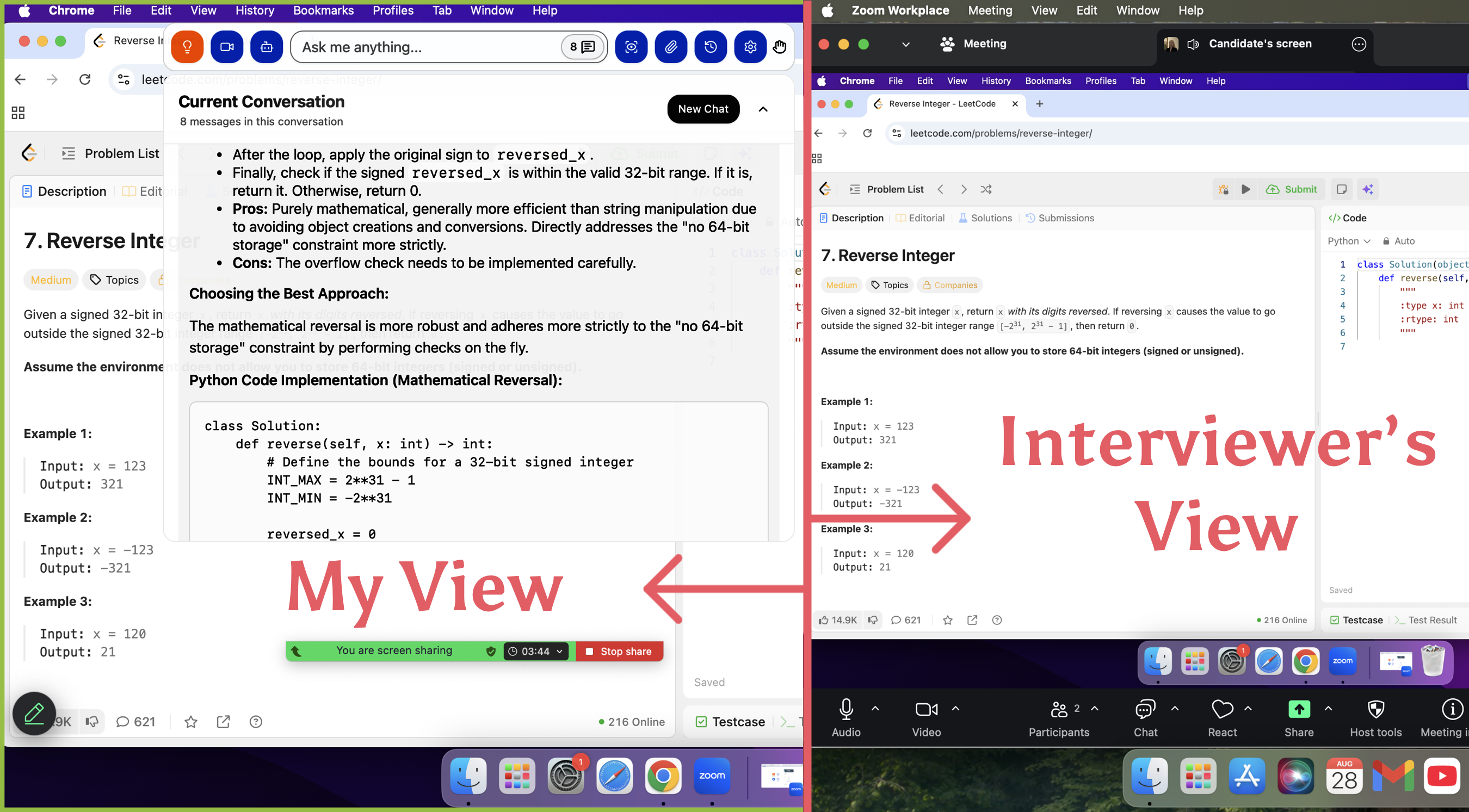

I am really grateful for the tool Linkjob.ai, and that's also why I'm sharing my entire interview experience here. Having an undetectable AI assistant during the interview is indeed very convenient.

Now, I’d like to share a detailed breakdown of my interview process and the specific questions I was asked in each round. I hope this helps others who are preparing.

Anthropic Software Engineer Interview Timeline

Here’s how my interview journey unfolded:

Recruiter Call (30 minutes)

OA (90 minutes)

Hiring Manager Call (1 hour)

Virtual Onsite (4 hours)

Anthropic Software Engineer Interview Stages

Recruiter Call

The discussion primarily centered on my background and motivations. The interviewer was particularly interested in gauging my passion for Anthropic and my grasp of their core principles. We also touched upon my career goals.

Online Assessment

This round was 90 minutes long, with one question divided into four levels. I had to complete the previous level and pass all unit tests to unlock the next one. This meant I had to constantly modify the existing code throughout the process.

I felt that each individual function wasn't difficult on its own, but with the four levels building on each other and the time needed to fully understand the prompt, the time felt a bit tight.

Here are the original questions I encountered:

Overall Task Description:

My task was to implement a simplified in-memory database, completing it sequentially according to the specifications for Level 1 through Level 4. Each level had to pass all tests before the next one could be unlocked. The system received a series of queries and ultimately output a string array containing the return values of all queries, with each query corresponding to one operation.

Level 1: Basic Record Operations

The foundational layer of the in-memory database contains records. Each record is accessed by a unique string identifier, or key. A record can contain multiple pairs of field and value (both field and value are of string type). It supports the following three types of operations:

SET <key> <field> <value>: Inserts a "field - value" pair into the record corresponding to the key. If the field already exists in the record, the old value is replaced with the new one. If the record does not exist, a new one is created. This operation returns an empty string.

GET <key> <field>: Returns the value associated with the field in the record corresponding to the key. If the record or field does not exist, it returns an empty string.

DELETE <key> <field>: Removes the field from the record corresponding to the key. If the field is successfully deleted, it returns "true". If the key or field does not exist, it returns "false".

Example

queries = [
    ["SET", "A", "B", "E"],
    ["SET", "A", "C", "F"],
    ["GET", "A", "B"],
    ["GET", "A", "D"],
    ["DELETE", "A", "B"],
    ["DELETE", "A", "D"]
]

The return values after execution are, in order: "" (SET operation), "" (SET operation), "E" (GET operation, retrieves the value of field B from record A), "" (GET operation, record A has no field D), "true" (DELETE operation, successfully deleted field B from record A), "false" (DELETE operation, record A has no field D).
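A minimal Python sketch of Level 1, assuming records can be held as a dict of dicts and that each query arrives as a list of strings as in the example. The names `InMemoryDB` and `run_level1` are my own, not part of the assessment. Dropping a record once its last field is deleted is also an assumption; the prompt does not say either way.

```python
class InMemoryDB:
    """Level 1 sketch: key -> {field: value}, all strings."""

    def __init__(self):
        self.records = {}

    def set(self, key, field, value):
        # Create the record on first use, then insert or overwrite the field.
        self.records.setdefault(key, {})[field] = value
        return ""

    def get(self, key, field):
        # A missing record or field both yield an empty string.
        return self.records.get(key, {}).get(field, "")

    def delete(self, key, field):
        record = self.records.get(key)
        if record is None or field not in record:
            return "false"
        del record[field]
        if not record:
            # Assumption: an empty record is removed entirely.
            del self.records[key]
        return "true"


def run_level1(queries):
    db = InMemoryDB()
    # A dispatch table keeps later levels easy to add.
    handlers = {"SET": db.set, "GET": db.get, "DELETE": db.delete}
    return [handlers[op](*args) for op, *args in queries]
```

Routing operations through a dispatch table means each later level only adds entries instead of growing one long conditional.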

Level 2: Displaying Record Fields Based on Filters

The database supports displaying data based on filters, with the addition of the following two operations:

SCAN <key>: Returns a string of fields for the record corresponding to the key, in the format "<field1>(<value1>), <field2>(<value2>), ..." (fields are sorted alphabetically). If the record does not exist, it returns an empty string.

SCAN_BY_PREFIX <key> <prefix>: Returns a string of fields for the record corresponding to the key where the field names start with the specified prefix. The format is the same as SCAN (fields are sorted alphabetically).

Example

queries = [
    ["SET", "A", "BC", "E"],
    ["SET", "A", "BD", "F"],
    ["SET", "A", "C", "G"],
    ["SCAN_BY_PREFIX", "A", "B"],
    ["SCAN", "A"],
    ["SCAN_BY_PREFIX", "B", "B"]
]

The output after execution is ["", "", "", "BC(E), BD(F)", "BC(E), BD(F), C(G)", ""].
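The two scan operations can share one formatting helper, since SCAN is just SCAN_BY_PREFIX with an empty prefix. The sketch below is one way to write it in Python. The names `format_fields` and `run_level2` are hypothetical, and "alphabetically" is assumed to mean Python's default string ordering.

```python
def format_fields(record, prefix=""):
    """Render matching fields as 'field(value)' pairs, sorted by field name."""
    return ", ".join(
        f"{field}({value})"
        for field, value in sorted(record.items())
        if field.startswith(prefix)
    )


def run_level2(queries):
    records = {}  # key -> {field: value}
    out = []
    for op, key, *args in queries:
        if op == "SET":
            field, value = args
            records.setdefault(key, {})[field] = value
            out.append("")
        elif op == "SCAN":
            # A missing record behaves like an empty one.
            out.append(format_fields(records.get(key, {})))
        elif op == "SCAN_BY_PREFIX":
            out.append(format_fields(records.get(key, {}), args[0]))
    return out
```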

I had a great experience using Linkjob AI during the OA. It provided the logic, the full code, and helped with debugging. It went undetected, and I passed smoothly despite the tight time limit with its help.

Level 3: TTL (Time-to-Live) Configuration

The database now supports timelines for records and fields, as well as TTL settings. Timestamped versions of previous level operations have been added, and backward compatibility must be maintained. The new operations are as follows:

SET_AT <key> <field> <value> <timestamp>: Similar to SET, but with a specified operation timestamp. Returns an empty string.

SET_AT_WITH_TTL <key> <field> <value> <timestamp> <ttl>: Similar to SET, but also sets a TTL (Time-to-Live) for the "field - value" pair. The pair is valid for the period [timestamp, timestamp + ttl). Returns an empty string.

DELETE_AT <key> <field> <timestamp>: Similar to DELETE, but with a specified operation timestamp. If the field exists and is successfully deleted, it returns "true". If the key or field does not exist at that timestamp (for example, because the field has expired), it returns "false".

GET_AT <key> <field> <timestamp>: Similar to GET, but with a specified operation timestamp.

SCAN_AT <key> <timestamp>: Similar to SCAN, but with a specified operation timestamp.

SCAN_BY_PREFIX_AT <key> <prefix> <timestamp>: Similar to SCAN_BY_PREFIX, but with a specified operation timestamp.

Example 1

queries = [
    ["SET_AT_WITH_TTL", "A", "BC", "E", "1", "9"],
    ["SET_AT_WITH_TTL", "A", "BC", "E", "5", "10"],
    ["SET_AT", "A", "BD", "F", "5"],
    ["SCAN_BY_PREFIX_AT", "A", "B", "14"],
    ["SCAN_BY_PREFIX_AT", "A", "B", "15"]
]

The output after execution is ["", "", "", "BC(E), BD(F)", "BD(F)"].

Example 2

queries = [
    ["SET_AT", "A", "B", "C", "1"],
    ["SET_AT_WITH_TTL", "X", "Y", "Z", "2", "15"],
    ["GET_AT", "X", "Y", "3"],
    ["SET_AT_WITH_TTL", "A", "D", "E", "4", "10"],
    ["SCAN_AT", "A", "13"],
    ["SCAN_AT", "X", "16"],
    ["SCAN_AT", "X", "17"],
    ["DELETE_AT", "X", "Y", "20"]
]

The output after execution is ["", "", "Z", "", "B(C), D(E)", "Y(Z)", "", "false"].
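One way to handle TTL is lazy expiry: store an expiry time next to each value and filter at read time instead of actively purging. The sketch below assumes that approach and covers only the timestamped operations; the non-timestamped ones needed for backward compatibility are left out for brevity. The names `run_level3`, `live_fields`, and `fmt` are hypothetical.

```python
def fmt(fields, prefix=""):
    """Render 'field(value)' pairs sorted by field name, filtered by prefix."""
    return ", ".join(
        f"{f}({v})" for f, v in sorted(fields.items()) if f.startswith(prefix)
    )


def run_level3(queries):
    records = {}  # key -> {field: (value, expires_at or None)}
    out = []

    def live_fields(key, now):
        # A field is visible while now < expires_at, i.e. [timestamp, timestamp + ttl).
        return {
            f: v
            for f, (v, expires_at) in records.get(key, {}).items()
            if expires_at is None or now < expires_at
        }

    for op, key, *args in queries:
        if op == "SET_AT":
            field, value, _ts = args
            records.setdefault(key, {})[field] = (value, None)
            out.append("")
        elif op == "SET_AT_WITH_TTL":
            field, value, ts, ttl = args
            records.setdefault(key, {})[field] = (value, int(ts) + int(ttl))
            out.append("")
        elif op == "GET_AT":
            field, ts = args
            out.append(live_fields(key, int(ts)).get(field, ""))
        elif op == "DELETE_AT":
            field, ts = args
            was_live = field in live_fields(key, int(ts))
            # Drop the entry either way; an expired field still reports "false".
            records.get(key, {}).pop(field, None)
            out.append("true" if was_live else "false")
        elif op == "SCAN_AT":
            (ts,) = args
            out.append(fmt(live_fields(key, int(ts))))
        elif op == "SCAN_BY_PREFIX_AT":
            prefix, ts = args
            out.append(fmt(live_fields(key, int(ts)), prefix))
    return out
```

In Example 1 the second SET_AT_WITH_TTL overwrites the first, so BC is valid over [5, 15): it is visible at timestamp 14 and gone at 15.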

Level 4: File Compression and Decompression

This level supports file compression and decompression operations:

COMPRESS_FILE <userid> <name>: Compresses a file named name owned by the user userid. The name must not be that of a compressed file (i.e., it must not end with .compressed). The compressed file is renamed to name.compressed and its size becomes half of the original (file size is guaranteed to be an even number). The compressed file is owned by userid, and the original file is removed. If the compression is successful, it returns a string of the user's remaining storage capacity; otherwise, it returns an empty string.

DECOMPRESS_FILE <userid> <name>: Decompresses a compressed file named name owned by the user userid. The name must end with .compressed. The decompressed file reverts to its original name. If the user's storage capacity is exceeded after decompression, or if an uncompressed file with the same name already exists, the operation fails and returns an empty string. If successful, it returns a string of the user's remaining capacity.

Example

queries = [
    ["ADD_USER", "user1", "1000"],
    ["ADD_USER", "user2", "5000"],
    ["ADD_FILE_BY", "user1", "/dir/file.mp4", "500"],
    ["COMPRESS_FILE", "user2", "/dir/file.mp4"],
    ["COMPRESS_FILE", "user3", "/dir/file.mp4"],
    ["COMPRESS_FILE", "user1", "/folder/non_existing_file"],
    ["COMPRESS_FILE", "user1", "/dir/file.mp4"],
    ["GET_FILE_SIZE", "/dir/file.mp4.COMPRESSED"],
    ["GET_FILE_SIZE", "/dir/file.mp4"],
    ["COPY_FILE", "/dir/file.mp4.COMPRESSED", "/file.mp4.COMPRESSED"],
    ["ADD_FILE_BY", "user1", "/dir/file.mp4", "300"],
    ["DECOMPRESS_FILE", "user1", "/dir/file.mp4.COMPRESSED"],
    ["DECOMPRESS_FILE", "user2", "/dir/file.mp4.COMPRESSED"],
    ["DECOMPRESS_FILE", "user1", "/file.mp4.COMPRESSED"]
]

The return value for each operation needs to be determined based on logical judgments such as "user existence, file ownership, storage capacity, and file existence," with the final output being the corresponding results.
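The checks listed above can be sketched as follows. This is a rough illustration under several assumptions, not the reference behavior: the `add_user` and `add_file_by` helpers are hypothetical stand-ins for operations whose specification is not reproduced here, the suffix is taken from the example (`.COMPRESSED`) while the prose spells it `.compressed`, and "remaining capacity" is assumed to be the user's quota minus the sizes of the files they own.

```python
SUFFIX = ".COMPRESSED"  # assumption: spelling taken from the example queries


class Storage:
    def __init__(self):
        self.remaining = {}  # user_id -> remaining capacity
        self.files = {}      # name -> (owner, size)

    def add_user(self, user_id, capacity):
        # Hypothetical helper; the real ADD_USER spec is not shown in the post.
        self.remaining[user_id] = capacity

    def add_file_by(self, user_id, name, size):
        # Hypothetical helper; assumes an add fails when capacity is short.
        if name in self.files or self.remaining.get(user_id, -1) < size:
            return ""
        self.files[name] = (user_id, size)
        self.remaining[user_id] -= size
        return str(self.remaining[user_id])

    def compress_file(self, user_id, name):
        entry = self.files.get(name)
        if user_id not in self.remaining or entry is None or name.endswith(SUFFIX):
            return ""
        owner, size = entry
        if owner != user_id:
            return ""
        del self.files[name]
        self.files[name + SUFFIX] = (user_id, size // 2)
        self.remaining[user_id] += size - size // 2  # half the space is freed
        return str(self.remaining[user_id])

    def decompress_file(self, user_id, name):
        entry = self.files.get(name)
        if user_id not in self.remaining or entry is None or not name.endswith(SUFFIX):
            return ""
        owner, size = entry
        original = name[: -len(SUFFIX)]
        # Doubling the size needs `size` extra units of capacity.
        if owner != user_id or original in self.files or self.remaining[user_id] < size:
            return ""
        del self.files[name]
        self.files[original] = (user_id, size * 2)
        self.remaining[user_id] -= size
        return str(self.remaining[user_id])
```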

Hiring Manager Call

This round was mostly a project deep dive and a deeply technical discussion. The hiring manager asked me many detailed questions, and I felt I needed to have a very deep understanding of the implementation details. With a little time left, we then went back to discussing the team's work.

Virtual Onsite

This stage consisted of four different interviews. By the end, I truly felt that Anthropic’s hiring bar was very high. They were deeply focused on my ability to solve practical, real-world problems.

They also had a habit of combining system design and algorithms into a single question and kept following up with edge-case scenarios in real business contexts. So just grinding LeetCode wasn’t enough for me. I also needed a deep, hands-on understanding of large-scale system design principles and optimization techniques. I had to think through the entire process, from theoretical concepts all the way to real-world implementation.

Large-Scale Distributed Systems Design

This round's core challenge was to design a distributed system to handle massive amounts of data. My task was to design a distributed search system capable of handling a billion documents and a million QPS, while also managing LLM inference for over 10,000 requests per second.

At first, I thought it would be a standard system design problem, but the interviewer kept digging into the details: How do you avoid hotspots? What's the most efficient way to merge and sort results across shards? They also pushed me on LLM-specific challenges like load balancing, auto-scaling, and GPU memory management. Every question drove me to think about production-level details, and there were several moments where I almost couldn't come up with an answer.
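For the cross-shard merge question, one common answer is a k-way merge: each shard returns its own results already sorted by score, and the coordinator lazily merges those streams and stops after k hits. Below is a small Python sketch of that idea, assuming each shard returns `(score, doc_id)` pairs in descending score order. The function name `merge_top_k` is hypothetical.

```python
import heapq
from itertools import islice


def merge_top_k(shard_results, k):
    """Merge per-shard hit lists (each sorted by score, descending) into a global top-k."""
    # heapq.merge expects ascending inputs, so order by negated score.
    merged = heapq.merge(*shard_results, key=lambda hit: -hit[0])
    # islice stops the lazy merge as soon as k hits have been produced.
    return list(islice(merged, k))
```

With S shards this does roughly O(k log S) work at the coordinator, and each shard only needs to send its own top k.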

Advanced Search & Ranking Algorithms

The focus of this round was on designing a system that provides high-quality search results. The problem required me to design a Hybrid Search system that combines traditional text retrieval with semantic similarity.

I was asked how to find the top-k similar documents from a corpus of over 10M documents with a response time under 50ms. I mentioned the LSH (Locality-Sensitive Hashing) algorithm, but I wasn't skilled enough with the implementation details, especially regarding the choice of the hash function and optimization techniques. We also discussed how to normalize different scores, tune the alpha hyperparameter, and systematically evaluate search quality. There was a pitfall in every question, and it was easy to miss key details.
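To make the score normalization and alpha discussion concrete, here is a small Python sketch of one common fusion scheme: min-max normalize each score set to [0, 1], then blend with a weight alpha. This is an illustration under assumptions, not what the interviewer prescribed. The names `min_max` and `hybrid_rank` are hypothetical, and treating a document missing from one result set as scoring 0 there is my own choice.

```python
def min_max(scores):
    """Rescale a {doc_id: score} mapping to the range [0, 1]."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # All scores equal: treat every document as a full match.
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_rank(text_scores, vector_scores, alpha=0.5, k=10):
    """Blend keyword and semantic scores; alpha weights the semantic side."""
    text_n = min_max(text_scores)
    vec_n = min_max(vector_scores)
    fused = {
        doc: alpha * vec_n.get(doc, 0.0) + (1 - alpha) * text_n.get(doc, 0.0)
        for doc in set(text_n) | set(vec_n)
    }
    # Sort by fused score descending, breaking ties by doc id for determinism.
    return sorted(fused.items(), key=lambda kv: (-kv[1], kv[0]))[:k]
```

Setting alpha to 0 gives pure text ranking and 1 gives pure semantic ranking, which is a convenient way to sweep the hyperparameter against an evaluation set.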

System Troubleshooting & Performance Debugging

This round was a classic "incident" response scenario and a deeply technical discussion. The interviewer threw me a problem: a system's 95th percentile latency had spiked from 100ms to 2000ms.

I had to quickly locate the bottleneck and prioritize optimization efforts while designing a full-stack monitoring system. The questions also extended to finding issues like race conditions and memory leaks in a buggy message queue system. This round was a huge test of my systematic thinking and hands-on experience; you really had to have a deep understanding of the underlying implementation to succeed.
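A useful first step in this kind of scenario is to compute the percentile per stage, because a tail-latency spike can hide behind a healthy-looking median. The sketch below uses the nearest-rank definition of a percentile; the stage names in the usage example and the function names `percentile` and `slowest_stage` are hypothetical.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def slowest_stage(stage_samples, p=95):
    """Return the stage whose p-th percentile latency is highest."""
    return max(stage_samples, key=lambda stage: percentile(stage_samples[stage], p))
```

For example, with 90 requests at 100ms and 10 at 2000ms, `percentile(latencies, 50)` is still 100 while `percentile(latencies, 95)` is 2000, which matches the kind of spike described above.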

Large-Scale Data Processing & Indexing

The final round centered on the starting point of the data pipeline: how to handle the ingestion and indexing of a billion documents. This topic was closely tied to designing a concurrent Web Crawler.

I had to consider how to build an efficient crawler that could handle robots.txt, rate limiting, and circular references, while ensuring the entire data pipeline was scalable, consistent, and fault-tolerant. The core challenge was guaranteeing data integrity and freshness, especially if a node were to suddenly go down. This stage was a comprehensive test of my complete system design knowledge, leaving no room for shortcuts.
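The three crawler concerns named above can be shown in a compact single-threaded sketch: a visited set breaks circular references, `urllib.robotparser` answers robots.txt questions, and a per-host timestamp enforces a minimum delay. Everything here is illustrative. `fetch_links` is a hypothetical callable that returns the absolute links found on a page, and a single robots.txt policy is assumed for all URLs. A concurrent version would additionally need to guard `seen` and `last_hit` with a lock or shard them by host.

```python
import time
from collections import deque
from urllib.parse import urldefrag, urlparse
from urllib.robotparser import RobotFileParser


def crawl(seed, fetch_links, robots, user_agent="sketch-bot",
          max_pages=100, min_delay=0.0):
    """Breadth-first crawl that honors robots rules and a per-host delay."""
    start = urldefrag(seed).url  # strip #fragments so page variants dedupe
    seen = {start}
    frontier = deque([start])
    last_hit = {}  # host -> monotonic time of the last request
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        if not robots.can_fetch(user_agent, url):
            continue
        host = urlparse(url).netloc
        wait = last_hit.get(host, float("-inf")) + min_delay - time.monotonic()
        if wait > 0:
            time.sleep(wait)  # simple politeness delay per host
        last_hit[host] = time.monotonic()
        visited.append(url)
        for link in fetch_links(url):
            link = urldefrag(link).url
            if link not in seen:  # the seen set is what stops cycles
                seen.add(link)
                frontier.append(link)
    return visited
```

Marking a URL as seen when it is enqueued, not when it is fetched, keeps the frontier from filling up with duplicates of the same page.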

Anthropic Software Engineer Interview Questions

When preparing for my interviews, I also compiled some experiences from others and combined them with the questions I was asked. I'm including them here to provide a more comprehensive overview.

Culture Fit

The cultural fit questions were primarily based on Anthropic's core values, with a significant focus on safety. The questions I was asked include:

Tell me about a time when you made a safety-related decision in a project.

Sample Answer: I worked on a new payment API for an e-commerce platform with a tight deadline. The original design used a cache to boost performance, but I identified a security risk: a data breach of the cache could expose sensitive user information.

I decided to prioritize safety over speed. I presented a detailed analysis of the risk to my team and proposed an alternative, more secure architecture that bypassed the cache.

The team agreed with my assessment. We adopted my solution, and the project launched successfully with no security issues. This experience taught me the importance of prioritizing user safety and security, even at the cost of immediate performance gains.

Can you describe your understanding of Anthropic's core mission and how it influences its approach to building AI systems?

Sample Answer: Anthropic's core mission is to build reliable, interpretable, and steerable AI systems. This mission is deeply rooted in a commitment to AI safety and ensuring that increasingly capable and sophisticated AI systems are aligned with human values and welfare. Unlike some other AI companies that might prioritize raw capability or rapid deployment above all else, Anthropic places a strong emphasis on understanding and controlling AI behavior to prevent unintended consequences.

How does Anthropic's focus on AI safety and interpretability differ from other major AI labs, and what are the system design implications of this focus?

Sample Answer: Anthropic's focus on AI safety and interpretability is a key differentiator from many other major AI labs. While most labs acknowledge the importance of safety, Anthropic has made it a central pillar of their identity and research from the outset.

Behavioral Interview

A particularly memorable question I was asked was about a time when a technical misjudgment led to a project delay. I shared a story about a failed algorithm optimization. The interviewer's follow-up question was about what lessons I learned from the experience.

While the question was quite broad, I felt this was an advantage. It allowed me to fill in more details about my technical abilities and soft skills that I wanted the interviewer to know. I was able to discuss some of the improvement plans and follow-up actions I had considered during my project post-mortem.

Here are some additional behavioral questions for reference:

What's your perspective on AI's risks?

What's your biggest concern about AI?

What would you do if, midway through a project, you realized it was actually unfeasible or couldn't be completed?

Anthropic Software Engineer Interview Strategies

Anthropic interviewers care about how I think and communicate, not just the final answer. I made sure to talk through my approach. If I got stuck, I explained my reasoning and asked clarifying questions. This helped me show my problem-solving process.

Anthropic Software Engineer Interview Answering Strategies

Here’s what worked for me:

I used real stories from my past projects. I described what I did, why I did it, and what I learned.

I avoided generic answers. Anthropic doesn’t want textbook responses. They want to see how I handle real challenges.

I focused on clear communication. I explained my code and design choices step by step. If I made a mistake, I owned it and corrected myself.

I showed genuine motivation for working at Anthropic. I talked about my interest in responsible AI and how I align with their mission.

I used the STAR method (Situation, Task, Action, Result) for behavioral questions. This helped me stay organized and concise.

Anthropic Software Engineer Interview Preparation Tips

Getting ready for the Anthropic software engineer interview took more than just coding practice. I wanted to cover all my bases, so I built a routine that helped me feel confident and prepared.

Here’s my step-by-step approach:

Identify Key Skills

I started by reviewing the job description. I made a list of the technical skills Anthropic wanted, like Python, system design, and AI safety.

Utilize Online Resources

I practiced coding problems on LeetCode. I also took Coursera courses to brush up on algorithms and data structures.

Work on Real-World Projects

I contributed to open-source projects and built a small AI tool on my own. These experiences gave me stories to share during the interview.

Stay Current

I read blogs, joined webinars, and attended meetups to keep up with industry trends.

FAQ

How is the Anthropic interview different from a typical FAANG-style interview?

The biggest difference is the shift from rote pattern recognition to practical, real-world problem-solving. While traditional interviews often test your ability to solve a single, isolated problem, Anthropic's questions are layered and have extensive follow-ups. You're not just expected to know a LeetCode pattern; you must understand the underlying principles and be able to apply them to complex, open-ended scenarios.

What technical areas should I focus on most when preparing?

Based on my experience, you should have a systematic and in-depth understanding of:

Large-Scale Distributed Systems: How to design systems for massive scale, including topics like sharding, load balancing, and fault tolerance.

Data Processing & Indexing: The full pipeline, from data ingestion (e.g., crawlers) to building efficient search indexes.

Performance & Optimization: How to profile, debug, and optimize a system, especially for issues like high latency or memory leaks.

AI/ML Concepts: You don't need to be a research scientist, but a solid grasp of concepts like LLM serving, GPU memory management, and modern search algorithms (e.g., hybrid search, semantic similarity) is crucial.

What's the key to succeeding in the multi-level coding challenges?

The main challenge is to write extensible and robust code from the start. Each new level adds complexity and requires you to modify your existing solution. Instead of just solving the first problem, you should think about how your design can adapt to future requirements. It's a test of your ability to write clean, modular code that can handle new features and edge cases without needing a complete rewrite.
