My 2026 Cohere Interview Process and Actual Questions I Faced

Preparing for a Cohere interview in 2026 can be stressful: compared with big tech companies, far fewer candidates share their experiences online, so there is little to reference. In this article, I detail my own Cohere interview process, break down each stage, and share the real Cohere interview questions I encountered along the way.

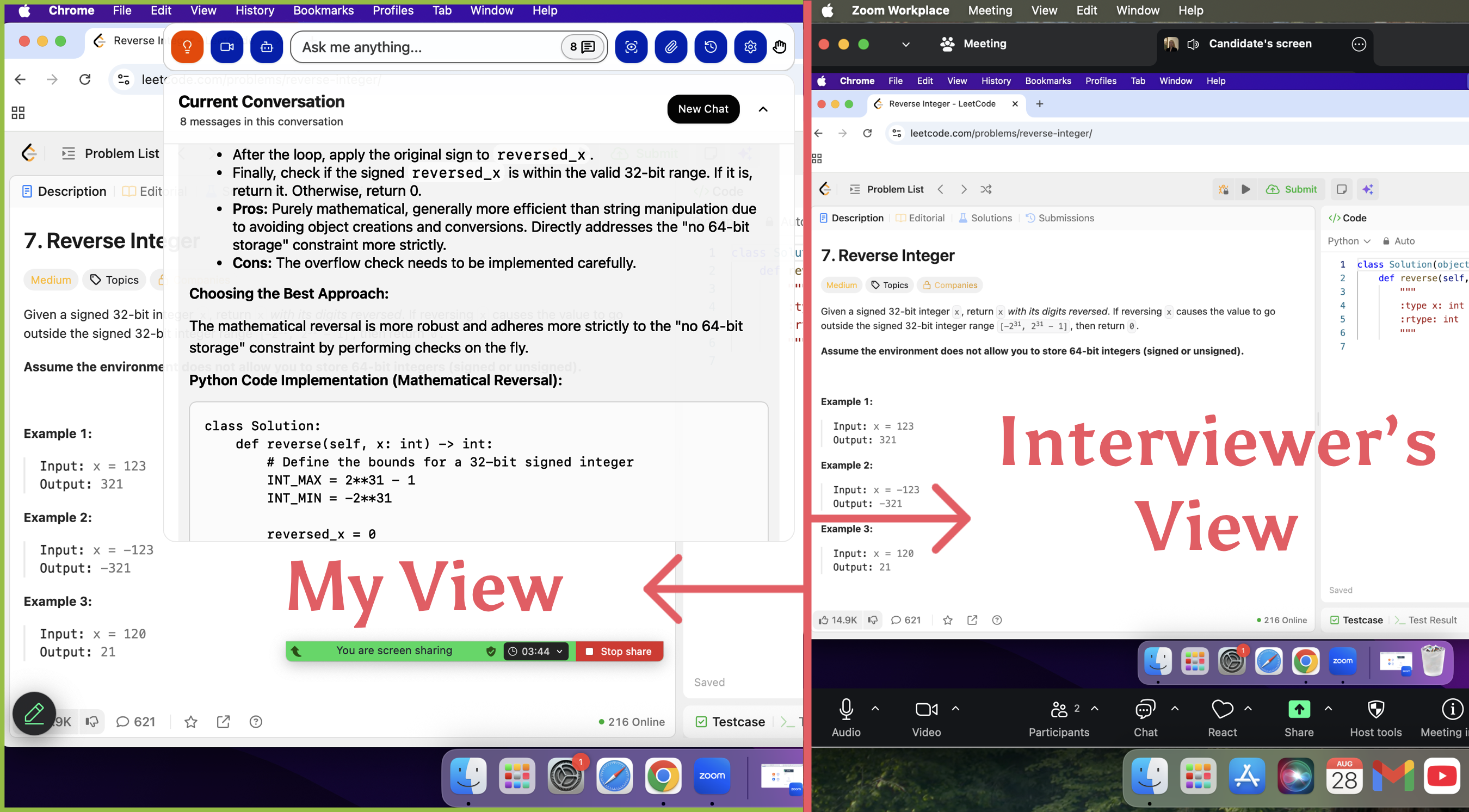

I’m really grateful to Linkjob.ai for helping me pass my interview, which is why I’m sharing my Cohere interview process and questions here. Having an undetectable AI coding interview copilot during the interview indeed provides a significant edge.

Cohere Interview Process and Questions

I applied online for the AI Engineer position at Cohere, and the entire interview process lasted about four weeks. Overall, it was intense but highly targeted, with each stage assessing different skills: technical ability, AI application, research capability, and behavioral fit. The early stages covered fairly broad industry and LLM knowledge, and the questions narrowed to more specific concepts as the process continued.

HR Screen

The HR call was the first stage, primarily aimed at understanding my background and motivation:

They asked why I chose Cohere and my understanding of the company.

We went into detail about my past project experience, technical stack, and experience in artificial intelligence.

They also asked some behavioral questions, such as how I handle team conflicts, face challenges, and make decisions in projects.

This stage gave me a clear view of the company culture and hiring expectations, and a chance to demonstrate my communication skills and professional motivation.

OA

The OA was the second stage: one hour, three coding problems. It primarily tested algorithmic thinking, logical reasoning, and coding accuracy, and each solution had to run correctly within the time limit. One problem I encountered:

Problem Description:

You are given a string S of length N which encodes a non-negative number V in binary form. Two types of operations may be performed on it to modify its value:

if V is odd, subtract 1 from it;

if V is even, divide it by 2.

These operations are performed until the value of V becomes 0.

For example, if string S = "011100", its value V initially is 28. The value V would change as follows:

V = 28, which is even: divide by 2 to obtain 14;

V = 14, which is even: divide by 2 to obtain 7;

V = 7, which is odd: subtract 1 to obtain 6;

V = 6, which is even: divide by 2 to obtain 3;

V = 3, which is odd: subtract 1 to obtain 2;

V = 2, which is even: divide by 2 to obtain 1;

V = 1, which is odd: subtract 1 to obtain 0.

Seven operations were required to reduce the value of V to 0.

Write a function:

def solution(S)

that, given a string S consisting of N characters containing the binary representation of the initial value V, returns the number of operations after which its value will become 0.
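One way to solve this without simulating: after stripping leading zeros, every 1 bit costs one subtraction and every bit after the most significant one costs one division by 2. The sketch below is my own reconstruction of that counting approach, not an official solution; it works on the string directly, so it stays O(N) even if S is too long to convert to an integer cheaply.

```python
def solution(S: str) -> int:
    # Leading zeros do not change the value V.
    bits = S.lstrip("0")
    if not bits:
        return 0  # V is already 0, no operations needed
    # Each '1' bit needs one "subtract 1"; each bit below the most
    # significant one needs one "divide by 2".
    return bits.count("1") + len(bits) - 1
```

For S = "011100", the stripped string "11100" has three 1 bits and four bits below the leading one, giving 3 + 4 = 7, which matches the walk-through above.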

I used Linkjob AI for the online assessment part. The application worked great and I got through my interview without a hitch. It’s also undetectable, I used it and didn't trigger any detection.

Take-Home Assessment (Case Study)

The Take-Home Assessment was the third stage, with a 48-hour window. Key points:

I was given one or two prompts and had to demonstrate my problem-solving thought process and exploratory analysis.

The focus was on analytical thinking, engineering judgment, and communication skills: not just solving the problem, but explaining why I chose a particular method and what its potential optimizations or limitations were.

The work could be presented as a notebook, report, or demo, with an emphasis on clarity and explainability.

The difficulty was less about algorithms and more about organizing thoughts, presenting a clear solution, and producing high-quality analysis under time constraints.

VO

The VO was the core stage, consisting of multiple technical and ML-focused rounds:

Coding Round

Implemented top_k LLM token decoding and similar algorithms.

The style was collaborative, emphasizing discussion and problem-solving, not just code correctness.
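For anyone preparing for the same round, here is a minimal sketch of top-k sampling over a single step's logits: keep the k highest-scoring tokens, apply a temperature-scaled softmax to them, and sample one. The function name and signature are my own assumptions, not what the interviewers asked for verbatim, and it uses only the standard library.

```python
import heapq
import math
import random
from typing import Optional, Sequence


def top_k_sample(logits: Sequence[float], k: int, temperature: float = 1.0,
                 rng: Optional[random.Random] = None) -> int:
    """Sample one token id from the k highest logits (top-k sampling)."""
    if k <= 0 or temperature <= 0:
        raise ValueError("k and temperature must be positive")
    rng = rng or random.Random()
    # Keep the k (token_id, logit) pairs with the largest logits.
    top = heapq.nlargest(k, enumerate(logits), key=lambda pair: pair[1])
    ids = [token_id for token_id, _ in top]
    scaled = [logit / temperature for _, logit in top]
    # Softmax over the survivors, shifted by the max for numerical stability.
    m = max(scaled)
    weights = [math.exp(x - m) for x in scaled]
    total = sum(weights)
    probs = [w / total for w in weights]
    return rng.choices(ids, weights=probs, k=1)[0]
```

With k=1 this reduces to greedy (argmax) decoding, which is a useful sanity check to mention during the discussion.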

ML Design

Design a mechanism for an LLM-based system that allows it to answer questions about events or information that emerged after its training cutoff, while maintaining reliability and transparency. For example, the model should be able to:

Integrate real-time retrieval from trusted sources (RAG or web APIs).

Decide when to defer or clarify if the information is uncertain.

Explain to the user why it can or cannot answer a question.

Requirements:

Describe the system architecture and components (retrieval module, reasoning module, response validation).

Discuss trade-offs such as latency, accuracy, and hallucination prevention.

Consider user experience: how the model communicates uncertainty or cites sources.
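The "answer, cite, or defer" part of the design can be sketched as a small gate between retrieval and generation. This assumes the retrieval module returns passages with a relevance score in [0, 1] and that `generate` is a hypothetical callable wrapping the LLM; `Passage`, `Response`, `answer_or_defer`, and the 0.5 threshold are illustrative choices, not Cohere's design.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Passage:
    text: str
    source: str   # e.g. a URL or document id from a trusted source
    score: float  # retrieval relevance score, assumed to be in [0, 1]


@dataclass
class Response:
    answer: str
    citations: List[str]
    deferred: bool


def answer_or_defer(question: str, passages: List[Passage],
                    generate: Callable[[str, List[Passage]], str],
                    min_score: float = 0.5) -> Response:
    # Keep only evidence the retriever is reasonably confident about.
    evidence = [p for p in passages if p.score >= min_score]
    if not evidence:
        # Nothing trustworthy was retrieved: explain instead of guessing.
        return Response(
            answer=("I couldn't find reliable, up-to-date sources for this, "
                    "so I can't answer it confidently."),
            citations=[], deferred=True)
    answer = generate(question, evidence)
    # Cite each grounding source once, preserving retrieval order.
    citations = list(dict.fromkeys(p.source for p in evidence))
    return Response(answer=answer, citations=citations, deferred=False)
```

In a fuller design, the response-validation module would sit after `generate` and could also trigger a deferral if the answer is not supported by the cited passages.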

Paper Reading & Deep Dive

This is where I struggled.

I prepared as if for a reading group, planning to explain the paper to a non-expert audience, but the interviewers already knew the paper well and skipped my slides.

They focused on the paper's limitations, its experiment design, and the applicability of its results.

This highlighted the importance of research depth and critical analysis.

Hiring Manager / Behavioral Questions (HM BQ)

Standard behavioral questions, with an emphasis on applied LLM experience.

Evaluated communication, teamwork, and problem-solving in practical projects.

Cohere Interview Questions

Coding Round

Question 1:

Implement a function that takes a stream of strings and removes duplicates in real time, without storing the entire stream in memory.

Sample Answer (Python):

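```python
def unique_stream(stream):
    """Lazily yield each string the first time it appears in the stream."""
    seen = set()  # holds one entry per distinct string, not per stream item
    for s in stream:
        if s not in seen:
            seen.add(s)
            yield s
```

Because this is a generator, it processes the stream one item at a time and never holds the whole stream; memory grows only with the number of distinct strings. If that is still too much, a bounded structure such as a fixed-size window of recent strings or a Bloom filter caps memory at the cost of exact deduplication.

Question 2: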

Design a function that finds the longest substring without repeating characters in a given string.

Sample Answer (Python):

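```python
def longest_unique_substring(s):
    """Return the length of the longest substring of s with no repeated characters."""
    char_index_map = {}  # last index at which each character was seen
    start = max_len = 0  # start of the current window, best length so far
    for end, char in enumerate(s):
        # If char already appears inside the current window, move the window past it.
        if char in char_index_map and char_index_map[char] >= start:
            start = char_index_map[char] + 1
        char_index_map[char] = end
        max_len = max(max_len, end - start + 1)
    return max_len
```

System Design Questions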

Question 1:

Design a URL shortening service like bit.ly. Discuss the system components and how you would handle scalability.

Sample Answer:

Components:

Frontend: Accepts long URLs and returns shortened versions.

Backend: Maps long URLs to short codes and stores them in a database.

Database: Stores the mapping between short codes and long URLs.

Scalability Considerations:

Use a hashing algorithm (with collision checks) or an ID-based encoding to generate unique short codes.

Implement caching to reduce database load.

Use load balancing to distribute traffic evenly across servers.
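Hashing needs collision handling, so a common alternative is to base62-encode an auto-incrementing ID, which yields short, collision-free codes. A minimal in-memory sketch of that alternative; `Shortener`, `base62`, and the counter standing in for a database sequence are hypothetical, and a real service would put the database and cache behind these methods.

```python
import itertools
from typing import Dict

ALPHABET = "0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ"


def base62(n: int) -> str:
    """Encode a non-negative integer using the 62-character alphabet above."""
    if n == 0:
        return ALPHABET[0]
    digits = []
    while n:
        n, r = divmod(n, 62)
        digits.append(ALPHABET[r])
    return "".join(reversed(digits))


class Shortener:
    """In-memory stand-in for the backend plus database (hypothetical)."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)  # stands in for a DB sequence or ID service
        self._code_to_url: Dict[str, str] = {}
        self._url_to_code: Dict[str, str] = {}

    def shorten(self, url: str) -> str:
        # Reuse the existing code so one long URL maps to one short code.
        if url in self._url_to_code:
            return self._url_to_code[url]
        code = base62(next(self._ids))
        self._code_to_url[code] = url
        self._url_to_code[url] = code
        return code

    def resolve(self, code: str) -> str:
        return self._code_to_url[code]  # raises KeyError for unknown codes
```

Seven base62 characters already cover about 3.5 trillion IDs, which is why this scheme keeps codes short; the read-heavy `resolve` path is what the cache in front of the database would serve.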

Question 2:

Design a system to detect fraudulent transactions in real-time. What components would you include and how would you ensure low latency?

Sample Answer:

Components:

Data Ingestion: Collect transaction data in real-time.

Feature Extraction: Extract relevant features from the transaction data.

Model Inference: Use a machine learning model to predict fraud.

Alerting System: Notify relevant parties if fraud is detected.

Low Latency Considerations:

Use an event streaming platform such as Apache Kafka together with a stream processor such as Apache Flink.

Optimize model inference time.

Implement caching for frequently accessed data.
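One low-latency piece of the feature-extraction step is a per-card "velocity" feature: how many transactions a card made in the last N seconds. A minimal in-memory sketch, assuming events arrive in timestamp order per card; `VelocityTracker` and the 60-second window are hypothetical, and in production this state would typically live in the stream processor (for example, Flink keyed state) rather than a Python dict.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityTracker:
    """Per-card count of transactions inside a sliding time window."""

    def __init__(self, window_seconds: float = 60.0) -> None:
        self.window = window_seconds
        self.events: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, card_id: str, ts: float) -> int:
        """Record a transaction and return the count in the window (ts - window, ts]."""
        q = self.events[card_id]
        q.append(ts)
        # Evict timestamps that slid out of the window; amortized O(1) per call.
        while q and q[0] <= ts - self.window:
            q.popleft()
        return len(q)
```

Each `observe` call does amortized constant work, which keeps per-transaction latency low; the returned count would be one input to the model-inference step.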

AI/ML-Specific Questions

Question 1:

How would you implement a system where an LLM answers questions about events that occurred after its training cutoff?

Sample Answer:

Approach:

Implement a retrieval-augmented generation (RAG) system.

Use a retriever to fetch relevant documents.

Use a generator to produce answers based on the retrieved documents.

Challenges:

Ensuring the retriever fetches relevant documents.

Handling conflicting information in the retrieved documents.

Maintaining the coherence and relevance of the generated answers.
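A toy sketch of the retriever and prompt-assembly steps, using bag-of-words cosine similarity as a stand-in for a real embedding model or search index (an assumption for illustration; `retrieve` and `build_prompt` are hypothetical helpers). Numbering the passages in the prompt lets the generator cite them and makes conflicting sources visible to the user.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def _vectorize(text: str) -> Counter:
    # Lowercased word counts; a stand-in for a learned embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k documents most similar to the query, best first."""
    q = _vectorize(query)
    scored = [(_cosine(q, _vectorize(d)), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(query: str, hits: List[Tuple[float, str]]) -> str:
    # Number each passage so the generator can cite it as [1], [2], ...
    context = "\n".join(f"[{i}] {doc}" for i, (_, doc) in enumerate(hits, 1))
    return ("Answer using only the sources below and cite them by number. "
            "If they do not contain the answer, say so.\n\n"
            f"{context}\n\nQuestion: {query}")
```

The returned prompt would then go to the generator; swapping `_vectorize` for a real embedding model changes retrieval quality but not the overall shape of the pipeline.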

Question 2:

You are building a batch inference pipeline for embedding sequences, subject to a maximum token count and a maximum batch size per request. How would you optimize throughput?

Sample Answer:

Approach:

Split sequences into sub-batches that respect the max token and batch size limits.

Use asynchronous processing to process multiple sub-batches concurrently.

Implement caching for repeated sequences to reduce redundant computation.

Tools:

Use TensorFlow Serving or TorchServe for serving the model.

Use Apache Kafka for managing the data pipeline.
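The sub-batching step can be sketched as a greedy packer. This assumes the token limit is charged on the padded batch (batch size times the longest sequence in it) and that only sequence lengths are needed to plan batches; `make_batches` is a hypothetical helper. Sorting by length first groups similar lengths together, which reduces wasted padding compute.

```python
from typing import List


def make_batches(lengths: List[int], max_tokens: int,
                 max_batch_size: int) -> List[List[int]]:
    """Group sequence indices into batches that respect both limits.

    Assumes the padded cost len(batch) * longest_length must be <= max_tokens.
    """
    # Ascending length order minimizes padding inside each batch.
    order = sorted(range(len(lengths)), key=lambda i: lengths[i])
    batches: List[List[int]] = []
    current: List[int] = []
    for i in order:
        n = lengths[i]
        if n > max_tokens:
            raise ValueError(f"sequence {i} has {n} tokens, above max_tokens={max_tokens}")
        # Because of the sort, n is the longest length if i joins `current`.
        if current and (len(current) + 1 > max_batch_size
                        or (len(current) + 1) * n > max_tokens):
            batches.append(current)
            current = []
        current.append(i)
    if current:
        batches.append(current)
    return batches
```

Returning original indices rather than sequences lets the pipeline scatter each embedding back to its input position after the sub-batches run concurrently.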

Behavioral Interview Questions

Question 1:

Tell me about a time you faced a major challenge in a project and how you overcame it.

Sample Answer:

In a previous project, our model training pipeline kept failing due to memory bottlenecks. I analyzed the workflow, identified unnecessary data duplication, and implemented a streaming data loader. This reduced memory usage by 40%, allowing the training to complete successfully on time.

Question 2:

Describe a situation where you had to collaborate with a team member who had a different approach than you.

Sample Answer:

During a multi-team project, a teammate preferred a heuristic-based solution while I advocated for a data-driven approach. We conducted a small-scale comparison experiment, evaluated the results, and ultimately combined both strategies to achieve a solution that was both efficient and accurate.

Overall Summary of Cohere Interview

The Cohere interview process reflects the company’s focus on AI research and application while maintaining a standard structure similar to other tech firms. Candidates typically go through a complete process, from Online Assessment (OA) to Virtual Onsite (VO), including coding, system design, and AI-specific challenges. Unlike some research-heavy companies, Cohere places a strong emphasis on whether candidates can apply AI technology to real-world product environments.

Cohere Company Culture

Cohere’s company culture emphasizes innovation, collaboration, and practical AI applications. At the core of their technology are large language models based on the Transformer architecture, trained on supercomputers to deliver natural language processing solutions. Their main product lines include embedding, summarization, semantic search, text generation, reranking, and classification, reflecting a strong focus on real-world AI applications.

During interviews, this focus on applied AI is evident: discussions often revolve around how to implement, optimize, or integrate these models into products rather than purely theoretical exercises. The interview environment encourages open problem-solving, collaborative discussion, and practical thinking, mirroring the company’s culture of building impactful AI tools while working closely with cross-functional teams.

Roles and Skills They Look For

Cohere primarily hires for the following key roles:

Software Engineer (SWE): Focused on building and optimizing the infrastructure and system architecture for large language models (LLMs), ensuring efficient performance in production environments.

AI Engineer: Responsible for applying Cohere’s LLMs to real-world scenarios, including text generation, semantic search, summarization, and other NLP functionalities, as well as optimizing these applications.

Research Scientist: Engages in cutting-edge machine learning and natural language processing research, exploring areas such as multilingual models, multimodal learning, and inference efficiency to drive AI innovation.

Platform Engineer: Develops and maintains Cohere’s AI platform, supporting model training, deployment, and inference services while ensuring stability and scalability.

Product Manager: Defines and drives product strategy, coordinates cross-functional collaboration, and ensures products meet user needs and deliver business value.

Sales Engineer: Works closely with clients to understand business requirements, provides technical support and solutions, and facilitates adoption of Cohere’s products.

The interviews for these roles typically focus on:

Technical Skills: Including programming, algorithm design, and system architecture.

AI Application Skills: How to apply LLM technology to solve real-world problems.

Research Abilities: Innovation and research capabilities in machine learning and NLP.

Cross-Team Collaboration: Communication and collaboration with different functional teams.

Overall Difficulty and Style

The overall difficulty of Cohere interviews is moderate to high, similar in style to other AI-focused companies like OpenAI and Anthropic. Key differences include:

Not about tricky algorithm puzzles: Emphasis is on real-world engineering problems, such as handling data streams or optimizing inference latency.

Practical style: Interviewers care more about whether you can propose feasible solutions rather than theoretical perfection.

AI-focused: For AI Engineer or Research Scientist roles, you are very likely to encounter questions specific to NLP or large language models.

In other words, Cohere interviews are not the hardest, but they expect candidates to balance coding skills, system design thinking, and AI application experience.

FAQ

Is Linkjob AI undetectable during technical rounds?

It’s a desktop app with OS-level integration, so it only shows up on my own screen. Screen sharing and active tab detection can't see it at all. So it could keep staying undetectable during technical rounds.

What tools did you use to prepare for the coding rounds?

Since the coding rounds are the most important part of the interview, I used a combination of traditional coding practice websites and AI mock interview platforms to comprehensively prepare for the types of problems that might appear. Ultimately, I mainly solved Python and SQL problems on LeetCode and practiced real interview questions on Linkjob.

What questions did you ask the interviewers?

I asked about their favorite projects, team culture, and how they use AI in real products. I wanted to know what challenges they face and what they enjoy most about working at Cohere.
