My Insider Roadmap to Acing the Gemini Machine Learning Interview in 2025

Google’s Machine Learning interview is truly challenging. The questions are unique to Google, tough to answer, and cover a wide range of topics.

The good news is, with the right preparation, you can make a huge difference and land an ML job at Google. I’ve already organized everything you need in this article.

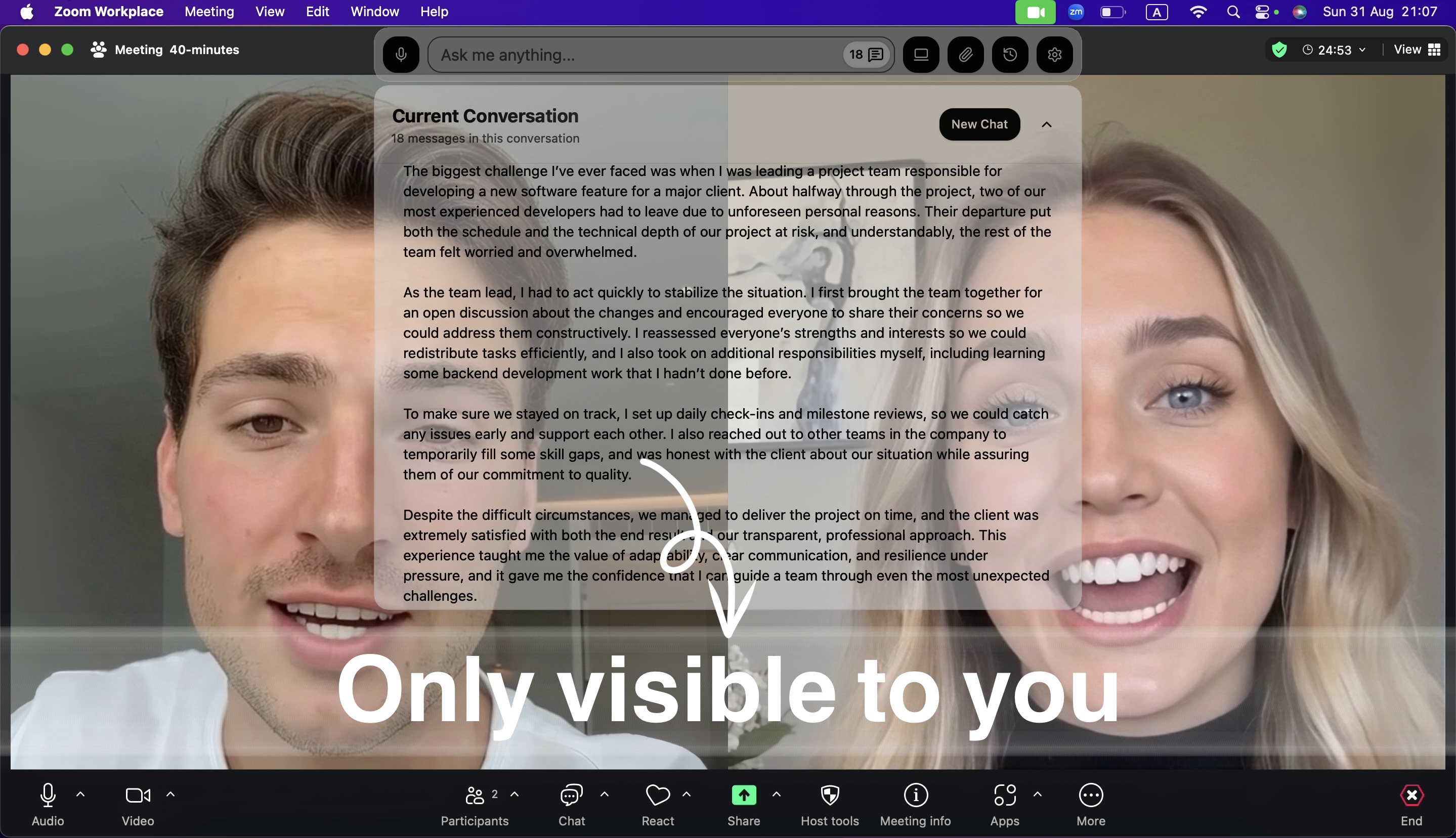

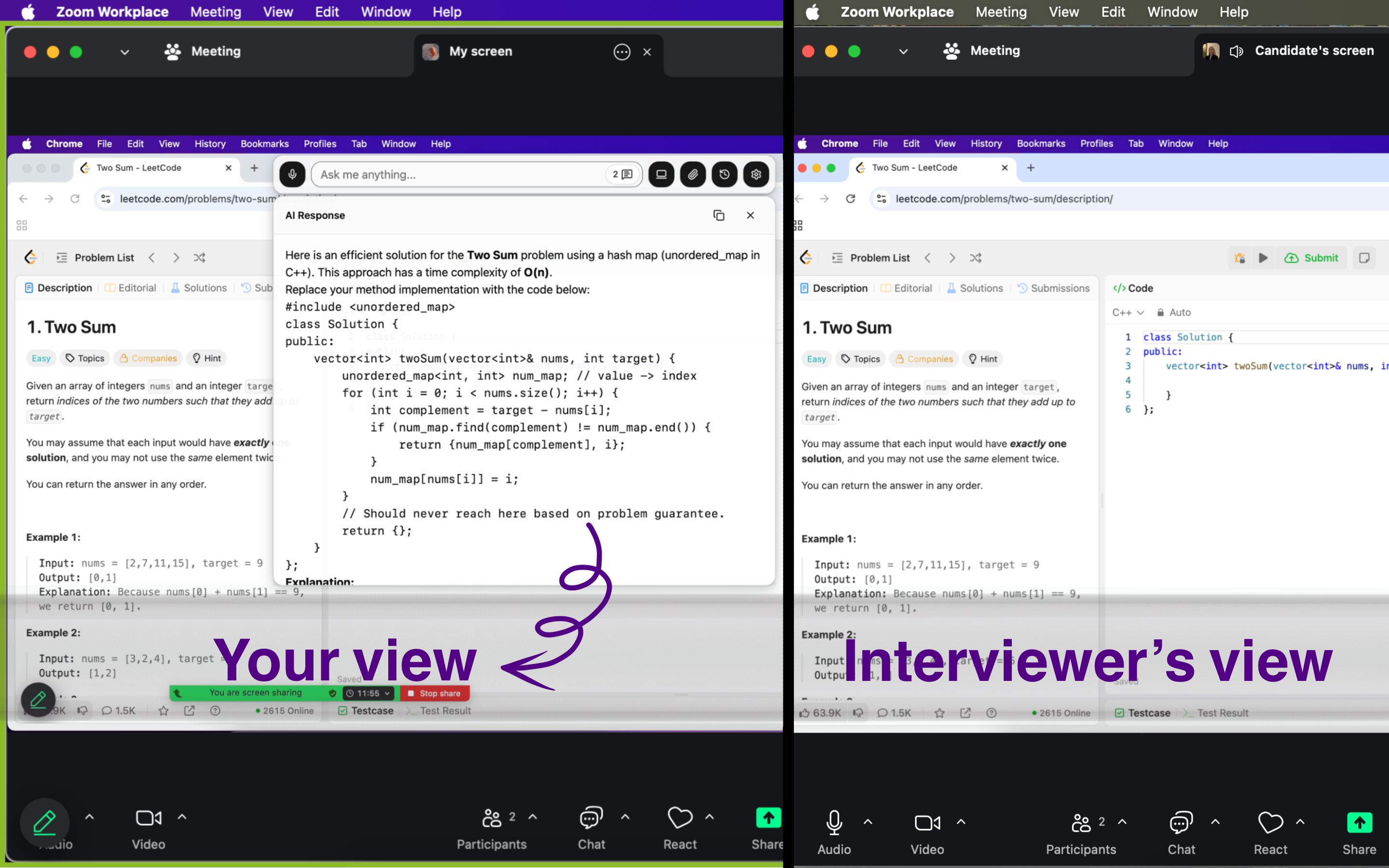

Thanks to Linkjob, I barely made it through the interview. When I ran into tough coding problems, I could screenshot them and let the AI help me answer. Plus, tests from my friends showed that during screen sharing, the answers were only visible to me, which made me feel much more at ease.

Gemini Machine Learning Interview Process

I transitioned from DS to MLE, and since I wasn’t very familiar with this role, I basically bet all my energy on perfecting every step of the process to maximize my chance of passing.

Because the JD mentioned “prefer candidates with multimodal model experience,” I added a self-learned Gemini API tuning project to my resume to align with the role. Later, I received an email from HR saying my resume had passed the screening, and I was scheduled for a Tech Screening five days later.

Tech Screening

The focus was mainly on coding and ML knowledge. I had prepared two weeks in advance by practicing LeetCode medium questions under the Google tag, and luckily, this preparation helped me clear the round.

Coding

The question was medium difficulty: binary tree level order traversal, requiring me to output the values of nodes at each level. I solved it using BFS.

ML fundamentals

One of the questions I was asked was:

“What’s the difference between model parallelism and data parallelism in Gemini’s distributed training?”

I answered that data parallelism splits batches, while model parallelism splits parameters. I explained that Gemini 175B uses hybrid parallelism, and even gave an example of tensor parallelism splitting linear layers. But since I had transitioned from DS, I felt like my answer was still missing some depth. Then, with the help of real-time suggestions from Linkjob, I added more details on distributed training.

Later, the interviewer gave me feedback saying my coding approach was very clear and even praised me for how well I supplemented the details on ML fundamentals.

Onsite

This stage consisted of two parts. The first part included a coding round (two questions, 45 minutes each) and a system design round (45 minutes). The second part was behavioral questions plus business case questions. Below are the questions I encountered.

Coding 1: Multimodal Data Processing

The task was to write a function that pads text sequences in a batch of multimodal data and resizes images. Text sequences were to be padded with 0 up to the longest sequence length, and images resized to a uniform 224×224 resolution.

Coding 2: Inference Optimization

Implement a simple KV cache for Gemini’s inference, using a dictionary to store key-value pairs to avoid redundant computation.

System Design

Design a model compression pipeline for Gemini Nano (mobile deployment), aiming for 10× compression with less than 5% accuracy loss. My advice for this round: focus on building a clear framework, fill in with fundamental methods, and don’t pretend to know unfamiliar technologies.

Behavioral Questions

These assess Google’s four core values: user focus, learning agility, collaboration, and bias for action. Fresh graduates can reference school projects, self-learning experiences, etc. In my round, I was asked: “How do you quickly learn a new technical skill?” (corresponding to learning agility), probably because the interviewer saw that I previously worked in DS.

Business Case Question

How would you detect harmful content in Gemini’s multimodal outputs?

I answered with three points:

Use pre-trained toxicity models (e.g., BERT-for-toxic) for text detection.

Use CNNs to detect violent or explicit content in images.

Perform cross-modal checks to ensure consistency between text and images (e.g., text says “safe” but the image contains violent content).

Team Match

After about a month of interviews, I finally received the results. HR gave positive feedback overall, and I moved on to the team matching stage.

Thankfully, my AI interview assistant’s answers weren’t visible during screen sharing. Without it, I probably would have failed the Technical Screening early on.

Gemini Machine Learning Interview Questions and Answers

Here are some categorized interview questions and strategies I gathered while preparing for my interview.

LLM Architecture & Training

Question 1: Transformer Architecture Optimization

Explain how you would optimize the attention mechanism in a Transformer model for processing sequences longer than 100K tokens. What are the computational and memory trade-offs?

Core Examination Points:

Attention complexity: O(n²) scaling problem

Solutions: Sparse attention, sliding window, hierarchical attention

Memory optimization: Gradient checkpointing, mixed precision training

Practical implementation considerations

In-depth Answer Key Points:

Linear Attention: Reduce attention complexity to O(n) using kernel methods

Flash Attention: Decrease HBM access through memory-efficient implementation

Ring Attention: Distributed long-sequence processing to support extremely long context

Trade-offs: Analysis of accuracy vs. efficiency and applicable scenarios of different methods

Question 2: Scaling Laws and Model Size

Google's Gemini models range from Nano to Ultra. How would you determine the optimal model size for a given computational budget and performance target?

Key Knowledge Points:

Chinchilla scaling laws: Compute-optimal training

Relationship between model size and training data

Considerations for inference cost

Performance saturation points

Practical Applications:

Budget allocation: Distribute compute between model parameters and training tokens

Pareto frontier: Identify the optimal balance between performance and efficiency

Deployment constraints: Consider latency, throughput, and memory requirements

Evaluation metrics: Performance trade-offs across different downstream tasks

Multimodal ML Questions

Question 1: Vision-Language Integration

Design the architecture for processing both text and images in Gemini. How would you handle the different modalities and ensure effective cross-modal understanding?

Architecture Design Key Points:

Tokenization strategy: How to convert visual information into tokens

Modality fusion: Early fusion vs. late fusion approaches

Attention mechanisms: Cross-modal attention design

Training objectives: Contrastive learning, masked modeling

In-depth Discussion:

Vision Encoder: ViT vs. CNN-based approaches

Alignment: How to align visual and textual representations

Efficiency: Computational challenges in processing high-resolution images

Generalization: Ensure model robustness across different visual domains

Question 2: Multimodal Training Data

How would you design a data pipeline for training a multimodal model like Gemini? What are the key challenges in handling diverse data types at scale?

Data Pipeline Considerations:

Data diversity: Balanced sampling of text, images, videos, and audio

Quality control: Quality assessment of multimodal data

Scalability: Petabyte-scale data processing

Privacy: Handling of sensitive multimodal content

Technical Implementation:

Distributed processing: Use Apache Beam and Dataflow for large-scale data processing

Format standardization: Unify data formats across different modalities

Deduplication: Cross-modal duplicate detection

Bias mitigation: Ensure fairness and representativeness of training data

System Design for Large Models

Question 1: Distributed Training Architecture

Design a distributed training system for a 100B+ parameter multimodal model. How would you handle model parallelism, data parallelism, and fault tolerance?

System Architecture Key Points:

Model Parallelism: Tensor parallelism, pipeline parallelism

Data Parallelism: Gradient synchronization strategies

Memory Management: Activation checkpointing, offloading

Fault Tolerance: Checkpointing, recovery mechanisms

Specific Implementation:

3D Parallelism: Combine data, tensor, and pipeline parallelism

ZeRO optimization: Memory-efficient training

Communication optimization: All-reduce, reduce-scatter patterns

Dynamic load balancing: Handle heterogeneous hardware

Question 2: Inference Optimization

How would you optimize Gemini for low-latency inference while maintaining quality? Discuss both model-level and system-level optimizations.

Model-level Optimization:

Quantization: INT8, FP16, dynamic quantization

Pruning: Structured vs. unstructured pruning

Distillation: Knowledge distillation to smaller models

Architecture modifications: MobileNet-style optimizations

System-level Optimization:

Batching strategies: Dynamic batching, continuous batching

Caching: KV-cache optimization, attention caching

Hardware acceleration: TPU, GPU optimization

Serving infrastructure: Model sharding, load balancing

Safety and Alignment

Question 1: AI Safety in Large Models

What safety measures would you implement in Gemini to prevent harmful outputs? How would you balance safety with model capability?

Safety Mechanisms:

Constitutional AI: Train models to follow principles

RLHF: Reinforcement learning from human feedback

Red teaming: Adversarial testing approaches

Content filtering: Real-time harmful content detection

Implementation Challenges:

Scalability: Maintain safety in large-scale deployment

Cultural sensitivity: Cross-cultural safety standards

Edge cases: Handle novel attack vectors

Performance trade-offs: Impact of safety measures on model capability

Question 2: Bias and Fairness

How would you detect and mitigate bias in a multimodal model like Gemini? What metrics would you use to evaluate fairness?

Bias Detection Methods:

Demographic parity: Output distribution across different groups

Equalized odds: Accuracy consistency across groups

Representation analysis: Demographic representation in training data

Intersectionality: Bias interaction across multiple identities

Mitigation Strategies:

Data augmentation: Increase data for underrepresented groups

Adversarial debiasing: Reduce bias during training

Post-processing: Bias correction during inference

Continuous monitoring: Bias tracking in production environments

Coding Questions - Algorithm and Data Structures

Question 1: Efficient Attention Computation

Implement a memory-efficient attention mechanism that can handle sequences up to 50K tokens. Optimize for both time and space complexity.

def efficient_attention(Q, K, V, chunk_size=1024):

"""

Memory-efficient attention using chunking

Q, K, V: [batch_size, seq_len, d_model]

"""

# Implementation key points:

# 1. Chunked computation to reduce memory

# 2. Gradient checkpointing

# 3. Mixed precision arithmetic

# 4. Sparse attention patterns

passGrading Key Points:

Correct understanding of computational bottlenecks in the attention mechanism

Implementation of memory-efficient chunking strategy

Consideration of numerical stability and precision issues

Discussion of trade-offs across different optimization techniques

Question 2: Multimodal Data Loader

Design and implement a data loader that can efficiently batch multimodal data (text + images) for training. Handle variable-length sequences and different image sizes.

class MultimodalDataLoader:

def __init__(self, dataset, batch_size, max_seq_len):

# Implementation key points:

# 1. Dynamic padding for variable-length sequences

# 2. Image preprocessing and batching

# 3. Memory-efficient data loading

# 4. GPU memory optimization

passTechnical Requirements:

Handle batching of variable-length text sequences

Implement an efficient image preprocessing pipeline

Consider GPU memory constraints

Optimize data loading throughput

Coding Questions - ML System Design

Question 1: Model Serving Architecture

Design a serving system for Gemini that can handle 1M+ requests per day with sub-second latency. Include auto-scaling, load balancing, and monitoring.

System Components:

Load Balancer: Request routing and traffic distribution

Model Servers: Containerized model serving instances

Caching Layer: Response caching and KV-cache management

Monitoring: Tracking of latency, throughput, and error rates

Scalability Considerations:

Horizontal scaling: Auto-scaling based on traffic patterns

Model sharding: Distribute large models across multiple instances

Batch optimization: Dynamic batching for throughput optimization

Resource management: GPU/TPU resource allocation

Question 2: A/B Testing Framework

Design an A/B testing framework for comparing different versions of Gemini. How would you handle statistical significance, bias, and long-term effects?

Framework Components:

Traffic splitting: Assignment of users to different model versions

Metrics collection: Collection of response quality, latency, and user satisfaction data

Statistical analysis: Significance testing, confidence intervals

Bias control: Randomization, stratification

Experiment Design:

Sample size calculation: Power analysis to detect meaningful differences

Experiment duration: Balance statistical power and business needs

Metric selection: Definition of primary and secondary metrics

Long-term tracking: Monitoring for delayed effects

Behavioral Questions - Leadership and Collaboration

Question 1: Cross-functional Collaboration

Tell me about a time you had to work with researchers, product managers, and engineers on a complex ML project. How did you ensure alignment and manage conflicting priorities?

STAR Answer Framework:

Situation: Describe the context of the complex multimodal project

Task: Clarify your role and responsibilities

Action: Detail specific collaboration strategies and communication methods

Result: Demonstrate successful outcomes and lessons learned

Question 2: Technical Leadership

Describe a situation where you had to make a critical technical decision that affected the entire team. How did you gather input and communicate your decision?

Key Points:

Decision-making process: How to collect and analyze technical options

Stakeholder management: Balance concerns of different team members

Risk assessment: Evaluate potential impacts of technical decisions

Communication: Clearly explain complex technical concepts

Behavioral Questions - Problem Solving and Innovation

Question 1: Handling Ambiguity

Google's AI research often involves exploring uncharted territory. Tell me about a time you had to work on a project with unclear requirements or uncertain outcomes.

Answer Key Points:

Ambiguity tolerance: How to remain productive amid uncertainty

Iterative approach: Gradually clarify requirements through experimentation

Risk management: Balance exploration and practical constraints

Learning mindset: Learn from failures and unexpected results

Question 2: Innovation and Impact

Describe a technical innovation you've contributed to that had significant impact. How did you identify the opportunity and drive adoption?

Impact Demonstration:

Problem identification: How to recognize improvement opportunities

Solution development: Development process of the technical innovation

Adoption strategy: How to convince others to adopt your solution

Measurable impact: Quantified results and long-term benefits

Gemini Machine Learning Interview Preparation Tips

Technical Preparation

Core Knowledge Areas:

Transformer Architecture: Attention mechanisms, positional encoding, layer normalization

Large-scale Training: Distributed training, gradient synchronization, memory optimization

Multimodal ML: Vision-language models, cross-modal attention, alignment techniques

Model Optimization: Quantization, pruning, distillation, efficient architectures

AI Safety: RLHF, constitutional AI, bias detection, fairness metrics

Practical Project Suggestions:

Implement a mini-Transformer from scratch

Design a multimodal data processing pipeline

Optimize inference latency of large models

Experiment with different attention mechanisms

Build a model serving system

System Design Preparation

Key Areas:

Distributed Systems: Consensus algorithms, fault tolerance, scalability

ML Infrastructure: Model serving, monitoring, A/B testing

Data Engineering: Large-scale data processing, ETL pipelines

Cloud Platforms: GCP services, Kubernetes, containerization

Practice Suggestions:

Design end-to-end ML systems

Analyze real-world system architectures

Practice capacity planning and resource estimation

Learn industry best practices

Behavioral Interview Preparation

Google Culture Key Points:

Innovation: Pursue breakthrough solutions

Collaboration: Cross-functional teamwork

User Focus: Center on user needs

Technical Excellence: High standards of technical quality

Story Preparation:

Prepare 3-5 detailed project stories

Organize answers using the STAR method

Emphasize impact and learning

Demonstrate a growth mindset

FAQ

What surprised you the most about the Gemini Machine Learning interview

Honestly, it was how much emphasis was placed on cross-functional thinking—not just coding or ML fundamentals. I realized interviewers cared about whether I could connect multimodal research with practical product impact, not only solve algorithmic puzzles.

What common mistakes do candidates make in this interview?

One mistake I’ve seen (and almost made) is pretending to understand niche technologies like advanced quantization or obscure distributed frameworks. Interviewers spot that instantly. Another is over-investing in LeetCode while neglecting system-level ML design, which matters more in Gemini interviews.