Anthropic Coding Interview: My 2026 Question Bank Collection

Before Anthropic's coding round, you’ll receive a scheduling email from the recruiter. The email includes a prompt that looks like this: "This will be a pure programming problem solving interview which doesn't benefit from memorizing standard algorithms or data structures. You can choose to work in your preferred programming language."

While preparing for my Software Engineer interview, I did some research and discovered that Anthropic has a very small question bank—only six questions, with each prompt corresponding to a specific problem. As it turns out, advance preparation really pays off. I was asked the first question, which is also the most frequently tested one.

Below, I’ve organized the prompts, problem descriptions, and some follow-up questions for all six tasks. Note that for Question 2, there are "new" and "old" versions; the new one was introduced after September 2025, while the old one was about "deduplicate files." Also, Anthropic has moved away from Replit (perhaps due to its poor user experience). My interview was conducted on CodeSignal, and I was required to run my code.

I am really grateful to Linkjob.ai for helping me pass my interview, which is why I’m sharing my interview experience and the interview questions I've collected here. Having an AI interview helper indeed provides a significant edge.

Anthropic Coding Question 1: Web Crawler

Prompt

We're interested in seeing you interact in a real work environment, so your interviews may be more open-ended than standard technical problems, and you should feel empowered to engage with your interviewer and ask questions. For this interview, you should be familiar with handling concurrency, using primitives/libraries of your choice.

Problem Description

I was asked to implement a web crawler in Python. The core logic is essentially a BFS—starting from a single seed URL, I had to discover and crawl all links belonging to the same domain.

The interviewer provided a helper function called get_urls(url), which already handles the HTTP requests and basic parsing to extract links, so I didn't have to worry about the low-level scraping. I was asked to implement a synchronous version first. Once that was working, I had to optimize it into a multithreaded/async version.

Key Details to Note:

Domain Constraint: I only needed to process URLs that shared the same domain as the seed URL.

Filtering: I had to include a filter section in the logic to handle deduplication and manage which URLs to visit.

URL Handling: I needed to account for fragments (the part after the #), but I didn't have to worry about complex URL normalization.

Execution: They let me use my own IDE, but the final code had to be pasted into CodeSignal and run. As long as the test result showed the number of crawled URLs was under 100, it was considered correct.
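The synchronous version described above can be sketched roughly as follows. This is a minimal sketch, not the interviewer's reference solution: `get_urls` is stubbed with a hypothetical in-memory `FAKE_WEB` dictionary (the real helper performs HTTP requests), and "same domain" is assumed to mean an identical `netloc`.

```python
from collections import deque
from urllib.parse import urldefrag, urlparse

# Hypothetical stand-in for the interviewer's helper: a tiny in-memory "web".
FAKE_WEB = {
    "https://example.com/": [
        "https://example.com/a",
        "https://example.com/b#top",
        "https://other.org/",
    ],
    "https://example.com/a": ["https://example.com/", "https://example.com/b"],
    "https://example.com/b": ["https://example.com/c#section", "mailto:someone@example.com"],
    "https://example.com/c": [],
}


def get_urls(url):
    """Return the links found on `url` (stubbed here; the real helper does HTTP)."""
    return FAKE_WEB.get(url, [])


def crawl(seed):
    """Breadth-first crawl of every same-domain URL reachable from `seed`."""
    seed, _ = urldefrag(seed)          # drop the "#fragment" part
    domain = urlparse(seed).netloc     # assumption: same domain == same netloc
    visited = {seed}                   # deduplication set
    queue = deque([seed])
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)
        for link in get_urls(url):
            link, _ = urldefrag(link)
            if urlparse(link).netloc != domain:
                continue               # other domain, or a non-HTTP link such as mailto:
            if link in visited:
                continue               # already queued or crawled
            visited.add(link)
            queue.append(link)
    return order
```

Marking a URL as visited when it is queued, rather than when it is dequeued, keeps the same URL from entering the queue twice.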

Follow-up

Follow-up 1: Optimization (multi-threading)

Follow-up 2: Using ThreadPoolExecutor and urlparse for URLs

Follow-up 3: How to process a task immediately once it's ready

Follow-up 4: Threads vs. Processes: differences and use cases

Follow-up 5: Politeness policy (avoiding server overload)

Follow-up 6: Distributed system design (handling millions of URLs)

Follow-up 7: Detecting and handling duplicate content across different URLs

Suggestion

For I/O bound tasks like network or disk operations, you can use coroutines (async/await).

Coroutines provide lightweight concurrency on a single thread using an event loop.

But be careful: check if the underlying APIs are blocking calls. If they are, they’ll block the event loop and kill the concurrency.

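Multi-threading helps with I/O-bound tasks, but in Python you have to consider the GIL: in standard CPython, threads do not run Python bytecode in parallel, so CPU-bound work usually needs multiple processes instead, even on multi-core systems.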

Pro-tip: I suggest just using ThreadPoolExecutor for multi-threading. Don't bother writing your own async parser.
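One way to combine ThreadPoolExecutor with "process a task immediately once it's ready" (Follow-up 3) is `concurrent.futures.wait` with `FIRST_COMPLETED`. The sketch below assumes a stubbed `get_urls` backed by a hypothetical `FAKE_WEB` dictionary, with a short sleep standing in for network latency; the function name `crawl_concurrent` is my own.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from urllib.parse import urldefrag, urlparse

# Hypothetical stand-in for the real site.
FAKE_WEB = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b#top"],
    "https://example.com/a": ["https://example.com/b", "https://other.org/"],
    "https://example.com/b": ["https://example.com/c"],
    "https://example.com/c": ["https://example.com/"],
}


def get_urls(url):
    """Stubbed helper; the sleep simulates a blocking network call."""
    time.sleep(0.01)
    return FAKE_WEB.get(url, [])


def crawl_concurrent(seed, max_workers=8):
    """Crawl same-domain URLs, submitting new work as soon as any fetch finishes."""
    seed, _ = urldefrag(seed)
    domain = urlparse(seed).netloc
    visited = {seed}  # only touched by the main thread, so no lock is needed
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pending = {pool.submit(get_urls, seed)}
        while pending:
            # Wake up when at least one fetch is done instead of waiting for all.
            done, pending = wait(pending, return_when=FIRST_COMPLETED)
            for future in done:
                for link in future.result():
                    link, _ = urldefrag(link)
                    if urlparse(link).netloc == domain and link not in visited:
                        visited.add(link)
                        pending.add(pool.submit(get_urls, link))
    return visited
```

Because the worker threads only call `get_urls` and all bookkeeping stays on the main thread, the `visited` set does not need a lock in this design.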

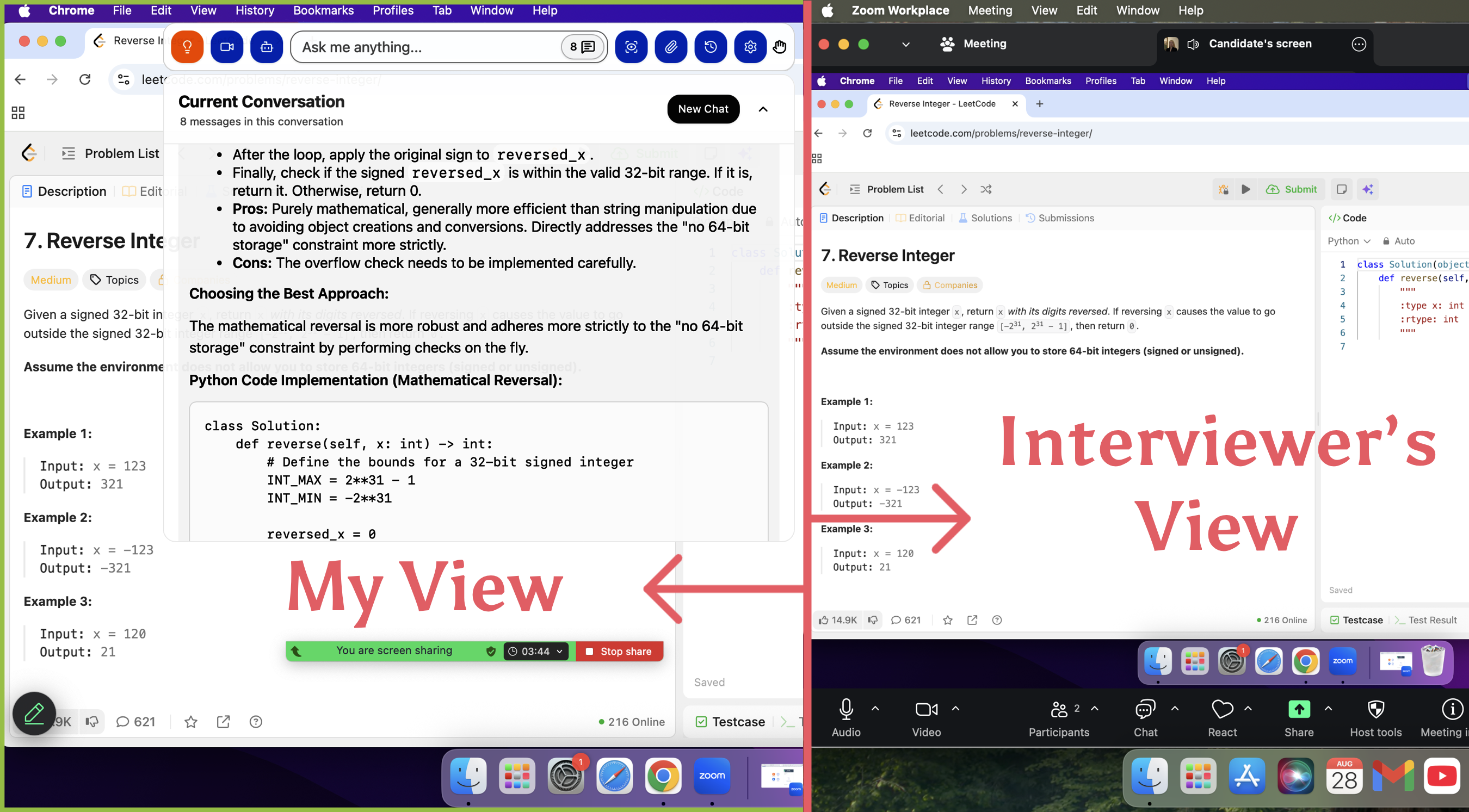

Linkjob AI worked great and I got through my interview without a hitch. It can solve both coding problems and follow-up questions, and it is undetectable. When sharing the screen, the interviewer cannot see it.

Anthropic Coding Question 2: LRU Cache

Prompt

We're primarily interested in seeing how you write code and approach problems. Feel free to ask questions and engage with your interviewer throughout the process. We'll provide you with a template containing the interview problem. You'll work directly in CodeSignal during the interview.

Question

This problem provides an already implemented in-memory cache and requires further extensions based on the basic functionality.

The first question is not strictly an extension but a bug hunt. Once I understood the cache's basic functions, I found the problem at the cache key generation step very quickly.

The second question is to support a durable cache, requiring that if the cache crashes, it can be restarted and must ensure no data loss. The basic idea is still to store it to disk and restore all data from the disk file when the cache restarts after a crash.
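The "store it to disk and restore on restart" idea can be sketched as an append-only log that is replayed at startup. This is only an illustration under my own assumptions: the class name `DurableCache`, the JSON-lines file format, and the restriction to JSON-serializable keys and values are not from the interview template.

```python
import json
import os


class DurableCache:
    """In-memory dict backed by an append-only JSON-lines log (hypothetical design)."""

    def __init__(self, path):
        self.path = path
        self.data = {}
        self._replay()
        self._log = open(path, "a", encoding="utf-8", newline="\n")

    def _replay(self):
        """Rebuild the dict from the log, discarding a torn final record."""
        if not os.path.exists(self.path):
            return
        good_bytes = 0
        with open(self.path, "rb") as f:
            for raw in f:
                if not raw.endswith(b"\n"):
                    break  # incomplete last line from a crash mid-write
                try:
                    record = json.loads(raw)
                except ValueError:
                    break  # corrupted record; stop at the last good one
                self.data[record["key"]] = record["value"]
                good_bytes += len(raw)
        # Cut off any partial record so later appends start on a clean line.
        os.truncate(self.path, good_bytes)

    def put(self, key, value):
        # Write to disk first, then update memory, so an acknowledged put survives a crash.
        self._log.write(json.dumps({"key": key, "value": value}) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())
        self.data[key] = value

    def get(self, key, default=None):
        return self.data.get(key, default)

    def close(self):
        self._log.close()
```

Calling `fsync` on every write trades throughput for durability; batching writes or compacting the log periodically would be natural follow-up discussions.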

Follow-up

Follow-up: CPU-bound vs. I/O-bound workloads, and how to implement the cache in a distributed system.

Suggestion

I used Python. One pitfall in the first question is how to read the args and convert them into a hashable key; I suggest looking closely at how kwargs are handled.
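I don't have the original template, so the following is only a sketch of one plausible way to build a hashable key from args and kwargs. The names `make_key` and `cached` are hypothetical, and the sketch assumes every argument is itself hashable.

```python
import functools


def make_key(args, kwargs):
    """Build a hashable cache key from positional and keyword arguments."""
    # args is already a tuple; kwargs is a dict (unhashable), so turn it into
    # a tuple of items sorted by name, making f(a=1, b=2) and f(b=2, a=1) match.
    return (args, tuple(sorted(kwargs.items())))


def cached(func):
    """Memoize `func` in a plain dict keyed by make_key."""
    store = {}

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        key = make_key(args, kwargs)
        if key not in store:
            store[key] = func(*args, **kwargs)
        return store[key]

    wrapper.store = store  # exposed for inspection in tests
    return wrapper
```

Typical key bugs to look for are ignoring kwargs entirely, depending on kwargs order, or joining arguments into a string so that `("1", "23")` and `("12", "3")` collide.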

Anthropic Coding Question 3: Stack Trace

Prompt

This will be a pure programming problem solving interview which doesn't benefit from memorizing standard algorithms or data structures.

Question

Problem: Converting stack samples to a trace.

Sampling profilers are a performance analysis tool for finding the slow parts of your code by periodically sampling the entire call stack (lots of code might run between samples). In our problem the samples will be a list of Samples of a float timestamp and a list of function names, in order by timestamp, like this:

    #include <iostream>
    #include <string>
    #include <vector>

    struct Sample {
        double ts;
        std::vector<std::string> stack;
    };

Sometimes it's nice to visualize these samples on a chronological timeline of the call stack using a trace visualizer UI. To do this we need to convert the samples into a list of start and end events for each function call. The events should be in a list order such that a nested function call's end event is before the enclosing call's end event. Assume call frames in the last sample haven't finished. The resulting events should use the Event type:

    struct Event {
        std::string kind;
        double ts;
        std::string name;
    };

    std::vector<Event> convertToTrace(const std::vector<Sample> &samples) {
        std::vector<Event> events;
        // TODO for you: actually convert the samples list to events
        events.push_back(Event{"start", 1.0, "main"});
        events.push_back(Event{"start", 2.5, "func1"});
        events.push_back(Event{"end", 3.1, "func1"});
        return events;
    }

    int main() {
        Sample s1{1.0, {"main"}};
        Sample s2{2.5, {"main", "func1"}};
        Sample s3{3.1, {"main"}};
        std::vector<Sample> samples = {s1, s2, s3};
        auto events = convertToTrace(samples);
        for (const auto &e : events) {
            std::cout << e.kind << " " << e.ts << " " << e.name << "\n";
        }
        std::cout << "code end" << std::endl;
        return 0;
    }

Then, write some test cases. I think there are a few points that need attention: first, how to handle cases where two stacks are identical; second, the scenario involving recursive calls; and finally, the fact that if multiple functions end at the same time, their end events need to be emitted in reverse order because the inner functions terminate first. For example, if the start order is f1, f2, f3, the end order should be f3, f2, f1.

    Sample s2{1, {"main"}};
    Sample s3{2, {"main", "f1", "f2", "f3"}};
    Sample s4{3, {"main"}};

Follow-up

Follow-up 1: Since the initial implementation used prefix comparison, the interviewer asked how to handle the logic using postfix comparison.

Follow-up 2: Identify traces that appear consecutively more than N times.

Follow-up 3: Trace de-noising. My approach was to record timestamps: a function call is only added to the results if the duration from "start of current function call to current time" exceeds N. The logic required a significant amount of code and needed to pass two simple test cases. The interviewer was very nice; I finished writing and testing exactly as time ran out.

Follow-up 4: Given a value N, do not generate corresponding events for any function calls that appear consecutively fewer than N times.

Suggestion

We need to pay special attention to recursive calls. For instance, in the sample {0.0, {"a", "b", "a", "c"}}, the function 'a' appearing twice cannot be treated as the same instance. We must differentiate between them based on their depth or position in the stack.
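A minimal Python sketch of the prefix-comparison approach, which distinguishes frames by their position in the stack, might look like this. The snake_case name `convert_to_trace` and the dataclass definitions are my own; the original template is in C++.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    ts: float
    stack: list


@dataclass
class Event:
    kind: str
    ts: float
    name: str


def convert_to_trace(samples):
    """Turn stack samples into start/end events by diffing consecutive stacks."""
    events = []
    prev = []  # stack of the previous sample
    for sample in samples:
        # Length of the common prefix, compared position by position, so the
        # same name at a different depth is treated as a different call.
        common = 0
        limit = min(len(prev), len(sample.stack))
        while common < limit and prev[common] == sample.stack[common]:
            common += 1
        # Frames that disappeared end innermost-first.
        for name in reversed(prev[common:]):
            events.append(Event("end", sample.ts, name))
        # New frames start outermost-first.
        for name in sample.stack[common:]:
            events.append(Event("start", sample.ts, name))
        prev = sample.stack
    # Frames still on the stack in the last sample are assumed unfinished.
    return events
```

For two samples `['a', 'b', 'a', 'c']` and `['a', 'a', 'b', 'c']`, only the outermost `a` is shared, so `c`, the inner `a`, and `b` end before the new `a`, `b`, `c` start.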

Anthropic Coding Question 4: Distributed Finding Mode and Median

Prompt

You should be prepared to think about efficiency in distributed systems, including parallel computation and network efficiency. You should be familiar with common patterns like map-reduce.

Question

Given a very large dataset and an array of machines, the task is to properly assign workloads to find the mode.

In this interview, the focus isn't just on the correctness of the code, but rather on your ability to continuously iterate and optimize a workable solution for better efficiency and shorter execution time. The setup involves 10 nodes with three available primitives: send, recv, and barrier. The constraints are: reading data at 10 bytes/sec, and send/recv at 1 byte/sec.

My takeaway is that 'find median' is highly unlikely to be tested in this format, so I would suggest others focus their preparation entirely on 'find mode.' I proposed three solutions, and interestingly, the naive approach (calculating local counters on each node and aggregating them to Node 0) performed the fastest. However, since the naive solution is clearly not the 'optimal' answer the interviewers are looking for, I felt the session didn't go as well as I had hoped.
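For comparison with the naive "aggregate everything on Node 0" approach, here is a single-process simulation of a map-reduce style alternative, where each value is owned by one node and only one candidate per node reaches the coordinator. Everything here is an assumption for illustration: the real `send`, `recv`, and `barrier` signatures are unknown to me, so plain lists stand in for the network, and the function name `distributed_mode` is hypothetical. It assumes the dataset is non-empty.

```python
from collections import Counter


def distributed_mode(shards):
    """Simulate finding the mode across len(shards) nodes; returns (value, count)."""
    n = len(shards)

    # Map: every node counts its own shard locally (no network traffic).
    local_counts = [Counter(shard) for shard in shards]

    # Shuffle: each node "sends" the count for value v to the node that owns v,
    # chosen by hash(v) % n. inbox[i] models what node i receives.
    inbox = [Counter() for _ in range(n)]
    for counts in local_counts:
        for value, count in counts.items():
            inbox[hash(value) % n][value] += count

    # Reduce: each owner now knows the global count of its values and reports
    # only its single best (value, count) pair to the coordinator.
    candidates = [max(box.items(), key=lambda kv: kv[1]) for box in inbox if box]

    # Coordinator: pick the best candidate overall.
    return max(candidates, key=lambda kv: kv[1])
```

Whether this beats the naive approach depends on how many distinct values there are: the shuffle still moves every local counter entry once, which may explain why the naive version can come out ahead when send/recv is the bottleneck.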

Follow-up

Follow-up: find the median for the same dataset
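One common way to approach a distributed median without shipping the data is a binary search on the value, where each node replies with a single count per round. The sketch below simulates that in one process and assumes integer values and a non-empty dataset; the function name `distributed_median` is hypothetical and this is not necessarily what the interviewers expect.

```python
def distributed_median(shards):
    """Lower median of integer data spread over shards, via binary search on the value."""
    total = sum(len(shard) for shard in shards)
    rank = (total - 1) // 2  # 0-based rank of the lower median

    # One round of communication to learn the global value range.
    lo = min(min(shard) for shard in shards if shard)
    hi = max(max(shard) for shard in shards if shard)

    while lo < hi:
        mid = (lo + hi) // 2
        # Each node would send back one integer: how many of its values are <= mid.
        at_most_mid = sum(sum(1 for x in shard if x <= mid) for shard in shards)
        if at_most_mid >= rank + 1:
            hi = mid        # the median is <= mid
        else:
            lo = mid + 1    # the median is > mid
    return lo
```

Each round costs every node one pass over its local data plus one small message, and the number of rounds grows with the logarithm of the value range.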

Suggestion

Keep in mind that both reading the data and the send/receive process have latency.

Anthropic Coding Question 5: Profiler Trace

Prompt

This will be a standard LeetCode style interview. We recommend Google Colab, but you’re welcome to use whatever environment you feel most comfortable with.

Question

I could not understand the interviewer for the first 30 minutes, then spent 10 minutes understanding the problem. That left barely 10-15 minutes to figure out and solve it.

Problem: Converting stack samples to a trace

Sampling profilers are a performance analysis tool for finding the slow parts of your code by periodically sampling the entire call stack.

In our problem the samples will be a list of Samples of a float timestamp and a list of function names.

Sample stacks contain every function that is currently executing.

The stacks are in order from outermost function (like "main") to the innermost currently executing function.

An unlimited amount of execution/change can happen between samples. We don't have all the function calls that happened, just some samples we want to visualize.

example:

    s2 = [
        Sample(0.0, ['a', 'b', 'a', 'c']),  # a -> b -> a -> c
        Sample(1.0, ['a', 'a', 'b', 'c']),
    ]
    # (s, a), (s, b), (s, a), (s, c)
    # (e, c), (e, a), (e, b), (s, a), (s, b), (s, c)

Here "s" is start and "e" is end.

Follow-up

Identify functions or calls that occur continuously N times or over a period of time t.
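One possible reading of the "N consecutive samples" follow-up is to measure, for every call, how many consecutive samples contain it, and then drop calls whose run is shorter than N before generating events. The sketch below does that filtering step only; the function name `drop_short_calls` and the `(ts, stack)` tuple representation are my own assumptions. Note that runs touching the last sample are lower bounds, since those calls have not finished.

```python
def drop_short_calls(samples, n):
    """samples: list of (ts, stack) tuples, oldest first.

    Returns the same samples with every call removed that is present in
    fewer than n consecutive samples.
    """
    stacks = [stack for _, stack in samples]

    # Forward pass: run[i][d] = number of consecutive samples, ending at i,
    # that contain the same call at depth d (same names at depths 0..d).
    run = []
    for i, stack in enumerate(stacks):
        row, same = [], i > 0
        for d, name in enumerate(stack):
            same = same and d < len(stacks[i - 1]) and stacks[i - 1][d] == name
            row.append(run[i - 1][d] + 1 if same else 1)
        run.append(row)

    # Backward pass: total[i][d] = full length of the run sample i belongs to.
    total = [row[:] for row in run]
    for i in range(len(stacks) - 2, -1, -1):
        for d in range(len(run[i])):
            if d < len(run[i + 1]) and run[i + 1][d] == run[i][d] + 1:
                total[i][d] = total[i + 1][d]

    # A nested call never outlives its caller, so the kept frames form a prefix.
    return [
        (ts, [name for d, name in enumerate(stack) if total[i][d] >= n])
        for i, (ts, stack) in enumerate(samples)
    ]
```

The filtered samples can then go through the usual stack-diffing conversion to produce start and end events only for the calls that lasted long enough.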

Anthropic Coding Question 6: Tokenization

Prompt

We're interested in seeing you interact in a real work environment, so your interviews may be more open-ended than standard technical problems, and you should feel empowered to engage with your interviewer and ask questions. This won’t require any specialized knowledge or prep beyond general familiarity with reading and writing code.

Question

Part 1 was all about code review. They gave me a tokenize and a detokenize method and asked me to figure out what was going on under the hood. After I got a handle on the logic, I had to point out what was actually wrong with that specific tokenization approach. Here is roughly what the code looked like:

    def tokenize(text: str, vocab: dict):
        tokens = []
        key = ""
        for i in range(len(text)):
            key += text[i]
            if key in vocab:
                tokens.append(vocab[key])
                key = ""
        return tokens

    def detokenize(tokens, vocab: dict):
        text = ""
        reversed_vocab = {value: key for key, value in vocab.items()}
        for token in tokens:
            text += reversed_vocab[token]
        return text

    vocab = {
        "a": 1,
        "b": 2,
        "cd": 3
    }

    token = tokenize("acdebe", vocab)
    detokenize(token, vocab)

This part felt like a pretty basic text preprocessing task, just turning text into code. I was a bit tripped up while reading the prompt: if both the text and vocab are inputs, how is the vocab even decided? The interviewer just said they could be anything. I mentioned that the code fails if the vocab can't cover all the characters in the text, and then we moved on.

The next part was a code review. They showed a new version of the code that added a check after the loop: if a key is missing from the vocab, it appends -1 (using vocab.get("UNK", -1)) and returns the tokens. They also added an 'UNK' key to the vocab list.

    vocab = {
        "a": 1,
        "b": 2,
        "cd": 3,
        "UNK": -1
    }

This made me even more confused. The interviewer told me to treat it like a standard code review and provide any comments I had. I noted several points, such as:

What happens if the literal string 'UNK' actually appears in the input text?

What is the specific goal of this change, and what is the expected behavior?

If this is how we handle characters missing from the vocab, then the tokenization still fails for a case like 'xabc' (if 'x' is missing).

Matching shorter tokens first seems inefficient. For example, if the vocab contains the full text 'xxx', it would be better to match that directly and return. (Post-interview, I realized this scenario might be unlikely because the goal of tokenization is to represent the input as efficiently as possible, but as a pure algorithm problem, it’s a valid concern. I think I lacked some of the necessary background knowledge here.)
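The longest-match concern from the review comments above can be illustrated with a greedy tokenizer that tries the longest vocab key first. To keep `detokenize(tokenize(text))` lossless, this sketch encodes characters missing from the vocab as negative numbers derived from their code points. That fallback scheme is purely my own assumption (it requires all real vocab IDs to be non-negative) and is not what the interview code did.

```python
def tokenize(text, vocab):
    """Greedy longest-match tokenizer with a lossless fallback for unknown characters."""
    max_len = max(map(len, vocab), default=0)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, then shrink.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                tokens.append(vocab[piece])
                i += size
                break
        else:
            # No vocab key matches here: encode the raw character as a
            # negative ID (hypothetical scheme; assumes vocab IDs are >= 0).
            tokens.append(-ord(text[i]) - 1)
            i += 1
    return tokens


def detokenize(tokens, vocab):
    """Invert tokenize: look up vocab IDs, decode negative IDs back to characters."""
    reverse = {token_id: key for key, token_id in vocab.items()}
    return "".join(reverse[t] if t >= 0 else chr(-t - 1) for t in tokens)
```

Greedy longest-match is simple but is not guaranteed to produce the fewest tokens for every vocab; it just avoids the earlier problem of a short key shadowing a longer one.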

The interviewer then said that was enough and we moved on. The final task was to implement tokenize myself, specifically to handle cases where characters in the text aren't covered by the vocab keys, while ensuring that tokenize and detokenize still work. By this point, my confusion had peaked, and I performed quite poorly—mainly because I didn't understand the ultimate purpose of this specific tokenization logic. After doing some research post-interview, I realized a simple else block was likely all that was needed:

    def tokenize(text: str, vocab: dict):
        tokens = []
        key = ""
        for i in range(len(text)):
            key += text[i]
            if key in vocab:
                tokens.append(vocab[key])
                key = ""
            else:
                if not any(k.startswith(key) for k in vocab.keys()):
                    # no vocab key starts with this prefix, so treat it as an unknown token
                    tokens.append(vocab.get("UNK", -1))
                    key = ""
        if key:
            # leftover prefix at the end of the text that never completed a vocab key
            tokens.append(vocab.get("UNK", -1))
        print(tokens)
        return tokens

Anthropic Coding Interview Key Insights

Since the Anthropic coding bank only consists of these six problems, most candidates already know them before the interview, leaving virtually zero room for error. My advice is to prepare every question thoroughly, especially the follow-ups, as interviewers will definitely dig deeper.

Even if you nail all the questions, getting an offer still involves a bit of luck because the candidate pipeline is extremely crowded. Sometimes you might feel you performed well, but how the interviewer writes their feedback remains a wild card. Role alignment is also crucial—it often comes down to the Hiring Manager's (HM) personal preference and whether your background clicks with them. These are all factors beyond your control.

Everyone I met during the interviews was very nice, so don't feel pressured or anxious during the conversation.

The technical questions are unlikely to change in the short term and are essentially public info now. Therefore, the real differentiators are likely the Culture Fit and HM rounds, so make sure to prepare for those extensively.

If you’re aiming for Anthropic, apply as early as possible. If you're cold-applying, put effort into your essay, or try to get a referral. I have a feeling they will develop a new set of interview problems before long.

FAQ

What should I do if I get stuck on a question?

I will discuss my current thoughts with the interviewer, which is much better than doing nothing at all. If I really can’t come up with a solution or if time is running out, I’ll use Linkjob AI to help me solve the problem since the interviewer won't be able to see it.

Does the Anthropic coding interview require screen sharing and turning on the camera?

This is what it says in the email from Anthropic, for your reference:

Note that use of AI tools during this interview is not permitted, however Google, Stack Overflow, etc. is allowed. We may ask you to share your screen in Google Meet. People often run into browser permission issues, so we recommend preparing before the interview by starting a google meet with yourself and sharing the screen as a test.

How soon can I expect a follow-up?

If there’s no word after a week, it usually means you didn't get it.
